Machine Learning Engineer’s Guide to MLOps Mastery

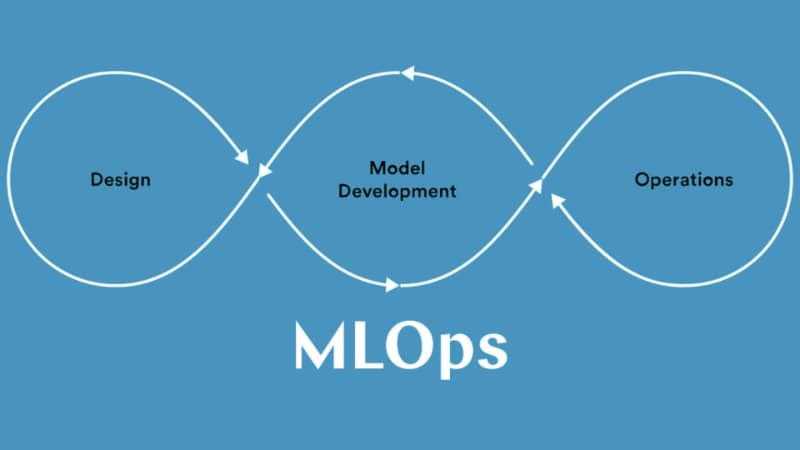

In the field of AI, Machine Learning Engineers are crucial to establishing MLOps, a structured approach to building and deploying deep learning and machine learning models. The MLOps process is designed around the needs of data science teams and aims to give them an effective, repeatable work routine.

The collaborative role frequently involves:

- Machine Learning Engineers

- IT Specialists

- DevOps Engineers

- Data Scientists

Machine Learning Engineers play a pivotal role in establishing a cohesive methodology for the development and deployment of deep learning and machine learning models, known as MLOps. The MLOps pipeline is specifically tailored to meet the requirements of data science teams, ensuring a consistent and efficient workflow.

Machine Learning Engineer’s Operations Services Blueprint:

- Model version control

- Continuous integration and delivery (CI/CD)

- Model service catalogs for deployed models

- Infrastructure management

- Real-time performance monitoring

- Adherence to security

- Governance protocols

In Machine Learning Operations, a defined set of steps prepares an ML model for deployment. These steps help the model scale efficiently to serve users while maintaining its accuracy, which allows it to be integrated into real-world applications.

These specific tasks (managing model versions, implementing continuous integration and delivery (CI/CD), creating service catalogs for models, managing infrastructure, monitoring performance in real time, adhering to security protocols, and enforcing governance standards) matter not only to Machine Learning Engineers but also to the other professionals involved in the project. IT Specialists, DevOps Engineers, and Data Scientists all play a role in the success of Machine Learning Operations. By collaborating on these tasks as a team, they support scalability and accuracy, paving the way for integrating ML models into practical applications.

Purpose-Driven MLOps: An ML Engineer’s Guide:

- MLOps, at its core, is about making sure powerful machine learning programs run seamlessly within a company. It acts as the orchestrator behind the scenes, ensuring a partnership between data scientists and IT professionals.

- In other words, MLOps helps in deploying and managing intelligent applications by combining best practices from data science and IT operations. This ensures that the entire process, from creating these models to maintaining their effectiveness, runs smoothly.

- Businesses can use MLOps to enhance team collaboration, speed up the delivery of machine learning models, and improve efficiency. It is like giving these applications a pass to function seamlessly in real-world scenarios.

- MLOps incorporates smart IT operations strategies to tackle the challenges specific to machine learning. It is akin to having an assistant that handles tasks, tracks changes, and ensures smooth operations.

- Think of MLOps as the guardian angel for machine learning models. It not only aids in creating these tools but also supervises them to maintain accuracy and effectiveness. It acts as a safety net that proactively monitors these models, assists them in learning from new data, and keeps them maintained.

- In simple terms, MLOps is the secret sauce that optimizes the development, implementation, and sustenance of machine learning processes.

It doesn’t just enhance the agility of data science teams; it also plays a role in developing dependable and scalable machine learning applications that deliver benefits to businesses.

Empowering Machine Learning Engineers: The Role of MLOps:

For Machine Learning Engineers, MLOps is the essential guardian angel in developing, implementing, and sustaining machine learning applications. It acts as a behind-the-scenes maestro, ensuring seamless collaboration between data scientists and IT professionals. MLOps facilitates the effective deployment and management of intelligent applications, incorporating best practices from data science and IT operations. It is the ingredient that not only makes data science teams more agile but also contributes to building reliable and scalable machine learning applications, bringing tangible value to businesses. Machine Learning Engineers can consider MLOps their dedicated safety net: it proactively monitors models, helps them learn from new data, and keeps them in good shape throughout their lifecycle.

Unlocking Advantages: Key Benefits of MLOps Implementation:

The way businesses implement, oversee, and grow their machine learning (ML) models is being completely transformed by Machine Learning Operations, or MLOps. This creative method streamlines the whole ML lifecycle by fusing ML procedures with DevOps concepts. The following are some major advantages of using MLOps that may help your company achieve data-driven excellence:

Streamlined Collaboration for Faster, Better ML Results:

- Enhanced Collaboration: MLOps facilitates smooth communication and cooperation between operations, development, and data science teams. This synergy ensures that everyone involved in the ML pipeline works well together, which leads to quicker model deployment and better results.

- Accelerated Time-to-Market: MLOps cuts down the time needed to create, test, and deploy models by automating and streamlining ML activities. A shorter development lifecycle means a faster time to market for new features and products.

- Improved Model Performance: MLOps makes it possible to monitor and adjust models continuously, so that ML models keep performing well. Through this iterative feedback loop, organizations can adapt models to evolving data patterns and business needs, which produces more accurate forecasts.

- Scalability and Efficiency: With MLOps, businesses can extend their machine learning infrastructure to accommodate growing datasets and rising model complexity. This scalability helps the ML system stay responsive and effective even as company demands grow.

Build Smart ML: Reliable, Cost-Effective, Compliant:

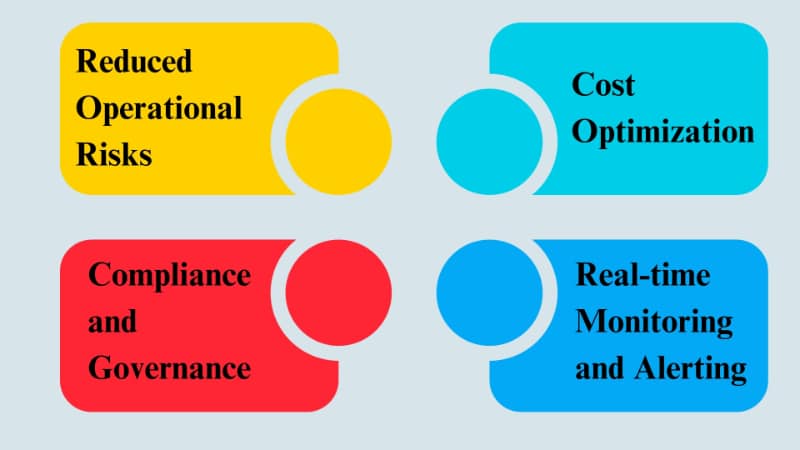

- Reduced Operational Risks: MLOps incorporates rigorous testing and validation processes into the ML lifecycle. This helps identify and mitigate potential issues before they impact production, reducing operational risks and improving the reliability of ML applications.

- Cost Optimization: By automating repetitive tasks and resource allocation, MLOps optimizes resource utilization, resulting in cost savings. Efficient resource management and streamlined processes contribute to a more economical and sustainable ML implementation.

- Compliance and Governance: MLOps facilitates the implementation of robust compliance and governance practices. This is crucial for industries with strict regulatory requirements, where ML processes must adhere to compliance standards and ethical considerations.

- Real-time Monitoring and Alerting: One of the main components of MLOps is the ongoing monitoring of ML models. With real-time information on model performance and automated alerts for anomalies, businesses can fix problems quickly and preserve the integrity of their machine learning applications.

In summary, adopting MLOps is a calculated step toward agility, efficiency, and dependability in machine learning, not just a technical upgrade. By harnessing the key benefits of MLOps, organizations can stay ahead in the competitive landscape and unleash the full potential of their data-driven initiatives.

Key Benefits of MLOps for Machine Learning Engineers:

- Streamlined Workflow: MLOps enhances collaboration and communication, ensuring a seamless workflow for machine learning engineers.

- Rapid Deployment: Implementing MLOps accelerates the deployment of machine learning models, reducing time-to-market.

- Improved Scalability: MLOps facilitates scalable and efficient model deployment, allowing ML engineers to handle increasing workloads.

- Enhanced Collaboration: MLOps fosters collaboration between data scientists, developers, and operations teams, creating a cohesive working environment.

- Robust Monitoring: MLOps implementation provides robust monitoring capabilities, allowing ML engineers to track model performance and address issues promptly.

- Efficient Resource Utilization: MLOps helps optimize resource allocation, ensuring that machine learning engineers utilize computing resources efficiently.

- Continuous Integration and Delivery (CI/CD): MLOps enables ML engineers to implement CI/CD practices, automating testing and deployment for smoother development cycles.

- Version Control: MLOps ensures version control for machine learning models, making it easier for ML engineers to manage and track changes.

Comparing MLOps and DevOps: Understanding the Differences:

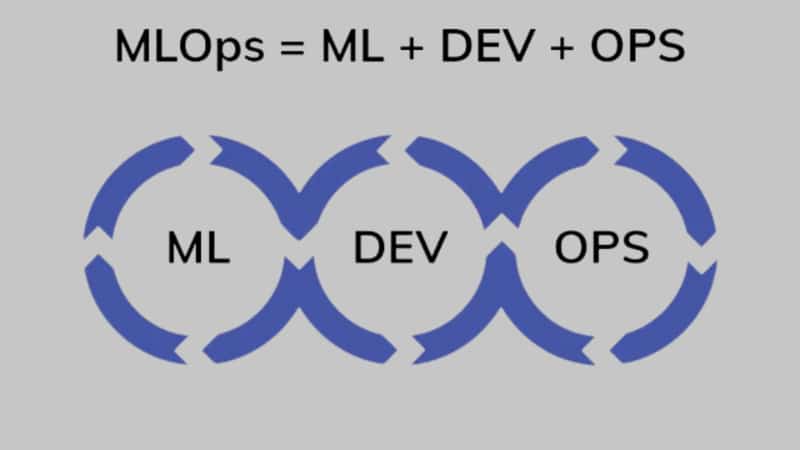

There are several significant distinctions between MLOps (Machine Learning Operations) and DevOps (Development and Operations) when it comes to experimentation, teams, data emphasis, testing, and retraining. Understanding these distinctions is crucial for organizations seeking to optimize their workflows and integrate machine learning models smoothly into their development processes.

Contrasting MLOps and DevOps in Software Development:

- Experimentation:

- MLOps: Concentrates on iterative experiments with machine learning models that need ongoing observation and improvement. The focus is on improving model performance through continuous testing and evaluation.

- DevOps: Prioritizes code experimentation and development cycles, focusing on rapid and reliable software delivery. While experimentation is crucial, it is more centered around software features and functionality.

- Teams:

- MLOps: Involves collaboration among IT operations teams, machine learning engineers, and data scientists. Cross-functional expertise is essential for successful model deployment and maintenance.

- DevOps: Promotes cooperation between the IT operations and development teams, ensuring a unified strategy for software development, testing, and deployment.

- Data Focus:

- MLOps: Emphasizes control, availability, and quality of data since these factors have a significant impact on machine learning model performance.

- DevOps: Although data is important, programming and application functioning are given greater attention. Data considerations in DevOps primarily revolve around database management and application performance.

Contrasting Testing and Retraining in MLOps and DevOps:

- Testing:

- MLOps: Testing includes model validation, accuracy checks, and performance assessment in addition to standard software testing. Continuous testing is vital to ensure the reliability of machine learning models.

- DevOps: Focuses on automated software code testing to guarantee applications’ stability and functioning throughout the development lifecycle.

- Retraining:

- MLOps: Involves retraining machine learning models on fresh data so that they adjust to evolving trends and improve in accuracy over time. Continuous monitoring and retraining are integral components.

- DevOps: Primarily concerns itself with the release and deployment of software updates, with less emphasis on continuous retraining of models.
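The MLOps-specific testing described above (model validation and accuracy checks on top of ordinary software tests) is often wired into a pipeline as a gate that a candidate model must pass before it replaces the current one. The sketch below is a minimal illustration under stated assumptions: the function names, the `GateResult` type, and both thresholds are hypothetical and chosen only for the example.

```python
from dataclasses import dataclass


@dataclass
class GateResult:
    passed: bool
    reasons: list


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the labels."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and equal length")
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


def validation_gate(y_true, candidate_pred, baseline_pred,
                    min_accuracy=0.80, max_regression=0.02):
    """Decide whether a candidate model may replace the baseline.

    Both thresholds are illustrative defaults, not recommendations.
    """
    cand = accuracy(y_true, candidate_pred)
    base = accuracy(y_true, baseline_pred)
    reasons = []
    if cand < min_accuracy:
        reasons.append(f"accuracy {cand:.2f} below floor {min_accuracy:.2f}")
    if base - cand > max_regression:
        reasons.append(f"regression of {base - cand:.2f} against baseline")
    return GateResult(passed=not reasons, reasons=reasons)


# Held-out labels and predictions from two models (made-up toy data).
labels = [1, 0, 1, 1, 0, 1, 0, 0, 1, 1]
baseline = [1, 0, 1, 0, 0, 1, 0, 1, 1, 1]
candidate = [1, 0, 1, 1, 0, 1, 0, 1, 1, 1]

result = validation_gate(labels, candidate, baseline)
print(result.passed, result.reasons)
```

A CI job could fail the build whenever `passed` is false, which is one way to treat model quality the same way DevOps treats failing unit tests.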

In summary, although MLOps and DevOps have many similar concepts, their subtle distinctions are rooted in the particular difficulties and demands posed by the creation and use of machine learning models. Integrating both approaches can create a powerful synergy, enabling organizations to deliver robust software applications infused with machine learning intelligence.

Implementing MLOps for Efficient Machine Learning:

Navigating MLOps Maturity Levels on the Production Journey:

As machine learning (ML) models become increasingly complex and deeply integrated into critical systems, efficient and reliable management becomes paramount. MLOps, a systematic method for deploying, administering, and tracking machine learning models in production, is the result of uniting DevOps concepts with ML methods.

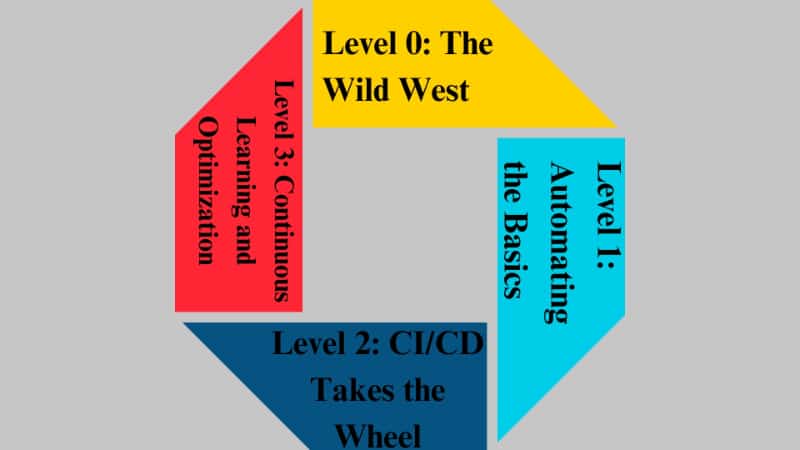

However, the journey towards MLOps maturity doesn’t happen overnight. It’s a gradual process, characterized by distinct levels of maturity that reflect the organization’s approach to model deployment and operations.

Exploring MLOps Levels for Effective Machine Learning:

Level 0 MLOps Unleashed: The Wild West Chronicles:

Imagine a dusty frontier town without any established rules or infrastructure. That’s Level 0 MLOps. This stage is characterized by manual processes, infrequent model deployments, and limited automation. Models are often siloed, making collaboration and monitoring a challenge.

Level 1: Automating Core Processes with Precision Tools:

Things start to get organized at Level 1. Here, the focus shifts to automation, with pipelines established for continuous training and testing. This level utilizes tools like Airflow and JupyterHub to automate repetitive tasks, improving efficiency and reducing errors. However, deployment remains manual, and monitoring capabilities are still evolving.

Level 2: Seamless Collaboration with CI/CD Automation:

Level 2 marks a significant leap forward with the introduction of a fully integrated CI/CD pipeline. This streamlines the model development process, allowing for rapid updates and deployments.

- Automation reigns supreme

- Encompassing data preparation

- Model training

- Deployment

- Monitoring

Teams can now collaborate seamlessly, and robust monitoring systems provide valuable insights into model performance and potential issues.

Level 3: Elevating MLOps with Continuous Learning Mastery:

Level 3 is the frontier of MLOps maturity. Here, the focus extends beyond automation to continuous learning and optimization. Models are monitored in real time, with performance data used to trigger automatic retraining or adjustments. This level involves sophisticated tools and frameworks like MLflow and Run:ai, which provide advanced features for data lineage management, experiment tracking, and model governance.

- Data Lineage Management: Tracking and documenting the end-to-end journey of data, from its origin to consumption, to ensure transparency, quality, and regulatory compliance in the machine learning pipeline.

- Experiment Tracking: Monitoring and recording the details of machine learning experiments, including parameters, data inputs, and outcomes, to facilitate reproducibility, collaboration, and informed decision-making in model development.

- Model Governance: Implementing policies, controls, and processes to manage the lifecycle of machine learning models, ensuring accountability, ethical use, and compliance with organizational and regulatory standards in the MLOps environment.
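To make experiment tracking concrete, here is a minimal sketch that records parameters, metrics, and a data version for each run in an append-only JSON Lines file. It uses only the Python standard library; the `ExperimentLog` class, its method names, and the data version strings are hypothetical, and a dedicated tracking tool would offer far more than this.

```python
import json
import tempfile
import time
import uuid
from pathlib import Path


class ExperimentLog:
    """Append-only JSON Lines log of experiment runs (hypothetical helper)."""

    def __init__(self, path):
        self.path = Path(path)

    def log_run(self, params, metrics, data_version):
        """Store one run and return its generated identifier."""
        record = {
            "run_id": uuid.uuid4().hex,
            "timestamp": time.time(),
            "params": params,
            "metrics": metrics,
            "data_version": data_version,  # ties the run to its input data
        }
        with self.path.open("a", encoding="utf-8") as fh:
            fh.write(json.dumps(record) + "\n")
        return record["run_id"]

    def runs(self):
        """Return all recorded runs in the order they were logged."""
        if not self.path.exists():
            return []
        with self.path.open(encoding="utf-8") as fh:
            return [json.loads(line) for line in fh if line.strip()]

    def best_run(self, metric, higher_is_better=True):
        """Return the run with the best value for the given metric."""
        candidates = [r for r in self.runs() if metric in r["metrics"]]
        if not candidates:
            return None
        pick = max if higher_is_better else min
        return pick(candidates, key=lambda r: r["metrics"][metric])


with tempfile.TemporaryDirectory() as tmp:
    log = ExperimentLog(Path(tmp) / "runs.jsonl")
    log.log_run({"lr": 0.10, "depth": 4}, {"accuracy": 0.88}, "snapshot-a")
    log.log_run({"lr": 0.05, "depth": 6}, {"accuracy": 0.91}, "snapshot-a")
    best = log.best_run("accuracy")
    print(best["params"], best["metrics"])
```

Storing the data version next to the parameters is a small step toward the lineage goal above: a result can be traced back to the data that produced it.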

Machine Learning Engineer’s MLOps Journey: Levels Unveiled:

Level 0 MLOps Unleashed: The Wild West Chronicles:

Picture a frontier town without established rules or infrastructure. That’s Level 0 MLOps for machine learning engineers. This stage involves manual processes, infrequent model deployments, and limited automation. Models are often siloed, posing challenges for collaboration and monitoring.

Level 1: Automating Core Processes with Precision Tools:

As machine learning engineers progress to Level 1, the focus shifts to automation. Precision tools like Airflow and JupyterHub are employed to establish pipelines for continuous training and testing. While efficiency improves and errors are reduced, deployment remains manual, and monitoring capabilities are still evolving.

Level 2: Seamless Collaboration with CI/CD Automation:

Level 2 marks a significant leap forward for machine learning engineers with the introduction of a fully integrated CI/CD pipeline. This streamlines the model development process, allowing for rapid updates and deployments. Automation covers data preparation, model training, deployment, and monitoring. Teams can collaborate seamlessly, and robust monitoring systems offer valuable insights into model performance and potential issues.

Level 3: Elevating MLOps with Continuous Learning Mastery:

Level 3 signifies the cutting edge of MLOps maturity for machine learning professionals. At this stage, the emphasis moves beyond automation to continuous learning and refinement. Models are monitored in real time, and performance metrics initiate retraining or modifications. This stage incorporates tools such as MLflow and Run:ai, offering functionality for managing data lineage, tracking experiments, and overseeing model governance.
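One way to picture the Level 3 idea of performance metrics initiating retraining is a small trigger that watches a rolling window of prediction outcomes. This is only a sketch: the `RetrainingTrigger` class, the window size, and the tolerance are assumptions made for illustration, and it presumes that ground-truth labels eventually arrive for live predictions.

```python
from collections import deque


class RetrainingTrigger:
    """Flags retraining when rolling accuracy falls below baseline minus tolerance."""

    def __init__(self, baseline_accuracy, tolerance=0.05, window=100, min_samples=20):
        self.baseline_accuracy = baseline_accuracy
        self.tolerance = tolerance
        self.min_samples = min_samples
        self.outcomes = deque(maxlen=window)  # keeps only the most recent outcomes

    def record(self, prediction, actual):
        """Store whether a live prediction matched the label that arrived later."""
        self.outcomes.append(prediction == actual)

    def rolling_accuracy(self):
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def should_retrain(self):
        # Avoid reacting to noise before enough feedback has been collected.
        if len(self.outcomes) < self.min_samples:
            return False
        return self.rolling_accuracy() < self.baseline_accuracy - self.tolerance


trigger = RetrainingTrigger(baseline_accuracy=0.90, window=10, min_samples=5)

for _ in range(10):          # healthy period: every prediction is correct
    trigger.record(1, 1)
print(trigger.should_retrain())

for i in range(10):          # degraded period: every second prediction is wrong
    trigger.record(1, 1 if i % 2 == 0 else 0)
print(trigger.rolling_accuracy(), trigger.should_retrain())
```

In a real pipeline the `should_retrain()` signal would start a training job rather than print a value, and the thresholds would be tuned to the cost of a stale model versus the cost of retraining.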

Discovering Your MLOps Maturity with the Level Finder:

Achieving maturity in MLOps is not a one-size-fits-all formula. The right stage depends on what your organization requires and the resources available. Still, knowing the stages enables you to evaluate where you currently stand and pinpoint areas for improvement.

Initiating MLOps: A Guide to Starting Your Journey:

- Identify your goals: What do you want to achieve with MLOps? Increased efficiency? Improved model performance?

- Assess your current state: Evaluate your existing processes, tools, and infrastructure.

- Choose your starting point: Based on your goals and current state, select a level to target.

- Implement gradually: Start small and scale up over time as your team gains experience and confidence.

- Embrace collaboration: MLOps success depends on communication and teamwork.

- Continuously learn and adapt: The MLOps landscape is constantly evolving, so be prepared to adjust your approach as needed.

Optimizing ML Operations: Strategic Steps for Success:

Embarking on a successful Machine Learning Operations (MLOps) journey involves a strategic sequence of essential steps. Let’s delve into each crucial phase to optimize your machine-learning endeavors.

- Scoping for Success: In the initial phase, clearly define the scope of your MLOps project. Understand the objectives, identify stakeholders, and establish key performance indicators (KPIs). A well-defined scope sets the foundation for a successful MLOps implementation.

- Data Engineering Excellence: Data is the lifeblood of any machine learning model. Engage in robust data engineering practices to ensure data quality, accuracy, and relevance. Efficient data pipelines and preprocessing are paramount, laying the groundwork for reliable model training.

- Modeling Mastery: The heart of MLOps lies in crafting powerful and accurate machine learning models. Leverage cutting-edge algorithms, embrace model experimentation, and fine-tune parameters for optimal performance. Rigorous testing and validation ensure that your models meet the desired standards.

- Deployment with Precision: Transitioning from model development to deployment demands precision and efficiency. Employ containerization and orchestration tools to streamline deployment processes. Ensure seamless integration with existing systems and maintain version control for easy updates.

- Vigilant Monitoring: Post-deployment, continuous monitoring is key to the success of any MLOps initiative. Implement robust monitoring solutions to track model performance, detect anomalies, and facilitate timely interventions. Regular updates and improvements based on real-time insights contribute to sustained success.
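The data engineering step above calls for checks on data quality before training. A minimal sketch of such a check follows; the `check_rows` function, the field names, and the allowed ranges are hypothetical and stand in for whatever rules a real dataset needs.

```python
def check_rows(rows, required, ranges):
    """Return a list of (row_index, problem) tuples for rows that break the rules.

    required: field names that must be present and not None.
    ranges:   mapping of field name to an inclusive (low, high) pair.
    """
    problems = []
    for i, row in enumerate(rows):
        for field in required:
            if row.get(field) is None:
                problems.append((i, f"missing {field}"))
        for field, (low, high) in ranges.items():
            value = row.get(field)
            if value is not None and not (low <= value <= high):
                problems.append((i, f"{field}={value} outside [{low}, {high}]"))
    return problems


# Toy records with made-up fields; the second and third rows are faulty.
rows = [
    {"age": 34, "income": 52000.0},
    {"age": None, "income": 41000.0},
    {"age": 230, "income": -5.0},
]

issues = check_rows(
    rows,
    required=["age", "income"],
    ranges={"age": (0, 120), "income": (0, 10_000_000)},
)
for index, problem in issues:
    print(index, problem)
```

Running a check like this at the start of a pipeline lets bad records be quarantined before they reach model training.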

Initiating MLOps: A Guide for Machine Learning Engineers:

- Set Goals: Define your MLOps objectives. Do you aim for increased efficiency or improved model performance as a Machine Learning Engineer?

- Assess Current State: Evaluate processes, tools, and infrastructure from your Machine Learning Engineer’s perspective.

- Choose Starting Point: Select a level based on goals and current state. Start small, scale gradually, leveraging your expertise.

- Implement Gradually: Begin with small-scale implementations, building confidence and expertise over time.

- Embrace Collaboration: Effective communication and teamwork are vital for MLOps success among Machine Learning Engineers.

- Learn and Adapt: The MLOps landscape evolves; be ready to adjust your approach as a Machine Learning Engineer.

Strategic MLOps: Success for Machine Learning Engineers:

- Scope for Success: Define the project scope in the initial phase. Understand objectives, identify stakeholders, and establish KPIs as a Machine Learning Engineer.

- Data Engineering Excellence: Engage in robust data engineering practices, ensuring quality, accuracy, and relevance for reliable model training.

- Modeling Mastery: Craft powerful ML models by leveraging cutting-edge algorithms, embracing experimentation, and fine-tuning parameters for optimal performance.

- Deployment Precision: Transition from model development to deployment with precision. Use containerization and orchestration tools for streamlined processes, ensuring seamless integration and version control.

- Vigilant Monitoring: Post-deployment, continuous monitoring is crucial for MLOps success as a Machine Learning Engineer. Implement robust solutions for tracking model performance, detecting anomalies, and facilitating timely interventions. Regular updates based on real-time insights contribute to sustained success.

Optimal MLOps Implementation: Essential Best Practices:

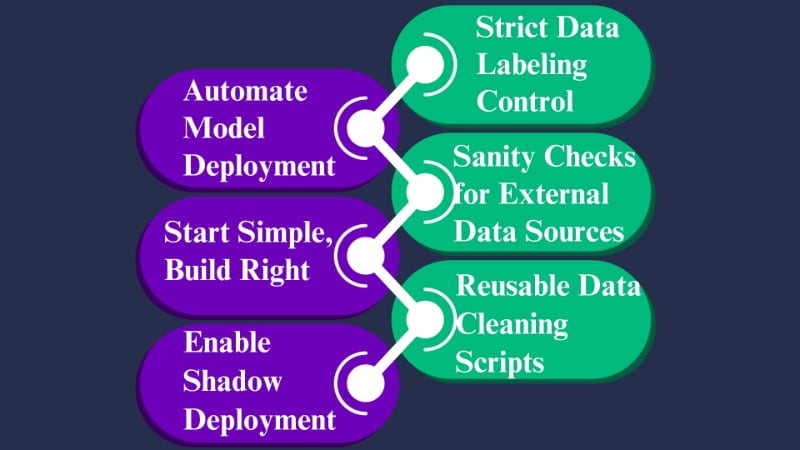

Best Practices for ML Deployment & Data Quality Control

- Automate Model Deployment: Embrace automation tools to streamline the deployment of machine learning models. This reduces manual errors, accelerates deployment cycles, and ensures a seamless transition from development to production.

- Start Simple, Build Right: Begin with a straightforward approach to model development. Focus on building a solid infrastructure that can accommodate more complex models in the future. This sets the foundation for scalability and adaptability.

- Enable Shadow Deployment: Implement shadow deployment strategies to run new models alongside existing ones without affecting the production environment. This allows for real-world testing and validation before full deployment.

- Strict Data Labeling Control: Exercise strict control over data labeling processes to maintain data quality and accuracy. Consistent and reliable labels are essential for training models that generalize well to diverse scenarios.

- Sanity Checks for External Data Sources: Make sanity tests of external data sources a top priority to guarantee the accuracy and consistency of incoming data. Identify and rectify anomalies promptly to maintain the integrity of your machine learning pipeline.

- Reusable Data Cleaning Scripts: Create reusable programs to clean and combine data. This promotes consistency in preprocessing steps, making replicating and maintaining data preparation processes across various projects easier.
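Shadow deployment, mentioned in the list above, can be sketched as a thin router: every request is answered by the current model, while the new model sees the same input and its output is only recorded for comparison. The `ShadowRouter` class and the two toy models below are hypothetical; a production setup would usually run the shadow call asynchronously so it cannot add latency.

```python
class ShadowRouter:
    """Serves the primary model and silently records the shadow model's output."""

    def __init__(self, primary, shadow):
        self.primary = primary
        self.shadow = shadow
        self.comparisons = []  # (features, primary_output, shadow_output or None)

    def predict(self, features):
        primary_out = self.primary(features)
        try:
            shadow_out = self.shadow(features)
        except Exception:
            shadow_out = None  # a failing shadow model must never affect callers
        self.comparisons.append((features, primary_out, shadow_out))
        return primary_out  # callers only ever see the primary result

    def agreement_rate(self):
        """Share of requests where both models produced the same output."""
        scored = [c for c in self.comparisons if c[2] is not None]
        if not scored:
            return None
        return sum(1 for _, p, s in scored if p == s) / len(scored)


# Two toy "models": flag a transaction when the amount exceeds a threshold.
def current_model(features):
    return int(features["amount"] > 100)


def new_model(features):
    return int(features["amount"] > 80)


router = ShadowRouter(current_model, new_model)
for amount in (50, 90, 150, 120):
    router.predict({"amount": amount})

print(router.agreement_rate())
```

Reviewing the recorded disagreements (here, the request with amount 90) is what gives the real-world validation before the new model is promoted.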

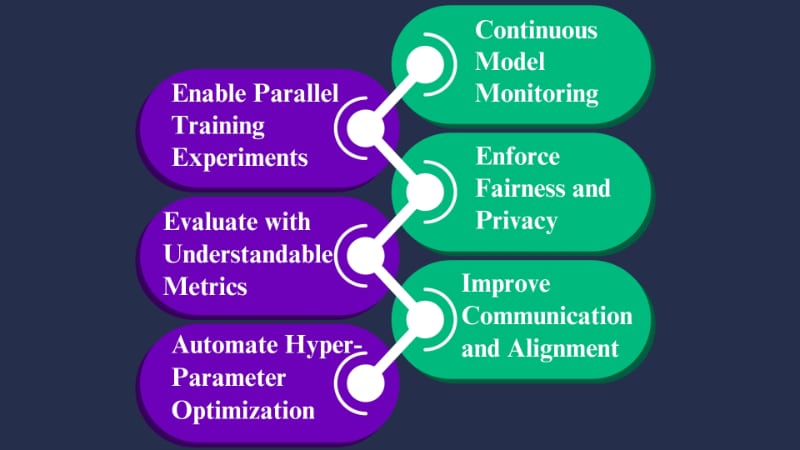

Streamlining Practices for Rapid Model Development:

- Enable Parallel Training Experiments: Optimize training efficiency by enabling parallel training experiments. This accelerates the exploration of different model architectures and hyperparameters, leading to faster model development.

- Evaluate with Understandable Metrics: Assess model training using simple and understandable metrics. Clear evaluation metrics provide insights into model performance, making it easier for teams to interpret results and iterate on model improvements.

- Automate Hyper-Parameter Optimization: Implement automated processes for hyper-parameter optimization. This ensures that models are fine-tuned for optimal performance without the need for manual intervention.

- Continuous Model Monitoring: Establish continuous monitoring mechanisms to track the behavior of deployed models. Real-time insights allow for proactive identification and resolution of issues, ensuring ongoing model reliability.

- Enforce Fairness and Privacy: Prioritize fairness and privacy in model development by implementing measures to detect and address biases. Ensure that sensitive data is handled responsibly, adhering to privacy regulations.

- Improve Communication and Alignment: Enhance communication and alignment between cross-functional teams. Clear communication channels and collaborative efforts ensure that all stakeholders are on the same page, fostering a more cohesive and productive MLOps environment.
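Parallel training experiments and automated hyper-parameter optimization, both listed above, combine naturally in a random search that evaluates many configurations at once. The sketch below uses a toy scoring function in place of real training; `train_and_score`, the parameter names, and the search ranges are assumptions for illustration. Threads are used only to keep the example self-contained, and CPU-heavy training would normally run in separate processes or on separate machines.

```python
import random
from concurrent.futures import ThreadPoolExecutor


def train_and_score(params):
    """Stand-in for a real training run: a toy objective peaking at lr=0.1, depth=6."""
    lr, depth = params["lr"], params["depth"]
    return -((lr - 0.1) ** 2) - 0.01 * (depth - 6) ** 2


def sample_params(rng):
    """Draw one random configuration from the (hypothetical) search space."""
    return {"lr": rng.uniform(0.001, 0.5), "depth": rng.randint(2, 12)}


def random_search(n_trials=20, max_workers=4, seed=0):
    """Evaluate n_trials random configurations concurrently and return the best."""
    rng = random.Random(seed)  # seeded so the search is reproducible
    trials = [sample_params(rng) for _ in range(n_trials)]
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        scores = list(pool.map(train_and_score, trials))  # map preserves trial order
    best = max(range(n_trials), key=lambda i: scores[i])
    return trials[best], scores[best]


best_params, best_score = random_search()
print(best_params, best_score)
```

Random search is only one option; the same structure works with grid search or a smarter optimizer, as long as each trial can be evaluated independently.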

Organizations may create a reliable and effective process for deploying machine learning models by following these MLOps best practices. From automation to continuous monitoring and improved communication, these guidelines contribute to the successful implementation of MLOps, enabling organizations to derive maximum value from their machine-learning initiatives.

Optimal MLOps: Best Practices for Machine Learning Engineers

- Clear Objectives: Define precise MLOps goals aligned with improved efficiency and model performance as a Machine Learning Engineer.

- Thorough Assessment: Evaluate existing processes, tools, and infrastructure from a Machine Learning Engineer’s perspective to identify areas for improvement.

- Strategic Starting Point: Choose a level tailored to your goals and current state, starting small and scaling gradually to build confidence.

- Collaboration Embrace: Prioritize effective communication and teamwork for successful MLOps implementation among Machine Learning Engineers.

- Continuous Learning: Stay adaptable in the evolving MLOps landscape. Be prepared to adjust your approach and leverage new methodologies.

- Scope Definition: Clearly define the project scope in the initial phase, understand objectives, identify stakeholders, and establish key performance indicators (KPIs).

- Data Quality Focus: Engage in robust data engineering practices, ensuring quality, accuracy, and relevance for reliable model training.

- Modeling Excellence: Craft powerful ML models by embracing cutting-edge algorithms, experimentation, and fine-tuning parameters for optimal performance.

- Deployment Precision: Transition from model development to deployment with precision, utilizing containerization and orchestration tools for streamlined processes.

- Vigilant Monitoring: Implement robust monitoring solutions post-deployment, tracking model performance, detecting anomalies, and facilitating timely interventions. Regular updates based on real-time insights contribute to sustained success.

Streamlining MLOps for Machine Learning Engineers:

Optimizing ML Deployment with CI/CD and Orchestration:

- Testing:

- Unit Testing: It is essential to check each part of the ML system thoroughly. This includes testing the data preparation, feature selection, and model prediction code to confirm that each works correctly.

- Integration Testing: It is crucial to make sure that the various parts work well together. Checking that these parts integrate smoothly ensures the effectiveness and coherence of the system.

- Performance Testing: Evaluating the speed, scalability, and efficiency of the machine learning model under realistic conditions is another key task. Performance testing helps the machine learning engineer fine-tune the model for its intended use cases.

- Deploying:

- Containerization: By using containerization tools like Docker, ML engineers can achieve consistent deployment across environments. They package both the ML model and its dependencies into containers, making deployment easier and reducing compatibility problems.

- Orchestration: By using orchestration tools such as Kubernetes, ML engineers can automate the deployment, scaling, and management of machine learning systems. This automation boosts efficiency and reliability.

- Continuous Deployment: Setting up CI/CD pipelines automates the deployment process. This allows ML engineers to push changes to the production environment efficiently and dependably, enabling seamless development and deployment cycles.

- Managing:

- Version Control: Machine learning engineers use version control systems to keep track of changes made to the machine learning model, code, and dependencies. This allows team members to work together effectively and makes it possible to revert to earlier versions if problems occur.

- Model Registry: It is crucial to have a central store where versions of machine learning models can be saved and managed. This setup allows machine learning engineers to access and compare models, promoting transparency and reproducibility.

- Configuration Management: ML engineers use configuration files to handle parameters, hyperparameters, and other settings, allowing for flexibility in fine-tuning and deploying models while simplifying model management.

- Monitoring:

- Logging: ML developers incorporate logging to record how the model performs, any issues that arise, and the flow of data in and out while it is running. This helps with troubleshooting and gives insight into how the model behaves in real-world settings.

- Alerting: Machine learning engineers set up alert systems to inform the relevant parties about irregularities, deviations from expected patterns, or model degradation. Timely notifications enable quick reactions to such concerns.

- Drift Detection: Machine learning engineers need to keep a consistent eye on data drift and model drift. Shifts in input patterns or model outputs indicate the need for updates or retraining to preserve the model’s long-term effectiveness.
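As an illustration of drift detection on a single numeric feature, the sketch below computes the Population Stability Index (PSI), one common way to compare a live sample against the training-time reference. PSI is my choice for the example, not something the text prescribes; the bin count and the small `eps` floor are assumptions, and the oft-quoted reading of PSI above roughly 0.25 as a significant shift is a rule of thumb rather than a standard.

```python
import math


def psi(reference, current, bins=10, eps=1e-6):
    """Population Stability Index between a reference and a current sample.

    Bins are equal-width over the reference range; values outside that range
    are counted in the first or last bin.
    """
    if not reference or not current:
        raise ValueError("both samples must be non-empty")
    low, high = min(reference), max(reference)
    if high == low:
        raise ValueError("reference sample has no spread")
    width = (high - low) / bins

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - low) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values
            counts[idx] += 1
        # Floor each proportion at eps so the logarithm below is always defined.
        return [max(c / len(values), eps) for c in counts]

    ref_p = proportions(reference)
    cur_p = proportions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_p, cur_p))


# Synthetic feature values: the live sample is the reference shifted upward.
reference_sample = [i / 100 for i in range(1000)]
live_sample = [v + 3 for v in reference_sample]

print(psi(reference_sample, reference_sample))
print(psi(reference_sample, live_sample))
```

A monitoring job could compute this per feature on a schedule and raise an alert, or start retraining, when the value crosses the chosen threshold.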

The practices described above are not limited to machine learning engineers. They also apply to a range of professionals, including IT experts, DevOps specialists, and data scientists. Individuals in these fields share responsibility for the success of machine learning initiatives through testing, strategic deployment, effective management, and continuous monitoring. Testing matters for everyone involved, covering unit tests, integration tests, and performance evaluations regardless of role. Deployment techniques such as containerization, orchestration, and continuous deployment are practices embraced by IT professionals and DevOps experts to streamline automated processes.
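To show what unit testing of a data preparation step can look like, here is a small sketch with a hypothetical `min_max_scale` function and three tests for it. The tests are plain functions with assertions, written so that a runner such as pytest could collect them; the function and the cases are illustrative assumptions, not part of any particular project.

```python
def min_max_scale(values):
    """Scale numbers to the range [0, 1]; a constant column maps to all zeros."""
    low, high = min(values), max(values)
    if high == low:
        return [0.0 for _ in values]  # avoid division by zero
    return [(v - low) / (high - low) for v in values]


def test_output_is_bounded():
    scaled = min_max_scale([3, 7, 11, -2])
    assert min(scaled) == 0.0
    assert max(scaled) == 1.0


def test_constant_column_does_not_fail():
    assert min_max_scale([5, 5, 5]) == [0.0, 0.0, 0.0]


def test_order_is_preserved():
    scaled = min_max_scale([10, 20, 15])
    assert scaled[0] < scaled[2] < scaled[1]


# Run the checks directly when no test runner is available.
for check in (test_output_is_bounded,
              test_constant_column_does_not_fail,
              test_order_is_preserved):
    check()
print("all preprocessing checks passed")
```

The same pattern extends to feature selection and prediction code: fix a tiny input, state the property that must hold, and let the pipeline fail fast when it does not.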

Version control, model registries, and configuration management are important for keeping things clear and working well within teams, no matter what they specialize in. Monitoring methods like logging, alerting, and drift detection are crucial for data scientists, IT experts, and DevOps engineers to make sure machine learning models work reliably in real-world situations. These common principles help everyone in IT, DevOps, and data science succeed together.
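The model registry idea mentioned above can be sketched in memory: each registered artifact gets an incrementing version, a checksum, its metrics, and a stage, and promoting one version archives the previous production model. The `ModelRegistry` and `ModelVersion` names, the stage labels, and the byte strings standing in for model files are all hypothetical; a real registry would persist this information and store the artifacts themselves.

```python
import hashlib
from dataclasses import dataclass


@dataclass
class ModelVersion:
    name: str
    version: int
    checksum: str
    metrics: dict
    stage: str = "staging"


class ModelRegistry:
    """In-memory registry that tracks versions and stages of named models."""

    def __init__(self):
        self._versions = {}  # model name -> list of ModelVersion

    def register(self, name, artifact_bytes, metrics):
        """Add a new version; the checksum identifies the exact artifact."""
        versions = self._versions.setdefault(name, [])
        entry = ModelVersion(
            name=name,
            version=len(versions) + 1,
            checksum=hashlib.sha256(artifact_bytes).hexdigest(),
            metrics=dict(metrics),
        )
        versions.append(entry)
        return entry

    def get(self, name, version):
        versions = self._versions.get(name, [])
        if not 1 <= version <= len(versions):
            raise KeyError(f"{name} has no version {version}")
        return versions[version - 1]

    def promote(self, name, version):
        """Make one version the production model and archive the previous one."""
        target = self.get(name, version)
        for entry in self._versions[name]:
            if entry.stage == "production":
                entry.stage = "archived"
        target.stage = "production"
        return target

    def production(self, name):
        for entry in self._versions.get(name, []):
            if entry.stage == "production":
                return entry
        return None


registry = ModelRegistry()
registry.register("churn", b"weights-v1", {"accuracy": 0.88})
registry.register("churn", b"weights-v2", {"accuracy": 0.91})
registry.promote("churn", 2)
print(registry.production("churn").version)

registry.promote("churn", 1)  # roll back if version 2 misbehaves
print(registry.production("churn").version, registry.get("churn", 2).stage)
```

Because promotion is just a stage change, rolling back is the same operation pointed at an older version, which is what makes a registry useful across teams.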

Machine Learning Engineers’ Path to Precision: CRISP-ML(Q):

CRISP-ML(Q) serves as a standardized process model tailored for the development of machine learning (ML) projects. The name stands for Cross-Industry Standard Process for the development of Machine Learning applications with Quality assurance methodology, and the model extends the well-established CRISP-DM (Cross-Industry Standard Process for Data Mining) to address the nuances of machine learning applications.

For a machine learning engineer, CRISP-ML(Q) provides a structured framework that guides them through the entire lifecycle of ML development. This begins with a thorough understanding of the business objectives, where the engineer collaborates closely with stakeholders to define clear goals and requirements for the ML solution. This initial phase emphasizes the importance of aligning ML models with the broader strategic objectives of the organization.

Moving forward, the CRISP-ML(Q) model assists the machine learning engineer in data understanding and preparation. This involves sourcing, cleaning, and transforming data to make it suitable for training and evaluation. The engineer leverages their expertise to ensure that the data used in the ML project is relevant, accurate, and representative of the real-world scenarios the model is intended to address.

Synergizing IT, DevOps, and Data Science in ML Projects

CRISP-ML(Q) is suitable for IT specialists, DevOps engineers, and data scientists alike. As a specialized extension of CRISP-DM, the framework guides professionals through the multifaceted ML development lifecycle. Collaborating closely with stakeholders, IT specialists ensure that ML solutions align with overarching business objectives. DevOps engineers contribute to data understanding and preparation, refining and transforming data to meet the rigorous demands of ML model development.

Data scientists, integral to the process, employ various algorithms to build and evaluate models, emphasizing accuracy and reliability. The process model underscores the importance of effective communication, urging all specialists to interpret and explain models to diverse stakeholders. From deployment to maintenance, IT specialists, DevOps engineers, and data scientists collectively navigate the complexities of CRISP-ML(Q), ensuring the successful integration of ML solutions into real-world scenarios.

Innovation Unleashed: MLOps Stack Canvas Strategies:

The MLOps Stack Canvas specifies the architecture and infrastructure stack for MLOps. This includes determining the platforms, technologies, and tools required to support the whole lifecycle of machine learning models, from creation to deployment and monitoring.

Championing Accountability: ML Model Governance 101:

The procedures put in place to oversee and guarantee the appropriate use of ML models are referred to as ML Model Governance. This includes defining and enforcing policies around model development, deployment, and ongoing monitoring to address ethical considerations, compliance requirements, and overall responsible AI practices.

To support the efficient and responsible deployment of machine learning models in production contexts, MLOps concepts include standardized development methods, architectural considerations, and governance practices. These principles are vital for organizations seeking to derive value from their ML initiatives while maintaining reliability, scalability, and ethical use of AI technologies.
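One way to picture policy enforcement is a simple pre-deployment gate that checks a model's metadata against governance rules. The sketch below is purely illustrative: the `ModelRecord` fields, the four rules, and the 0.8 accuracy threshold are assumptions, not part of any particular governance standard or tool.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ModelRecord:
    """Hypothetical metadata tracked for each model version."""
    name: str
    version: str
    owner: str = ""
    training_data_ref: str = ""
    validation_accuracy: float = 0.0
    approved_by: List[str] = field(default_factory=list)


def governance_violations(record: ModelRecord, min_accuracy: float = 0.8) -> List[str]:
    """Return the list of policy violations; an empty list means the gate passes."""
    violations = []
    if not record.owner:
        violations.append("missing owner")
    if not record.training_data_ref:
        violations.append("missing training data reference")
    if record.validation_accuracy < min_accuracy:
        violations.append("validation accuracy below policy threshold")
    if not record.approved_by:
        violations.append("no reviewer approval recorded")
    return violations


# Illustrative records: one complete, one missing most governance metadata.
complete = ModelRecord(
    name="churn-model",
    version="1.2.0",
    owner="ml-team",
    training_data_ref="customer-snapshot-v3",
    validation_accuracy=0.91,
    approved_by=["reviewer-a"],
)
incomplete = ModelRecord(name="churn-model", version="1.3.0")
```

A deployment pipeline could refuse to promote any model for which `governance_violations` returns a non-empty list, which keeps the policy in one auditable place.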

Intelligent Solutions: ML at the Core of Software:

The process of designing software driven by machine learning (ML) is intricate and requires a thorough understanding of the business needs as well as the nuances of the ML workflow lifecycle. Let’s explore the importance of understanding business requirements and provide an overview of a typical end-to-end ML workflow lifecycle.

Essential Insight: Business Requirement Understanding:

- Problem Definition: Begin by clearly defining the problem that the ML-powered software aims to solve. Understand the specific challenges and goals of the business.

- Data Relevance: Identify and gather relevant data that aligns with the business requirements. Quality data is crucial for training accurate ML models.

- Scope and Constraints: Define the ML solution’s scope and any constraints that might impact the design and implementation. This ensures realistic expectations and feasible outcomes.

- Business Impact: Understand the potential impact of the ML solution on the business. This involves assessing how the software will contribute to goals such as efficiency improvement, cost reduction, or revenue increase.

- Integration with Existing Systems: Consider how the ML-powered software will integrate with existing systems and workflows within the organization. Seamless integration improves adoption and efficiency.

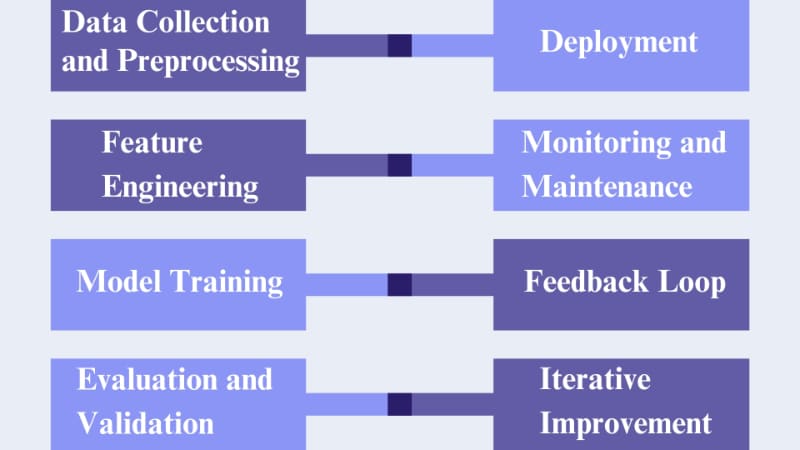

Complete ML Workflow: From Inception to Deployment:

- Data Collection and Preprocessing: Acquire and clean the data, ensuring it is relevant and representative. Preprocessing involves handling missing values, scaling, and encoding categorical variables.

- Feature Engineering: Select and construct features that add significant value to the machine learning model. This stage transforms raw data into a format suitable for model training.

- Model Training: Choose an appropriate ML algorithm based on the nature of the problem. Train the model using historical data, and validate its performance using a separate dataset.

- Evaluation and Validation: Assess the model’s performance using metrics relevant to the business problem, such as accuracy, precision, and recall.

- Deployment: Deploy the ML model into the production environment. Ensure compatibility with existing software systems and monitor its performance in real-world conditions.

- Monitoring and Maintenance: Continuously monitor the model’s performance post-deployment. Put in place procedures for updating and retraining the model with fresh data to preserve accuracy.

- Feedback Loop: Establish a feedback loop between end users and developers. Gather feedback to improve the model and address any issues that arise during real-world usage.

- Iterative Improvement: Iterate on the model and the entire ML workflow based on feedback, changing business requirements, and evolving data patterns.

Understanding business requirements is foundational to this entire process, ensuring that the ML-powered software aligns with the organization’s goals and delivers meaningful value. Regular collaboration between data scientists, developers, and business stakeholders is essential for a successful ML implementation.
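To make the evaluation step of the workflow concrete, the following sketch computes accuracy, precision, and recall for a binary classifier from true and predicted labels. The function name and the sample labels are made up for illustration; in practice these metrics would be computed on a held-out validation set.

```python
from typing import Dict, Sequence


def classification_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Accuracy, precision, and recall for binary labels (1 = positive class)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives
    total = tp + fp + fn + tn
    return {
        "accuracy": (tp + tn) / total if total else 0.0,
        # Precision: of everything predicted positive, how much was right.
        "precision": tp / (tp + fp) if (tp + fp) else 0.0,
        # Recall: of everything actually positive, how much was found.
        "recall": tp / (tp + fn) if (tp + fn) else 0.0,
    }


# Hypothetical validation labels and model predictions.
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 0, 1, 0, 1, 0, 1, 0]
metrics = classification_metrics(y_true, y_pred)
```

Which metric matters most depends on the business problem: recall is usually the priority when missing a positive case is costly, precision when false alarms are.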

ML Mastery Unveiled: Exploring Software’s Three Phases:

The three tiers of ML-based software correspond to discrete phases in the creation and implementation of ML applications.

- Data Engineering Pipelines:

    - Data Collection: Gathering relevant data from various sources, ensuring its quality, and aggregating it for analysis.

    - Data Cleaning: Identifying and handling errors, missing values, and outliers to ensure that the data is accurate and reliable.

    - Data Preparation: Organizing and transforming the data to make it appropriate for ML model training.

- ML Pipelines and ML Workflows:

    - Model Training: The phase where machine learning models are developed using algorithms and historical data. This involves selecting the appropriate model, training it on the prepared data, and fine-tuning its parameters for optimal performance.

    - Deployment Automation: In this stage, automated processes deploy trained models into production environments. This includes packaging models, creating APIs, and setting up the infrastructure for model deployment.

- Model Serving Patterns and Deployment Strategies:

    - Model Serving Patterns: The architectural patterns used for serving machine learning models in production. This could involve deploying models as microservices, using serverless architectures, or employing edge computing for inference.

    - Deployment Strategies: The various approaches to rolling out models, such as A/B testing, canary releases, and blue-green deployments. These strategies help minimize risk and ensure a smooth transition of ML models into real-world applications.

By addressing these three levels, ML-based software development covers the entire lifecycle, from handling raw data to deploying and serving models in production. Each level plays a crucial role in building robust, scalable, and efficient machine learning applications.
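As a rough sketch of how a canary release might split traffic, the example below routes a fixed percentage of users to a new model version based on a hash of the user ID. The function name, the `"stable"`/`"canary"` labels, and the hashing scheme are assumptions made for illustration; real deployments typically delegate this to a load balancer or service mesh.

```python
import hashlib


def route_request(user_id: str, canary_percent: int) -> str:
    """Return 'canary' for roughly canary_percent% of users, else 'stable'.

    Hashing the user ID keeps the assignment deterministic, so the same
    user always reaches the same model version during the rollout.
    """
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100  # bucket in the range 0..99
    return "canary" if bucket < canary_percent else "stable"


# Simulate a 10% canary rollout over hypothetical user IDs.
assignments = [route_request(f"user-{i}", canary_percent=10) for i in range(10_000)]
canary_share = assignments.count("canary") / len(assignments)
```

Raising `canary_percent` step by step while watching the monitoring metrics gives a gradual rollout; setting it back to 0 sends all traffic to the stable model again.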

Machine Learning Engineers Mastering MLOps Dynamics:

Embarking on the path of machine learning engineering involves navigating the landscapes of MLOps, ML Model Governance, and ML-driven software development. In MLOps, the MLOps Stack Canvas acts as a roadmap detailing the architecture and infrastructure elements for the lifecycle of ML models, from inception to deployment and monitoring. The commitment to accountability is evident in ML Model Governance, where policies are defined and enforced to uphold responsible practices in machine learning and comply with regulations.

Understanding the stages of ML-driven software development is crucial. It begins with an examination of business needs, which includes defining the problem, determining the relevance of data, and assessing the business impact. Moving through the ML workflow lifecycle, from data collection to deployment and beyond, requires managing data, engineering features, training models, and setting up feedback loops for ongoing refinement.

Exploring the three stages of machine learning software (Data Engineering Pipelines, ML Pipelines and Workflows, and Model Serving Patterns with Deployment Strategies) offers a structured method. This comprehensive approach, covering everything from managing data to deploying models, enables machine learning engineers to create scalable and effective ML applications. Working closely with data scientists, developers, and business stakeholders is crucial to the successful execution of ML projects.

Final Thoughts on the Matter: A Comprehensive Analysis:

In conclusion, MLOps, short for Machine Learning Operations, is an approach that integrates machine learning into the DevOps structure, enhancing efficiency and collaboration throughout the ML lifecycle. Through automation, monitoring, and teamwork between data scientists and operations teams, MLOps speeds up model deployment, improves performance, and supports scalability. The combination of the three stages of ML-driven software development with MLOps practices such as testing, deployment, management, and monitoring provides a roadmap for companies navigating machine learning. This strategic adoption not only streamlines processes but also promotes responsible AI practices and ethical considerations, bolstering the success of data-driven endeavors.

Keywords: Machine Learning Engineer | active learning machine learning | pattern recognition and machine learning | masters in AI and machine learning | MLOps | Machine Learning Operations

FAQs (Frequently Asked Questions)

Is machine learning a good career?

Machine learning is a hot career. It is a highly sought-after skill in today’s tech industry, and professionals with expertise in this field are in high demand.

What is required to become a machine learning engineer?

To become a machine learning engineer, you’ll need at least a bachelor’s degree, certifications in machine learning, and a blend of technical skills and practical experience.

Is ML engineer a stressful job?

Being a machine learning engineer can be stressful, like any demanding job. Machine learning engineers often face complex challenges.

Is machine learning engineer the highest-paid job?

An entry-level Machine Learning Engineer with less than three years of experience earns an average salary of ₹7.6 Lakhs per year. The highest salary that a Machine Learning Engineer can earn is ₹22.0 Lakhs per year.