Ensemble learning is a powerful machine learning technique that combines multiple models to improve predictive performance. By leveraging the diversity of individual models, ensemble methods can enhance accuracy and robustness. Through techniques like bagging, boosting, and stacking, ensemble learning harnesses the collective intelligence of diverse models to make more accurate predictions. This approach finds wide application across various domains due to its ability to reduce overfitting and improve generalization. Understanding ensemble learning is essential for maximizing predictive accuracy and building robust machine learning models.

Ensemble Learning: A Machine Learning Paradigm

Ensemble learning represents a fundamental paradigm in machine learning where multiple models are combined to enhance predictive accuracy. By leveraging the strengths of diverse models, ensemble methods can outperform individual algorithms. Techniques like bagging, boosting, and stacking are commonly used in ensemble learning to create robust and accurate predictions. This approach is widely adopted in various applications because it can improve generalization and mitigate overfitting. Understanding ensemble learning is crucial for developing high-performing machine learning systems.

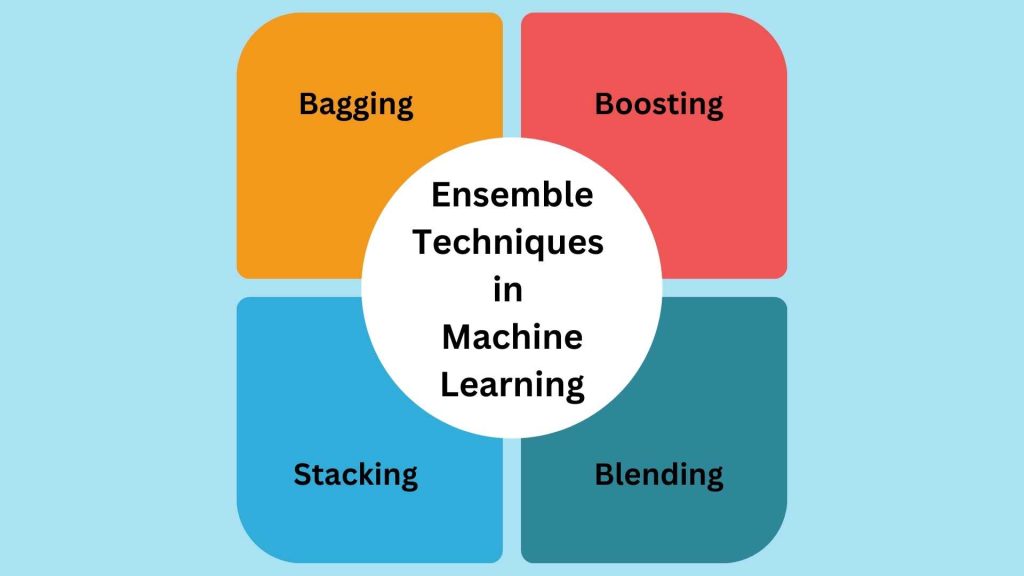

Ensemble Techniques in Machine Learning

Ensemble learning is a powerful technique in machine learning that combines multiple models to improve predictive performance. By leveraging the strengths of diverse models, ensemble methods can often outperform any single model. Here are some of the most commonly used ensemble techniques:

Bagging (Bootstrap Aggregating)

- Bagging involves creating multiple models from a single base model by training each model on a different subset of the training data.

- The subsets are created using bootstrap sampling, where samples are drawn from the original dataset with replacement.

- Each base model is trained independently, and their predictions are combined using majority voting for classification or averaging for regression.

- Random Forest is a popular example of a bagging algorithm that uses decision trees as base models.

Boosting

- Boosting is an iterative process where weak learners (base models) are trained sequentially, with each subsequent model focusing on the mistakes made by the previous models.

- The most well-known boosting algorithm is AdaBoost (Adaptive Boosting), which adjusts the weights of the training samples based on the performance of the previous models.

- Gradient Boosting is another popular boosting technique that uses gradient descent to minimize the loss function and improve the ensemble’s performance.

Stacking (Stacked Generalization)

- Stacking involves training multiple base models on the same dataset and then using a meta-model to combine their predictions.

- The base models are trained independently, and their outputs are used as features for the meta-model.

- The meta-model is trained to learn the optimal way to combine the predictions of the base models.

- Stacking can handle heterogeneous base models, allowing for different types of machine-learning algorithms.

Blending

- Blending is similar to stacking, but it uses a simpler approach to combine the predictions of the base models.

- Instead of training a meta-model, blending uses a weighted average of the base model predictions.

- The weights are determined based on the performance of each base model on a validation set or using a grid search technique.

These ensemble techniques can significantly improve the accuracy and robustness of machine learning models by leveraging the strengths of multiple models. However, it’s important to note that ensemble methods may come at the cost of interpretability, as the final model becomes more complex.

Algorithms for Ensemble Learning

Random Forest

Random Forest is a versatile ensemble learning method that operates by constructing a multitude of decision trees during training and outputting the mode of the classes as the prediction. Here are key points about Random Forest:

- Random Forest involves creating multiple decision trees by selecting random subsets of features and data points to build each tree.

- Each tree in the forest is trained independently, and the final prediction is made by aggregating the predictions of all trees through voting or averaging.

- This algorithm is known for its robustness against overfitting and its ability to handle high-dimensional data effectively.

- Random Forest is widely used in various applications due to its simplicity, scalability, and high accuracy in both classification and regression tasks.

XGBoost

XGBoost, short for Extreme Gradient Boosting, is a powerful boosting algorithm that has gained popularity for its efficiency and performance. Here are key points about XGBoost:

- XGBoost is an optimized implementation of gradient boosting that focuses on computational speed and model performance.

- It sequentially builds a series of weak learners to correct the errors of the previous models, resulting in a strong ensemble model.

- This algorithm uses gradient descent optimization to minimize the loss function and improve the predictive accuracy of the ensemble.

- XGBoost is known for its scalability, handling large datasets efficiently, and its ability to capture complex patterns in the data.

These algorithms, Random Forest and XGBoost, are among the most popular and effective ensemble learning techniques used in machine learning for their robustness, accuracy, and versatility across various domains.

Deciphering the Power of Ensemble Algorithms

Ensemble learning algorithms have gained immense popularity in recent years due to their ability to improve the performance of machine learning models significantly. By combining multiple base models, ensemble methods can harness the strengths of diverse algorithms and mitigate their weaknesses. Here’s an in-depth look at the key factors that contribute to the power of ensemble algorithms:

Diversity and Error Reduction

- Ensemble methods work by combining the predictions of multiple base models, each of which may capture different patterns or aspects of the data.

- By leveraging the diversity of base models, ensembles can reduce the overall error of the system1.

- Bagging techniques, such as Random Forest, create diverse models by training each base model on a random subset of the training data.

- Boosting algorithms, like AdaBoost and Gradient Boosting, sequentially train base models to focus on the mistakes made by previous models, gradually improving the ensemble’s performance.

Robustness and Generalization

- Ensemble methods are less prone to overfitting and can generalize better to unseen data compared to individual models.

- By combining the predictions of multiple models, ensembles smooth out the noise and idiosyncrasies present in individual models.

- The diversity among base models ensures that the ensemble can adapt to various patterns in the data and perform well on a wide range of tasks.

Handling Uncertainty and Complexity

- Ensemble methods provide a measure of confidence in their predictions by considering the level of agreement or disagreement among the constituent models.

- This is particularly useful in situations where understanding the uncertainty of predictions is critical, such as in medical diagnosis or financial risk assessment.

- Ensemble algorithms can handle complex and diverse datasets by leveraging the collective intelligence of multiple models.

- They are adaptable to different types of data and problem domains, making them versatile tools for a wide range of applications.

Scalability and Parallelization

- Many ensemble methods, such as Random Forest and Gradient Boosting, can parallelize the training of base models.

- This scalability allows ensemble algorithms to handle large datasets efficiently and train models in a reasonable amount of time.

- The parallelization of base model training is a key factor in the computational efficiency of ensemble methods, making them suitable for big data applications.

The power of ensemble algorithms lies in their ability to combine the strengths of multiple models, reduce errors, improve robustness, handle complexity, and scale to large datasets. By leveraging the diversity of base models and employing techniques like bagging and boosting, ensemble methods have become indispensable tools in the field of machine learning.

How to Ensemble Two Models?

Ensembling two models involves combining the predictions of each model to create a more robust and accurate prediction. This approach is particularly useful when individual models have different strengths and weaknesses, and combining them can lead to a better overall performance. Here are the steps to ensemble two models:

Choosing the Right Ensemble Method

- Averaging: This method involves taking the average of the predictions from both models. It is commonly used for regression problems where the goal is to predict a continuous value.

- Voting: This method involves taking the class with the highest vote count from both models. It is commonly used for classification problems where the goal is to predict a categorical outcome.

- Weighted Averaging: This method involves assigning weights to each model based on their performance and then taking a weighted average of their predictions. This approach allows for more flexibility in the ensemble process.

Implementing the Ensemble

- Simple Averaging: For regression problems, the predictions from both models are averaged directly. For example, if model A predicts 0.4 and model B predicts 0.6, the ensemble prediction would be 0.5.

- Voting: For classification problems, the class with the highest vote count from both models is chosen. For example, if model A predicts class A with 0.7 probability and model B predicts class B with 0.3 probability, the ensemble prediction would be class A.

- Weighted Averaging: For both regression and classification problems, the predictions from both models are weighted based on their performance. For example, if model A has a higher accuracy than model B, it would be given a higher weight in the ensemble.

Evaluating the Ensemble

- Performance Metrics: The performance of the ensemble model is evaluated using metrics such as mean squared error (MSE) for regression problems and accuracy for classification problems.

- Comparison to Individual Models: The ensemble model is compared to the performance of the individual models to determine if the ensemble improves the overall accuracy.

Advantages and Disadvantages

- Advantages: Ensembling two models can improve the overall accuracy and robustness of the predictions. It can also help to reduce overfitting and improve the generalizability of the model.

- Disadvantages: Ensembling two models can increase the complexity of the model and make it more difficult to interpret. It can also lead to overfitting if the models are highly correlated.

Real-World Applications

- Financial Forecasting: Ensembling two models can be used in financial forecasting to improve the accuracy of stock market predictions.

- Medical Diagnosis: Ensembling two models can be used in medical diagnosis to improve the accuracy of disease diagnosis.

- Image Classification: Ensembling two models can be used in image classification to improve the accuracy of image recognition.

By following these steps and considering the advantages and disadvantages of ensembling two models, you can effectively combine the predictions of two models to create a more robust and accurate prediction.

Evaluation of Ensemble Predictions

Evaluating the performance of ensemble predictions is crucial for assessing the effectiveness of the ensemble learning approach and comparing it to individual models. There are several metrics and techniques used to evaluate ensemble predictions, each with its advantages and considerations. Here are some key aspects of evaluating ensemble predictions:

Performance Metrics

- Classification Metrics: For classification tasks, common metrics include accuracy, precision, recall, F1-score, and area under the ROC curve (AUC-ROC).

- Regression Metrics: For regression tasks, popular metrics are mean squared error (MSE), root mean squared error (RMSE), mean absolute error (MAE), and coefficient of determination (R-squared).

- Probabilistic Metrics: When dealing with probabilistic outputs, metrics like log-loss, Brier score, and calibration curves can assess the quality of probability estimates.

Comparison to Individual Models

- Comparing ensemble performance to the best individual model: This approach evaluates whether the ensemble outperforms the single best-performing model in the ensemble.

- Comparing ensemble performance to the average individual model: This method compares the ensemble’s performance to the average performance of all individual models in the ensemble.

- Statistical significance tests: Techniques like paired t-tests or Wilcoxon signed-rank tests can determine if the performance difference between the ensemble and individual models is statistically significant.

Cross-Validation and Holdout Sets

- K-fold cross-validation: This technique splits the data into K folds, trains the ensemble on K-1 folds, and evaluates it on the remaining fold. The process is repeated K times, and the average performance is reported.

- Nested cross-validation: This approach uses an inner loop for model selection and hyperparameter tuning, and an outer loop for evaluating the final ensemble.

- Holdout sets: A portion of the data is set aside as a test set and is used to evaluate the final ensemble after training and tuning the remaining data.

Ensemble Diversity and Stability

- Measuring diversity: Metrics like pairwise disagreement, entropy, and Kohavi-Wolpert variance can quantify the diversity among base models in the ensemble.

- Assessing stability: Evaluating the consistency of ensemble predictions across different training runs or data splits can provide insights into the stability of the ensemble.

Practical Considerations

- Computational efficiency: Evaluating ensemble models can be computationally intensive, especially when using cross-validation or large datasets.

- Interpretability: Ensemble models can be less interpretable than individual models, making it challenging to understand the reasoning behind predictions.

- Domain-specific considerations: Certain applications may require specific evaluation metrics or techniques based on the problem domain and stakeholder requirements.

By considering these aspects and using appropriate evaluation metrics and techniques, researchers and practitioners can effectively assess the performance of ensemble predictions and make informed decisions about the suitability of ensemble learning for their specific tasks and datasets.

Ensemble Size and Impact on Prediction Accuracy

The size of an ensemble, referring to the number of component classifiers within it, plays a crucial role in determining the prediction accuracy of the ensemble. Understanding how ensemble size impacts prediction accuracy is essential for optimizing the performance of ensemble learning models. Here are key insights into the relationship between ensemble size and prediction accuracy:

Importance of Ensemble Size

- Impact on Accuracy: The number of component classifiers in an ensemble directly influences the accuracy of predictions. While a larger ensemble size can potentially improve accuracy by capturing more diverse patterns in the data, there is an optimal number of classifiers beyond which the accuracy may start to deteriorate.

- Computational Resources: Larger ensemble sizes may require additional computational resources, leading to increased training times and model complexity. It is crucial to strike a balance between ensemble size and computational efficiency to ensure practical implementation.

- Statistical Considerations: Determining the ideal ensemble size involves statistical tests to find the optimal number of components that maximize prediction accuracy. The “law of diminishing returns in ensemble construction” suggests that having too few or too many classifiers can negatively impact accuracy.

Strategies for Determining Ensemble Size

- Statistical Tests: Traditional statistical tests have been used to determine the appropriate number of component classifiers in an ensemble. These tests help identify the optimal balance between model complexity and prediction accuracy.

- Theoretical Framework: Recent advancements propose a theoretical framework that suggests an ideal number of component classifiers for an ensemble. This framework indicates that using the same number of independent classifiers as class labels can lead to the highest accuracy, highlighting the importance of ensemble size in optimizing performance.

Practical Considerations

- Online Ensemble Classifiers: For online ensemble classifiers dealing with big data streams, determining the ensemble size becomes even more critical. A priori determination of ensemble size is essential to ensure efficient and accurate predictions in real-time scenarios.

- Ensuring Diversity: Ensemble methods thrive on diversity among base models. While increasing ensemble size can enhance diversity, it is essential to balance diversity with model interpretability and computational efficiency.

- Optimizing Performance: Finding the right ensemble size involves a trade-off between model complexity, computational resources, and prediction accuracy. Experimentation and validation are key to identifying the optimal ensemble size for a specific task or dataset.

Understanding the impact of ensemble size on prediction accuracy is fundamental in designing effective ensemble learning models that strike a balance between model complexity, computational efficiency, and predictive performance. By carefully considering ensemble size and its implications, practitioners can harness the full potential of ensemble methods in improving predictive accuracy across various machine learning tasks.

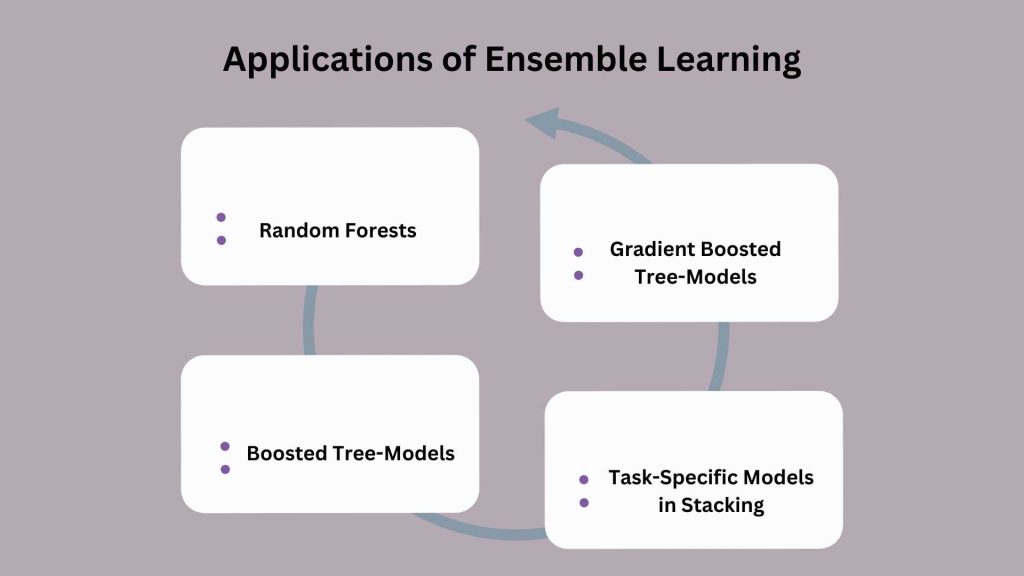

Applications of Ensemble Learning

Ensemble learning techniques have found widespread applications across various domains due to their ability to improve predictive accuracy and robustness. Here are some notable applications of ensemble learning:

Random Forests

Random Forest is a popular ensemble method that combines multiple decision trees to enhance performance. It has been successfully applied in numerous domains:

- Financial Forecasting: Random Forest has been used for stock price prediction, credit risk assessment, and fraud detection in financial applications.

- Bioinformatics: In bioinformatics, Random Forest has been employed for tasks like protein structure prediction, gene expression analysis, and disease classification.

- Image Recognition: Random Forest has shown promising results in image recognition tasks, such as object detection, image segmentation, and facial recognition.

Boosted Tree-Models

Boosting techniques, such as AdaBoost and Gradient Boosting, have been widely used to create powerful ensemble models:

- Sentiment Analysis: Boosted tree-models have been applied to sentiment analysis tasks, where they combine multiple weak learners to accurately classify text as positive, negative, or neutral.

- Recommendation Systems: In recommendation systems, boosted tree-models have been used to combine various features, such as user preferences, item attributes, and social interactions, to provide personalized recommendations.

- Anomaly Detection: Boosted tree-models have been employed for anomaly detection in various domains, including network intrusion detection, fraud prevention, and industrial process monitoring.

Gradient Boosted Tree-Models

Gradient Boosting is a powerful ensemble technique that has been successfully applied in numerous real-world scenarios:

- Web Search Ranking: Gradient Boosted Tree-Models have been used by major search engines to rank web pages based on relevance, user preferences, and other factors.

- Online Advertising: In online advertising, Gradient Boosting has been used to predict click-through rates, optimize ad placement, and improve targeting.

- Churn Prediction: Gradient Boosted Tree-Models have been applied to predict customer churn in subscription-based services, helping companies retain valuable customers.

Task-Specific Models in Stacking

Stacking, an ensemble technique that combines multiple base models using a meta-model, has been used to create task-specific ensemble models:

- Kaggle Competitions: In Kaggle competitions, participants often employ stacking to combine various models, such as neural networks, decision trees, and support vector machines, to create powerful ensembles that outperform individual models.

- Biomedical Image Analysis: In biomedical image analysis, stacking has been used to combine task-specific models, such as those for tumor segmentation, cell detection, and tissue classification, to improve overall performance.

- Natural Language Processing: In NLP tasks like machine translation and text summarization, stacking has been used to combine models trained on different architectures, such as recurrent neural networks and transformers, to achieve state-of-the-art results.

These applications demonstrate the versatility and effectiveness of ensemble learning techniques in solving complex problems across various domains. By leveraging the strengths of multiple models, ensemble methods can significantly improve predictive accuracy and robustness compared to individual models.

Multiple Classifier Systems and Hybridization

Multiple Classifier Systems (MCS) involve combining multiple classifiers to improve the overall performance compared to individual classifiers. In MCS, combining different classifiers or techniques to leverage their strengths and mitigate weaknesses is a key concept in hybridization. Here’s an in-depth look at MCS and hybridization:

Motivation for MCS

- Limitations of single classifiers: Individual classifiers may have inherent limitations in terms of accuracy, robustness, and generalization.

- Diversity and complementarity: Combining diverse classifiers can lead to improved performance by leveraging their complementary strengths.

- Handling complex problems: MCS can tackle complex problems that may be difficult for single classifiers to solve effectively.

Types of Hybridization in MCS

- Homogeneous MCS: Combining classifiers of the same type, such as multiple neural networks or decision trees.

- Heterogeneous MCS: Combining classifiers of different types, such as neural networks, decision trees, and support vector machines.

- High-level hybridization: Combining classifiers at a high level, such as using the outputs of individual classifiers as inputs to a meta-classifier.

- Low-level hybridization: Combining classifiers at a low level, such as modifying the base classifiers or their training process.

Techniques for Hybridization

- Ensemble methods: Techniques like bagging, boosting, and stacking that combine multiple classifiers to improve performance.

- Fusion methods: Combining the outputs of individual classifiers using techniques such as majority voting, weighted averaging, or fuzzy integrals.

- Hybrid algorithms: Combining different algorithms or techniques, such as hybridizing evolutionary algorithms with particle swarm optimization for class imbalance problems.

Applications of Hybrid MCS

- Bioinformatics: Combining classifiers for tasks like protein structure prediction, gene expression analysis, and disease classification.

- Image recognition: Hybridizing classifiers for object detection, image segmentation, and facial recognition.

- Text classification: Combining classifiers for sentiment analysis, spam detection, and information retrieval.

- Medical diagnosis: Hybridizing classifiers for disease prediction and adverse drug reaction detection.

Challenges and Future Directions

- Computational complexity: Combining multiple classifiers can increase computational complexity, especially for real-time applications.

- Interpretability: Hybrid MCS can be less interpretable compared to individual classifiers, making it challenging to understand the reasoning behind predictions.

- Ensemble diversity: Ensuring diversity among base classifiers is crucial for the success of hybrid MCS, but it can be difficult to achieve.

Multiple Classifier Systems and hybridization have shown great potential in improving the performance of machine learning models across various domains. By combining the strengths of different classifiers and techniques, hybrid MCS can tackle complex problems and achieve state-of-the-art results. To address challenges related to computational complexity, interpretability, and ensemble diversity, further research is necessary.

Efficiency and Accuracy Trade-offs in Ensemble Learning

Ensemble learning is a powerful technique that combines multiple machine learning models to improve the accuracy and reliability of predictions. However, there are trade-offs between the efficiency and accuracy of ensemble methods that need to be considered.

Accuracy Improvements with Ensemble Learning

Ensemble methods can significantly improve the accuracy of machine learning models compared to using a single model alone. Some key benefits include:

- Combining models with different strengths and weaknesses can lead to better overall performance

- Ensemble methods are often the winning approach in machine learning competitions

- Ensemble models can achieve high precision and recall, especially when combining models trained on different feature sets

Efficiency Challenges with Ensemble Learning

While ensemble methods can boost accuracy, they also come with efficiency challenges:

- Training multiple models is computationally expensive and time-consuming

- Combining predictions from many models can be slow, especially for real-time applications

- Ensemble models are often more complex and harder to interpret than single models

Factors Affecting Efficiency and Accuracy Trade-offs

Several factors influence the trade-offs between efficiency and accuracy in ensemble learning:

- Number of base models: Using more models can improve accuracy but decreases efficiency

- Diversity of base models: Combining models with different architectures and training data can boost accuracy but reduce efficiency

- Complexity of base models: Using more complex models like deep neural networks as base learners improves accuracy but reduces efficiency

- Ensemble method: Different ensemble techniques have different efficiency and accuracy characteristics

Techniques to Improve Efficiency

To mitigate the efficiency challenges of ensemble learning, several techniques can be used:

- Filtering out low-performing base models: Removing models with significantly lower accuracy than others can improve efficiency without much accuracy loss

- Using simpler base models: Employing less complex models like decision trees or logistic regression as base learners reduces training and inference time

- Parallelizing model training: Training base models in parallel on multiple CPUs or GPUs can speed up the overall training process

- Optimizing ensemble method: Choosing an efficient ensemble technique like bagging or voting can improve inference speed compared to more complex methods like stacking

Choosing the Right Ensemble Approach

The optimal ensemble approach depends on the specific problem, data, and requirements. Some general guidelines:

- For high-stakes applications like healthcare: Prioritize accuracy over efficiency and use a diverse set of complex base models

- For real-time applications: Prioritize efficiency, use simpler base models, and optimize the ensemble method

- For large datasets: Parallelizing training and filtering low-performing models can improve efficiency

- For interpretability: Use simpler base models and ensemble techniques that are easier to interpret

Ensemble learning is a powerful tool for improving machine learning accuracy, but it comes with efficiency challenges. By understanding the trade-offs and using techniques to optimize efficiency, practitioners can choose the right ensemble approach for their specific use case. As with any machine learning technique, it’s important to carefully evaluate the accuracy and efficiency of ensemble models on held-out test data.

Libraries for Ensemble Learning

Ensemble learning is a powerful technique that combines multiple models to improve the overall performance of a machine learning system. Several libraries and frameworks provide implementations of ensemble learning algorithms. Here are some popular open-source libraries for ensemble learning:

Scikit-learn (Python)

Scikit-learn is a widely used machine learning library in Python that provides implementations of various ensemble learning algorithms13. Some of the ensemble methods available in Scikit-learn include:

- Bagging Classifiers (BaggingClassifier)

- Random Forest Classifiers (RandomForestClassifier)

- Extra Trees Classifiers (ExtraTreesClassifier)

- AdaBoost Classifiers (AdaBoostClassifier)

- Gradient Boosting Classifiers (GradientBoostingClassifier)

Scikit-learn also provides tools for evaluating and comparing ensemble models, such as cross-validation and metrics for classification and regression tasks.

XGBoost (Python, R, C++)

It (Extreme Gradient Boosting) is a highly efficient and scalable implementation of gradient boosting, a popular ensemble learning algorithm124. XGBoost supports several programming languages, including Python, R, and C++. It is known for its speed, performance, and ability to handle large-scale data and high-dimensional features.

Key features of XGBoost include:

- Parallel tree boosting

- Handling sparse data

- Built-in cross-validation

- Regularization to prevent overfitting

XGBoost has been widely used in machine-learning competitions and has become a go-to library for gradient-boosting tasks.

LightGBM (Python, R, C++)

LightGBM (Light Gradient Boosting Machine) is another efficient implementation of gradient boosting, developed by Microsoft24. It uses tree-based learning algorithms and is designed to be highly efficient, with low memory usage and fast training speed.

LightGBM supports several features that make it suitable for large-scale data and high-dimensional problems, such as:

- Histogram-based algorithm for faster computation

- Efficient handling of categorical features

- Parallel and GPU learning

- Automatic feature selection

LightGBM is available in Python, R, and C++, and has been used in various applications, including ranking, classification, and regression tasks.

H2O (Java, R, Python)

H2O is an open-source, distributed, in-memory machine learning platform that provides implementations of various ensemble learning algorithms. It supports several programming languages, including Java, R, and Python.

H2O offers a wide range of ensemble methods, such as:

- Distributed Random Forest (DRF)

- Gradient Boosting Machine (GBM)

- Extremely Randomized Trees (XRT)

- Stacked Ensembles

H2O is designed to be scalable and can handle large datasets and high-dimensional features. It also provides tools for model evaluation, feature engineering, and deployment.

Catboost (Python, R)

Catboost (Categorical Feature Boosting) is an open-source gradient boosting framework developed by Yandex24. It is designed to handle categorical features automatically and provides high performance on a wide range of tasks.

Catboost offers several ensemble learning algorithms, including

- Gradient Boosting

- Ordered Target Encoding

- Overfitting prevention techniques

Catboost supports Python and R and has been used in various applications, such as ranking, classification, and regression tasks.

Voting Classifier (Scikit-learn)

The Voting Classifier in Scikit-learn allows you to combine multiple estimators (e.g., classifiers or regressors) into a single estimator3. It supports two voting methods:

- Hard voting: Each classifier votes for a class, and the class with the most votes is the prediction.

- Soft voting: Each classifier provides a probability or confidence score for each class, and the class with the highest average score is the prediction.

The Voting Classifier can be used to combine different types of models, such as logistic regression, decision trees, and support vector machines, to improve the overall performance of the ensemble.

Choosing the Right Library

When selecting an ensemble learning library, consider factors such as the size and complexity of your dataset, the specific algorithms you need, and the programming language you prefer. Here are some general guidelines:

- For small to medium-sized datasets and a wide range of ensemble algorithms, Scikit-learn is a great choice due to its simplicity and wide adoption.

- For large-scale datasets and high-performance gradient boosting, XGBoost and LightGBM are excellent options, with XGBoost being more widely used and LightGBM being faster and more memory-efficient.

- If you need a comprehensive platform for distributed machine learning, H2O is a good choice, as it supports various ensemble methods and can handle large datasets.

- If you have categorical features and want automatic handling of them, Catboost is a suitable library.

Remember that the performance of ensemble models can vary depending on the specific problem and dataset, so it’s always a good idea to experiment with different libraries and algorithms to find the best fit for your use case.

Exploring Different Types of Ensemble Classifiers

Ensemble learning is a powerful machine learning approach that involves combining multiple models to enhance predictive performance. Within ensemble learning, various types of ensemble classifiers exist, each with its unique characteristics and applications. Let’s delve into the world of ensemble classifiers to understand the diversity and utility they offer in machine learning.

Types of Ensemble Classifiers

- Bagging

- Definition: Bagging, or Bootstrap Aggregating, involves training models on different subsets of the training data with replacement.

- Technique: It creates multiple bootstrap resamples and runs an algorithm on each resample to make predictions.

- Advantages: Reduces variance, minimizes overfitting, and provides an unbiased estimate of out-of-bag error.

- Disadvantages: Computationally expensive due to multiple models and can be challenging to interpret final results.

- Boosting

- Definition: Boosting builds models sequentially, where each subsequent model corrects errors made by the previous ones.

- Technique: Adaptive Boosting (AdaBoost) is a popular boosting algorithm that assigns higher weights to misclassified instances.

- Applications: Effective for binary classification tasks and improves model accuracy through iterative learning.

- Stacking

- Definition: Stacking involves training multiple diverse models independently and combining their predictions using a meta-model.

- Process: Models’ predictions serve as features for the meta-model, which learns how to best combine these predictions.

- Advantages: Produces robust predictors and can enhance model performance by leveraging diverse base learners.

- Blending

- Definition: Blending is similar to stacking but extends to include validation and testing data in the model training process.

- Approach: The final model learns from both predictions and additional data, leading to a more comprehensive understanding of the problem.

Ensemble Methods in Practice

- Random Forest: A widely used ensemble model that combines multiple decision trees trained using bagging.

- XGBoost: Extreme Gradient Boosting is scalable, efficient, and suitable for large datasets, implementing the gradient boosting framework.

- AdaBoost: Adaptive Boosting is an early successful boosting algorithm for binary classification, assigning weights to instances for iterative learning.

Evaluating Ensemble Classifiers

- Diversity: Ensembles benefit from diverse models, promoting better results through varied approaches.

- Ensemble Size: Determining the optimal number of component classifiers is crucial for accuracy and efficiency.

- Aggregating Predictions: Techniques like Max Voting and Averaging are used to combine predictions from multiple models effectively.

Ensemble learning offers a versatile and effective approach to machine learning by harnessing the collective power of diverse models. Understanding the nuances of different ensemble classifiers empowers data scientists to build robust and accurate predictive models across various domains. By leveraging the strengths of bagging, boosting, stacking, and blending, practitioners can enhance model performance and achieve superior results in classification and regression tasks.

Advanced Ensemble Learning Techniques

- Multi-level StackingExtends stacking by applying it on multiple levels to improve model performance.

- Bagging (Bootstrap Aggregating)Reduces variance in final predictions by training models on random sub-samples of the dataset.

- BoostingSelf-learning technique that assigns weights to data points based on misclassifications.

Pros and Cons of Ensemble Learning

- Pros: Improved predictive accuracy.

- Cons: Increased complexity.

Ensemble learning techniques offer a powerful approach to enhancing machine learning models’ performance, making them essential in various real-world applications and competitions. By carefully selecting and combining different models, ensemble learning can significantly boost predictive accuracy and generalization performance.

Uses of Ensemble in Machine Learning

Ensemble learning is a powerful technique in machine learning that combines multiple models to improve predictive performance and robustness. Here are some key uses of ensemble learning:

Improved Accuracy

One of the primary uses of ensemble learning is to improve the accuracy of predictions compared to individual models. By combining the strengths of multiple models, ensemble methods can capture a more comprehensive understanding of the data, leading to better overall performance.

Reduced Overfitting

Ensemble learning helps reduce overfitting by introducing randomness and diversity into the modeling process. Techniques like bagging use random subsets of the data to train each model while boosting focuses on difficult-to-classify instances, improving generalization performance.

Enhanced Robustness

Ensemble methods enhance the robustness of predictions by considering multiple models’ opinions and making consensus-based decisions. This mitigates the impact of outliers or errors in a single model, ensuring more reliable results.

Handling Complex Datasets

Ensemble learning is particularly useful when dealing with noisy, high-dimensional, or complex datasets where individual models may struggle34. By combining diverse models, ensembles can capture intricate patterns and make more accurate predictions in such scenarios.

Conclusion

In conclusion, ensemble learning is a powerful technique that combines multiple models to improve predictive performance and robustness. It offers significant benefits in terms of improved accuracy, reduced overfitting, enhanced robustness, and versatility. Ensemble learning is particularly useful when dealing with complex datasets and has a wide range of real-world applications, including healthcare, agriculture, insurance, and remote sensing. Its ability to capture intricate patterns and make consensus-based decisions makes it a valuable tool for tackling challenging machine-learning problems. As the field of machine learning continues to evolve, ensemble learning is likely to remain a key technique for achieving state-of-the-art results in various domains.

Frequently Asked Questions

1) What are the three types of ensemble learning?

Ensemble learning combines multiple models to enhance predictive performance, with common types including bagging, boosting, and stacking.

2) What is the technique for ensemble learning?

The technique for ensemble learning involves combining predictions from diverse models to achieve more accurate and robust results.

3) What is an example of ensemble in machine learning?

An example of an ensemble in machine learning is the random forest algorithm, which uses decision trees as base learners and combines their predictions.

4) How to ensemble two models?

To ensemble two models, you can combine their predictions using techniques like averaging or weighted averaging for more accurate results.

5) Which algorithm uses ensemble learning?

AdaBoost is a popular algorithm that utilizes ensemble learning by sequentially training models to correct the mistakes of previous ones, improving overall predictive accuracy.