Hyperparameter tuning is the art of finding the best settings for your machine-learning model. These settings, unlike model parameters learned from data, control the learning process itself. Think of it as fine-tuning the dials on a machine for optimal performance. By finding the right hyperparameters, you can significantly boost your model’s accuracy, generalization, and efficiency.

Definition and Importance of Hyperparameter Tuning

Hyperparameter tuning is the process of optimizing the settings or parameters of a machine learning model that are not directly learned from the training data. These parameters, known as hyperparameters, have a significant impact on the model’s performance, and finding the right combination of hyperparameters is crucial for achieving the best possible results.

Hyperparameters are the knobs and dials that you can adjust to fine-tune your machine-learning model. They are different from the model parameters, which are the internal weights and biases that the model learns during the training process. Hyperparameters are set before the training process begins and remain constant throughout the training.

Hyperparameter tuning is essential for several reasons:

- Improved Model Performance: By finding the optimal hyperparameter values, you can significantly improve the accuracy, precision, recall, and overall performance of your machine-learning model.

- Faster Convergence: Proper hyperparameter tuning can lead to faster convergence during the training process, reducing the time and computational resources required to train the model.

- Generalization Ability: Tuning hyperparameters can help your model generalize better to new, unseen data, reducing the risk of overfitting or underfitting.

- Reduced Bias and Variance: Hyperparameter tuning can help you strike the right balance between bias and variance, ensuring that your model is not too simple (high bias) or too complex (high variance).

Impact of Hyperparameters on Model Performance

Hyperparameters can have a significant impact on the performance of your machine-learning model. Some common examples of hyperparameters and their effects include:

- Learning Rate: The learning rate determines the step size taken during the optimization process. A high learning rate can lead to faster convergence but may cause the model to overshoot the optimal solution, while a low learning rate can result in slower convergence.

- Regularization Parameters: Regularization techniques, such as L1 (Lasso) or L2 (Ridge) regularization, help prevent overfitting by adding a penalty term to the loss function. The strength of the regularization is controlled by the regularization parameter.

- Number of Layers and Neurons: In neural networks, the number of layers and the number of neurons per layer can greatly affect the model’s capacity to learn complex patterns.

- Batch Size: The batch size determines the number of samples used in each iteration of the training process. A larger batch size can lead to more stable gradients, but a smaller batch size can help the model escape local minima.

- Number of Estimators: In ensemble methods, such as Random Forest or Gradient Boosting, the number of estimators (trees) can impact the model’s performance and complexity.

Proper hyperparameter tuning requires a systematic approach, such as grid search, random search, or more advanced techniques like Bayesian optimization or evolutionary algorithms. These methods help you explore the hyperparameter space efficiently and find the optimal combination of hyperparameters for your specific problem and dataset.

Hyperparameters for Specific Models

These hyperparameters are specific to the architecture or algorithm of the machine learning model being used. They control the complexity and capacity of the model and can have a significant impact on its performance. Examples include:

Decision Trees and Ensemble Methods:

- Maximum Depth: The maximum depth of the decision tree, which controls the complexity of the model.

- Minimum Samples Split: The minimum number of samples required to split a node in the decision tree.

- Minimum Samples Leaf: The minimum number of samples required to be at a leaf node.

- Number of Estimators: The number of trees in an ensemble method, such as Random Forest or Gradient Boosting.

Neural Networks:

- Number of Layers: The number of hidden layers in the neural network.

- Number of Neurons per Layer: The number of neurons in each hidden layer.

- Activation Functions: The non-linear activation functions used in the hidden layers.

- Dropout Rate: The probability of randomly dropping out neurons during training to prevent overfitting.

Support Vector Machines (SVMs):

- Kernel Type: The type of kernel function used, such as linear, polynomial, or radial basis function (RBF).

- Kernel Parameters: Parameters specific to the chosen kernel function, such as the gamma parameter for the RBF kernel.

- Regularization Parameter (C): The trade-off between the margin and the number of misclassified samples.

Gradient Boosting:

- Learning Rate: The step size taken during the boosting process.

- Maximum Depth: The maximum depth of the decision trees used in the boosting algorithm.

- Minimum Samples Split: The minimum number of samples required to split a node in the decision trees.

- Minimum Samples Leaf: The minimum number of samples required to be at a leaf node in the decision trees.

Proper selection and tuning of these hyperparameters is crucial for achieving optimal performance from your machine learning models. The process of finding the best combination of hyperparameters is known as hyperparameter tuning, which we will explore in the next section.

The Hyperparameter Tuning Process

Steps to Perform Hyperparameter Tuning

Hyperparameter tuning is a crucial step in optimizing the performance of machine learning models. Here are the key steps involved in the hyperparameter tuning process:

- Define the Hyperparameter Space: Identify the hyperparameters that need to be tuned for your specific model.

- Choose a Search Strategy: Select a hyperparameter optimization technique, such as grid search, random search, Bayesian optimization, or evolutionary algorithms.

- Set Evaluation Criteria: Define the metric or metrics that will be used to evaluate the performance of the model.

- Perform Hyperparameter Optimization: Run the chosen optimization algorithm to search for the best combination of hyperparameters.

- Select the Best Hyperparameters: Identify the set of hyperparameters that result in the best model performance according to the evaluation criteria.

- Fine-tune the Model: Refine the selected hyperparameters further if necessary to achieve even better performance.

Hyperparameter Tuning and the Training Process

Model Parameters vs. Hyperparameters

- Model Parameters: Model parameters are learned during the training process.

- Hyperparameters: Hyperparameters are set before the training process begins and remain constant throughout training.

Hyperparameter Tuning as a Meta-Optimization Task

Hyperparameter tuning can be viewed as a meta-optimization task, where the goal is to find the best configuration of hyperparameters that optimize the performance of the model. Here are some key points to consider:

- Search Space Exploration: Hyperparameter tuning involves exploring a high-dimensional search space to find the optimal set of hyperparameters.

- Trade-off between Exploration and Exploitation: The optimization algorithm must balance exploration (searching for new promising regions in the hyperparameter space) and exploitation (exploiting known good regions) to find the optimal hyperparameters.

- Computational Resources: Hyperparameter tuning can be computationally expensive, especially for complex models and large datasets.

- Generalization and Overfitting: Care must be taken to ensure that the hyperparameters selected during tuning generalize well to unseen data.

- Iterative Process: Hyperparameter tuning is often an iterative process that involves multiple rounds of optimization and evaluation.

By understanding the distinction between model parameters and hyperparameters, and viewing hyperparameter tuning as a meta-optimization task, you can effectively optimize the performance of your machine-learning models and achieve better results.

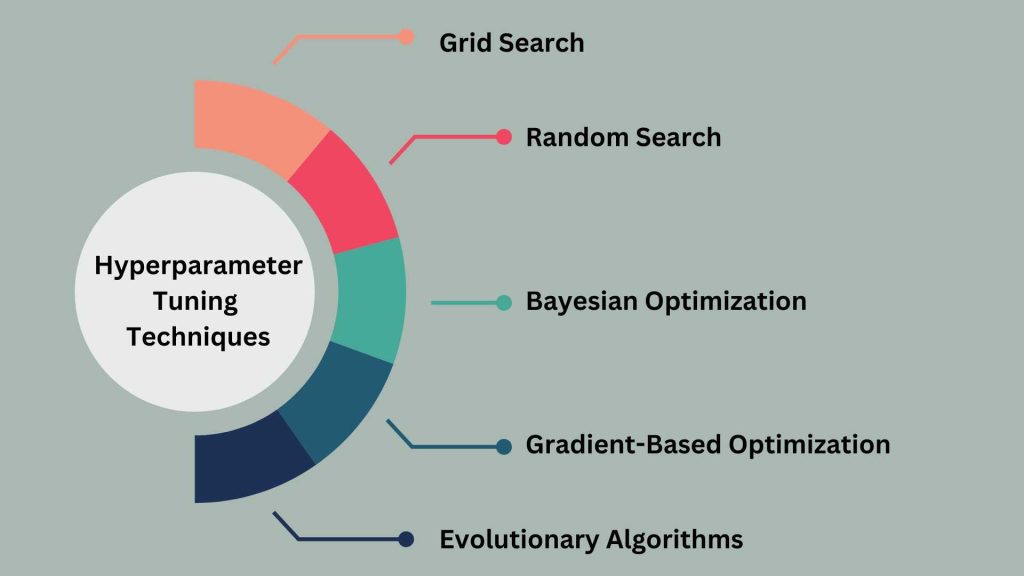

Hyperparameter Tuning Techniques

When it comes to optimizing the performance of machine learning models, there are several techniques available for tuning hyperparameters. Let’s explore some of the commonly used methods:

Grid Search

- Definition: Grid search is a simple and exhaustive hyperparameter tuning technique that involves defining a grid of hyperparameter values and evaluating the model performance for each combination.

- Process: Define a grid of hyperparameter values for each hyperparameter to be tuned.

- Pros: Easy to implement and understand.

- Cons: Computationally expensive for large hyperparameter spaces.

Random Search

- Definition: Random search is a hyperparameter tuning technique that randomly samples hyperparameter values from predefined distributions and evaluates the model performance for each random combination.

- Process: Define distributions for each hyperparameter.

- Pros: More efficient than grid search for high-dimensional or continuous hyperparameter spaces.

- Cons: This does not guarantee finding the best hyperparameters.

Bayesian Optimization

- Definition: Bayesian optimization is a sequential model-based optimization technique that uses probabilistic models to predict the performance of different hyperparameter configurations and guides the search towards promising regions.

- Process: Build a probabilistic model of the objective function based on evaluated hyperparameter configurations.

- Pros: Efficient for high-dimensional and complex hyperparameter spaces.

- Cons: Requires tuning of its hyperparameters.

Gradient-Based Optimization

- Definition: Gradient-based optimization methods use gradients of the objective function concerning hyperparameters to iteratively update the hyperparameter values and optimize the model performance.

- Process: Compute the gradients of the objective function concerning hyperparameters.

- Pros: Efficient for differentiable hyperparameters and objective functions.

- Cons: Limited to differentiable hyperparameters and objective functions.

Evolutionary Algorithms

- Definition: Evolutionary algorithms are population-based optimization techniques inspired by the process of natural selection and evolution. They maintain a population of candidate solutions and evolve them over generations to find optimal hyperparameters.

- Process: Initialize a population of candidate hyperparameter configurations.

- Pros: Can handle non-differentiable and complex hyperparameter spaces.

- Cons: Computationally expensive for large populations and generations.

Each of these hyperparameter tuning techniques has its strengths and weaknesses, and the choice of method depends on the specific characteristics of the problem, such as the dimensionality of the hyperparameter space, the computational resources available, and the desired level of optimization. Experimenting with different techniques and understanding their trade-offs can help you effectively tune hyperparameters and improve the performance of your machine-learning models.

Challenges in Hyperparameter Tuning

Hyperparameter tuning is a critical aspect of optimizing machine learning models, but it comes with its own set of challenges. Let’s explore some common challenges and considerations when tuning hyperparameters:

Dealing with High-Dimensional Hyperparameter Spaces

- Challenge: High-dimensional hyperparameter spaces can exponentially increase the search space, making it computationally expensive and challenging to explore.

- Considerations: Dimensionality Reduction

Handling Expensive Function Evaluations

- Challenge: Evaluating the performance of a model for each set of hyperparameters can be time-consuming and computationally expensive, especially for complex models or large datasets.

- Considerations: Parallelization

Incorporating Domain Knowledge

- Challenge: Domain knowledge can provide valuable insights into which hyperparameters are likely to be more influential or which ranges are more promising, but integrating this knowledge effectively can be challenging.

- Considerations: Feature Engineering

Developing Adaptive Hyperparameter Tuning Methods

- Challenge: Static hyperparameter tuning methods may not adapt well to changing data distributions, model complexities, or optimization landscapes.

- Considerations: Dynamic Search Spaces

Addressing these challenges in hyperparameter tuning requires a combination of thoughtful strategies, efficient algorithms, and domain expertise. By understanding the complexities involved and implementing appropriate solutions, you can enhance the effectiveness of hyperparameter tuning and improve the performance of your machine-learning models.

Hyperparameter Tuning and Model Generalization

When it comes to hyperparameter tuning in machine learning, ensuring model generalization is crucial for building robust and reliable models. Let’s delve into the relationship between hyperparameter tuning, overfitting, and the role of cross-validation in achieving model generalization:

Overfitting and the Role of Hyperparameters

- Overfitting: Overfitting occurs when a model learns the noise and specific patterns in the training data rather than the underlying relationships, leading to poor performance on unseen data.

- Role of Hyperparameters: Hyperparameters play a significant role in controlling the complexity of a model and its ability to generalize to new, unseen data.

- Strategies to Address Overfitting: Regularization: Adjusting regularization hyperparameters like L1 or L2 regularization can help prevent overfitting by penalizing overly complex models.

Hyperparameter Tuning and Cross-Validation

- Cross-Validation: Cross-validation is a technique used to assess the generalization performance of a model by splitting the data into multiple subsets for training and evaluation.

- Role of Cross-Validation in Hyperparameter Tuning: Cross-validation allows for a more robust evaluation of different hyperparameter configurations by averaging performance across multiple folds.

- Strategies for Cross-Validation in Hyperparameter Tuning: K-Fold Cross-Validation

By incorporating cross-validation into the hyperparameter tuning process, you can effectively assess the generalization performance of your models and mitigate the risk of overfitting. Hyperparameter tuning, when combined with proper validation techniques like cross-validation, can lead to more robust and reliable machine learning models that perform well on unseen data.

Hyperparameter Optimization

The problem of choosing optimal hyperparameters for a learning algorithm

| Definition | Process of selecting the best set of hyperparameters for a machine learning algorithm |

| Objective | To find a tuple of hyperparameters that minimizes a predefined loss function on given data |

| Method | Uses cross-validation to estimate generalization performance and determine the best hyperparameter values |

Hyperparameter Tuning Best Practices and Considerations

Difference between Parameter Tuning and Hyperparameter Tuning

- Parameters vs. Hyperparameters: Parameters are the learned coefficients during model training, while hyperparameters control the learning process itself.

- Purpose: Parameter tuning optimizes the model’s performance, while hyperparameter tuning fine-tunes the learning process.

- Impact: Hyperparameters influence how the model learns and generalizes, while parameters are adjusted by the optimization algorithm.

Which Hyperparameter to Tune First?

- Importance of Hyperparameters: Hyperparameters like learning rate are crucial for model performance.

- Order of Tuning: Start with essential hyperparameters like learning rate before moving to less critical ones.

- Model and Dataset Dependency: The order of tuning can vary based on the specific model and dataset characteristics.

- Rule of Thumb: Begin with hyperparameters that have the most significant impact on model performance.

Best Practices in Hyperparameter Tuning

- Cross-Validation: Evaluate model performance using cross-validation to ensure robustness.

- Surrogate Models: Use Gaussian processes, random forest regression, or TPE for efficient hyperparameter predictions.

- Bayesian Optimization: Implement Bayesian optimization for optimal hyperparameter combinations.

- Challenges: Address challenges like high-dimensional hyperparameter spaces and expensive function evaluations.

- Adaptive Methods: Develop adaptive tuning methods to adjust parameters during training.

- Applications: Apply hyperparameter tuning for model selection, regularization, feature preprocessing, and algorithmic parameter tuning.

Considerations in Hyperparameter Tuning

- Improved Performance: Hyperparameter tuning enhances model accuracy, generalizability, and efficiency.

- Resource Optimization: Optimize computational resources and time by finding the right hyperparameters.

- Risk Mitigation: Reduce the risk of overfitting and underfitting through effective hyperparameter tuning.

- Expertise Requirement: Hyperparameter tuning demands expertise and understanding of the model and data.

- Optimal Performance: While hyperparameter tuning boosts performance, there is no guarantee of achieving optimal results.

By following these best practices and considerations, data scientists and machine learning engineers can effectively optimize hyperparameters, leading to improved model performance, reduced overfitting, and enhanced generalizability.

Advanced Hyperparameter Optimization Methods

Bayesian Optimization

- Bayesian Inference: Bayesian optimization uses Bayesian inference to model the objective function and select the next hyperparameter combination based on the uncertainty of the model.

- Gaussian Processes: Gaussian processes are used to model the objective function, allowing for efficient exploration of the hyperparameter space.

- Acquisition Functions: Acquisition functions are used to select the next hyperparameter combination based on the uncertainty of the model and the potential improvement in the objective function.

- Efficient Exploration: Bayesian optimization efficiently explores the hyperparameter space by selecting the next combination based on the uncertainty of the model.

- Robustness: Bayesian optimization is robust to noisy objective functions and can handle large hyperparameter spaces.

Gradient-Based Optimization

- Gradient Descent: Gradient-based optimization uses gradient descent to optimize the hyperparameters.

- Gradient Descent Variants: Variants of gradient descent, such as Adam and RMSProp, are used to optimize the hyperparameters.

- Efficient Optimization: Gradient-based optimization is efficient for optimizing hyperparameters in large models.

- Robustness: Gradient-based optimization is robust to noisy objective functions.

Evolutionary Algorithms

- Evolutionary Principles: Evolutionary algorithms use evolutionary principles to optimize the hyperparameters.

- Population-Based Search: Evolutionary algorithms use a population-based search to explore the hyperparameter space.

- Selection and Mutation: Selection and mutation operators are used to evolve the hyperparameters.

- Efficient Exploration: Evolutionary algorithms efficiently explore the hyperparameter space by using a population-based search.

- Robustness: Evolutionary algorithms are robust to noisy objective functions and can handle large hyperparameter spaces.

Comparison of Methods

- Advantages and Disadvantages: Each method has its advantages and disadvantages, and the choice of method depends on the specific problem and dataset.

- Efficiency and Robustness: Bayesian optimization is efficient and robust, while gradient-based optimization is efficient but may not be robust to noisy objective functions.

- Exploration and Exploitation: Evolutionary algorithms balance exploration and exploitation, making them suitable for complex hyperparameter spaces.

Implementation and Tools

- Python Libraries: Python libraries such as Optuna, Hyperopt, and Scikit-Optimize provide implementations of Bayesian optimization, gradient-based optimization, and evolutionary algorithms.

- Integration with Machine Learning Frameworks: These libraries can be integrated with popular machine learning frameworks such as TensorFlow and PyTorch.

- Ease of Use: The libraries provide an easy-to-use interface for implementing advanced hyperparameter optimization methods.

Applications and Case Studies

- Real-World Applications: Advanced hyperparameter optimization methods have been applied to real-world problems in computer vision, natural language processing, and recommender systems.

- Case Studies: Case studies demonstrate the effectiveness of advanced hyperparameter optimization methods in improving model performance and reducing the time and cost of hyperparameter tuning.

- Future Directions: Future directions include the development of more efficient and robust optimization methods and the integration of advanced hyperparameter optimization methods with other machine learning techniques.

By understanding and applying advanced hyperparameter optimization methods, data scientists and machine learning engineers can significantly improve the performance of their models and reduce the time and cost of hyperparameter tuning.

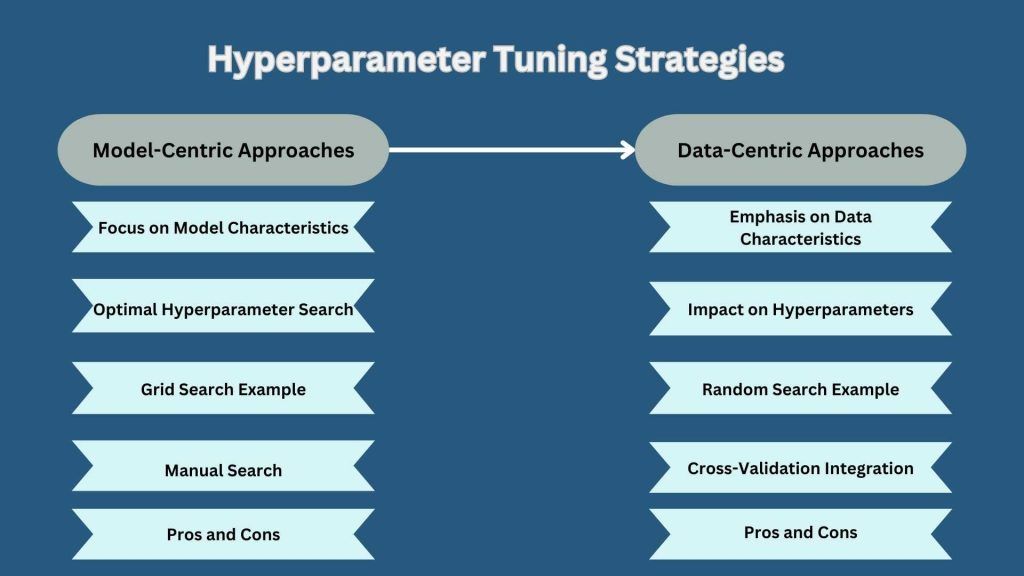

Hyperparameter Tuning Strategies

Model-Centric Approaches

- Focus on Model Characteristics: Model-centric approaches concentrate on the model’s intrinsic properties, such as its structure and algorithms used.

- Optimal Hyperparameter Search: These approaches involve searching for the best hyperparameter combination within a predefined set of values.

- Grid Search Example: Grid search is a model-centric approach where all possible hyperparameter combinations are evaluated exhaustively.

- Manual Search: Manual search is another model-centric method where hyperparameters are manually adjusted by the data scientist.

- Pros and Cons: Model-centric approaches offer fine control over hyperparameters but can be time-consuming and prone to human error.

Data-Centric Approaches

- Emphasis on Data Characteristics: Data-centric approaches focus on the dataset’s properties and characteristics.

- Impact on Hyperparameters: These approaches consider how data features influence the choice of hyperparameters.

- Random Search Example: Random search is a data-centric approach where hyperparameter combinations are randomly selected and evaluated.

- Cross-Validation Integration: Data-centric approaches often incorporate cross-validation to ensure robust hyperparameter selection.

- Pros and Cons: Data-centric approaches leverage dataset insights for hyperparameter tuning but may require more computational resources.

Comparison and Selection

- Choosing the Right Approach: The selection between model-centric and data-centric approaches depends on the specific model and dataset.

- Hybrid Strategies: Some scenarios may benefit from a combination of model-centric and data-centric techniques.

- Efficiency vs. Precision: Model-centric approaches offer precision in hyperparameter tuning, while data-centric approaches leverage dataset nuances for optimization.

- Iterative Process: Hyperparameter tuning is an iterative process that may involve multiple rounds of experimentation and evaluation.

- Performance Evaluation: Both approaches aim to enhance model performance, generalization, and efficiency through optimal hyperparameter selection.

Implementation and Best Practices

- Tool Integration: Utilize tools like Optuna, Hyperopt, or Scikit-Optimize for efficient hyperparameter optimization.

- Cross-Validation Usage: Incorporate cross-validation within hyperparameter tuning to ensure model robustness.

- Experiment Tracking: Maintain a systematic approach to track hyperparameter tuning experiments for reproducibility.

- Continuous Improvement: Hyperparameter tuning is an ongoing process that requires continuous refinement based on model performance.

- Expertise Development: Enhance expertise in hyperparameter tuning through practice, experimentation, and staying updated on advanced techniques.

By understanding and applying both model-centric and data-centric hyperparameter tuning strategies, data scientists can optimize machine learning models effectively, leading to improved performance, generalization, and efficiency in various applications.

Importance of Hyperparameter Tuning

Impact on Model Performance and Efficiency

- Optimal Performance: Hyperparameter tuning is critical to achieving optimal performance in machine learning models. A poorly tuned model can result in underfitting or overfitting, leading to inaccurate predictions and poor performance on new data.

- Efficiency: Hyperparameter tuning can significantly impact the efficiency of a model. The model can learn more effectively and generalize better to new data by finding the optimal set of hyperparameters, reducing the need for additional training or data collection.

- Model Complexity: Hyperparameter tuning allows for balancing model complexity and performance. Adjusting hyperparameters makes the model more or less complex, depending on the tradeoffs between model performance and computational resources.

Enhancing Model Generalization and Robustness

- Generalization: Hyperparameter tuning is essential for enhancing model generalization. By finding the optimal set of hyperparameters, the model can learn to generalize well to new, unseen data, reducing overfitting and improving performance on out-of-sample data.

- Robustness: Hyperparameter tuning can also enhance model robustness. By tuning hyperparameters, the model can be made more resistant to changes in the data distribution or to outliers, improving its overall performance and reliability.

- Domain Knowledge: Hyperparameter tuning requires domain knowledge and understanding of the problem and data. This knowledge is essential for defining the hyperparameter search space, evaluating different hyperparameters, and making informed decisions about the optimal set of hyperparameters.

In conclusion, hyperparameter tuning is a crucial step in the machine-learning process that can have a significant impact on model performance, efficiency, generalization, and robustness. By understanding the importance of hyperparameter tuning and the various techniques used to tune hyperparameters, data scientists and machine learning engineers can develop models that are optimized for their specific problem and dataset, leading to better performance and more accurate predictions.

Future Trends in Hyperparameter Tuning

Automated Hyperparameter Tuning Solutions

- There is a growing trend towards the development of automated hyperparameter tuning solutions.

- These solutions aim to reduce the manual effort required in hyperparameter tuning by automating the process.

- Techniques like Bayesian optimization, gradient-based optimization, and evolutionary algorithms are being increasingly used to automate hyperparameter tuning.

- The goal is to create self-tuning machine-learning models that can adjust their hyperparameters during the training process.

Integration of Machine Learning and Optimization Techniques

- Researchers are exploring ways to better integrate machine learning and optimization techniques for hyperparameter tuning1.

- This includes developing gradient-based optimization methods that can handle complex response surfaces and deal with high-dimensional hyperparameter spaces.

- Techniques like differentiable neural architecture search and hypernetworks are emerging as ways to optimize discrete hyperparameters using gradient-based methods.

- There is also a focus on developing more efficient and robust optimization algorithms, such as early stopping-based methods, to handle the computational challenges of hyperparameter tuning.

- The integration of machine learning and optimization is expected to lead to more powerful and automated hyperparameter tuning solutions in the future.

In summary, the future trends in hyperparameter tuning involve the development of more automated and integrated solutions that leverage advanced optimization techniques and machine learning methods to streamline the hyperparameter tuning process and improve model performance.

Conclusion:

In conclusion, hyperparameter tuning plays a crucial role in optimizing machine learning models for enhanced performance and efficiency. By fine-tuning hyperparameters, models can achieve better generalization and robustness. Future trends point towards automated solutions and the integration of machine learning and optimization techniques, promising more efficient and effective hyperparameter tuning processes. Embracing these advancements will lead to improved model accuracy and scalability in the ever-evolving field of machine learning.

Frequently Asked Questions (FAQs)

1) Why are hyperparameters important in machine learning?

Hyperparameters are crucial in machine learning as they control the learning process and significantly impact a model’s performance and efficiency

2) What does hyperparameter tuning do in machine learning?

Hyperparameter tuning optimizes a model’s hyperparameters to enhance performance, prevent overfitting, and improve generalization to new data

3) What is the best way to tune hyperparameters?

The best way to tune hyperparameters involves techniques like grid search, random search, Bayesian optimization, and manual tuning, depending on the problem and dataset

4) What are hyperparameters in machine learning code?

Hyperparameters in machine learning code are configuration variables that control the learning process, such as learning rate, number of layers, and activation functions

5) Which dataset is used for tuning hyperparameters?

Various datasets can be used for tuning hyperparameters, depending on the specific problem and the need to evaluate model performance on different data distributions.