Machine learning algorithms are the backbone of modern data-driven solutions, enabling computers to learn from data and make predictions or decisions without being explicitly programmed. These algorithms form the foundation of various applications across industries, from recommendation systems in e-commerce to predictive maintenance in manufacturing. At its core, machine learning involves the development and deployment of algorithms that allow computers to identify patterns and relationships within data, ultimately extracting valuable insights or making accurate predictions.

These algorithms can be broadly categorized into supervised, unsupervised, and reinforcement learning, each serving different purposes based on the nature of the data and the desired outcome. Understanding the principles behind these algorithms is essential for data scientists and machine learning practitioners to effectively leverage their capabilities and address complex real-world problems. As the field of machine learning continues to evolve, mastering these algorithms becomes increasingly crucial for unlocking the full potential of data-driven decision-making in various domains.

Understanding Machine Learning Algorithms

What are Machine Learning Algorithms?

Machine learning algorithms are computational procedures designed to enable machines to learn from data and improve their performance on a specific task over time, without being explicitly programmed. These algorithms allow computers to identify patterns, extract meaningful insights, and make predictions or decisions based on the data they analyze. In essence, machine learning algorithms provide the framework for automating the process of learning from data, enabling systems to adapt and evolve in response to new information or changing circumstances. By leveraging statistical techniques and mathematical models, these algorithms can uncover complex relationships within datasets and generate actionable results that drive decision-making and problem-solving in various domains.

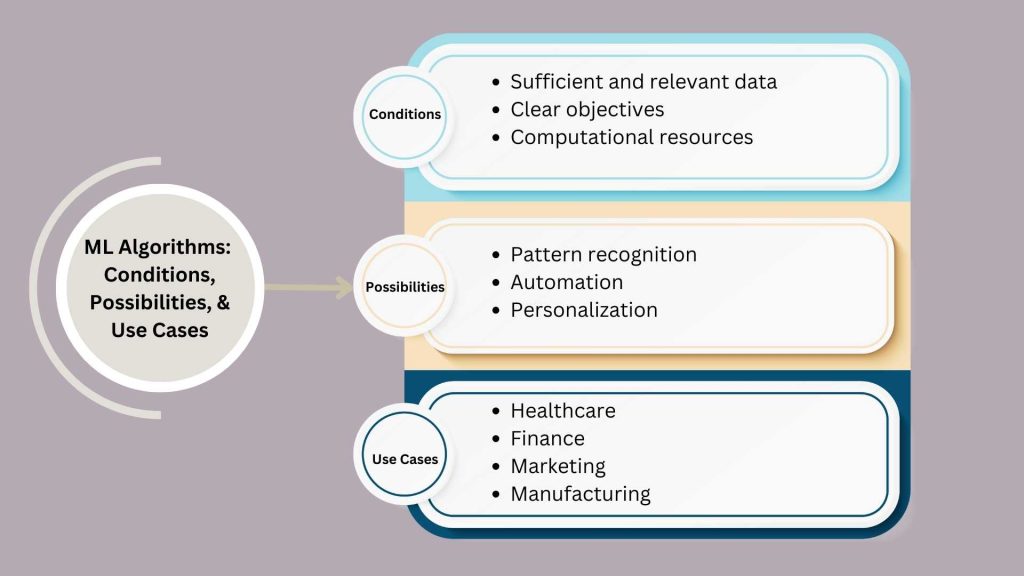

ML Algorithms: Look Conditions, Possibilities, & Use Cases

- Conditions: Machine learning algorithms require certain conditions to be met for effective application, including:

- Sufficient and relevant data: Algorithms rely on data to learn patterns and make predictions, so having high-quality and diverse datasets is essential.

- Clear objectives: Defining the problem statement and desired outcomes helps in selecting the appropriate algorithm and evaluating its performance.

- Computational resources: Some algorithms may require significant computational power or memory, so the availability of resources is a consideration.

- Possibilities: Machine learning algorithms offer a range of possibilities, such as:

- Pattern recognition: Algorithms can identify hidden patterns and correlations within data, enabling predictive analytics and decision-making.

- Automation: By automating tasks like image recognition, natural language processing, and anomaly detection, algorithms streamline processes and improve efficiency.

- Personalization: Algorithms power recommendation systems and personalized user experiences by analyzing user behavior and preferences.

- Use Cases: Machine learning algorithms find applications across various domains, including:

- Healthcare: Predictive models assist in disease diagnosis, treatment planning, and patient monitoring.

- Finance: Algorithms are used for fraud detection, risk assessment, and algorithmic trading.

- Marketing: Recommendation systems and customer segmentation algorithms optimize marketing campaigns and improve customer engagement.

- Manufacturing: Predictive maintenance algorithms help in identifying equipment failures before they occur, minimizing downtime and costs.

Use Machine Learning Models Examples Practical Application

Machine learning models are the practical application of machine learning algorithms. These models take the learnings from trained algorithms and use them to perform specific tasks. Here are some examples of different machine learning models in action:

- Recommendation Systems: These systems are a type of information filtering system that seeks to predict the rating or preference a user would give to an item.

- They are prevalent on platforms like Netflix, Amazon, and Spotify.

- For example, Netflix uses your viewing history to recommend other shows you might like, while Amazon suggests products based on your browsing and purchasing history.

- These systems typically use collaborative filtering, content-based filtering, or a hybrid approach combining both methods.

- Image Recognition: This involves computer systems being able to identify objects, places, people, writing, and actions in images.

- Image recognition technology uses neural networks that have been trained on millions of images. In smartphones, this technology helps in facial recognition for security (unlocking devices).

- Autonomous vehicles, help in identifying obstacles, traffic signs, and other important elements to navigate safely.

- Spam Filtering: Email providers utilize machine learning to filter out spam from a user’s inbox.

- These models analyze various aspects of incoming emails, such as the sender’s information, the content of the message, and how similar messages have been handled in the past.

- Over time, these systems learn from flagged emails to improve their accuracy in identifying unwanted emails.

- Fraud Detection: In the financial industry, machine learning models are vital in identifying unusual patterns that may suggest fraudulent activities.

- By analyzing millions of transactions, these systems can detect anomalies that deviate from typical user behavior and flag them for further investigation, thus helping to prevent potential financial losses.

- Speech Recognition: This technology converts spoken language into text.

- Virtual assistants like Siri, Alexa, and Google Assistant use speech recognition to understand user commands and perform actions accordingly.

- These systems are continuously refined to better understand various accents, dialects, and languages, making them more versatile and user-friendly.

- Machine Translation: Services like Google Translate use machine learning to convert text or spoken words from one language to another.

- These models have significantly improved over the years, providing more accurate and contextually appropriate translations.

- They work by analyzing large datasets of existing translations and learning linguistic nuances.

- Stock Price Prediction: Some machine learning models are designed to analyze historical data and market trends to predict future stock prices.

- Although these predictions are not always perfect due to the volatile nature of the stock market, they can provide valuable insights and help investors make informed decisions.

These are just a few examples, and new applications for machine learning models are being developed all the time. As machine learning algorithms become more sophisticated and data becomes more plentiful, we can expect even more innovative applications to emerge in the future. As MLOps Engineers advance their expertise and data availability increases, anticipate the emergence of even more groundbreaking applications.

Factors to Consider When Choosing an Algorithm

Choosing the right machine learning algorithm for a specific problem can be a crucial decision in the success of a project. Here are several factors to consider when selecting an algorithm:

- Problem Type:

- Classification: If you need to categorize data into predefined labels, classification algorithms like Logistic Regression, Decision Trees, or Neural Networks are appropriate.

- Regression: For predicting continuous values, consider regression algorithms such as Linear Regression, Support Vector Regression, or Random Forest Regression.

- Clustering: If the task involves grouping a set of objects in such a way that objects in the same group are more similar to each other than to those in other groups, clustering algorithms like K-Means or Hierarchical Clustering are suitable.

- Dimensionality Reduction: Techniques like PCA (Principal Component Analysis) or t-SNE are used when you need to reduce the number of random variables under consideration.

- Data Size and Quality:

- Size: Some algorithms, like Support Vector Machines, can be inefficient with large datasets. In contrast, algorithms like Stochastic Gradient Descent or Mini-batch K-means are better suited for large datasets.

- Quality: If your data has many missing values or noise, you might need algorithms that are robust to such issues, like Random Forest or Decision Trees, which can handle missing values and outliers.

- Accuracy vs. Interpretability:

- Some complex models like Neural Networks and Ensemble Methods (e.g., Random Forests, Gradient Boosting Machines) provide high accuracy but are less interpretable.

- Simpler models like Decision Trees or Linear Regression offer more interpretability but might not achieve the same level of accuracy.

- Training Time:

- Consider the training time available and the urgency of the deployment. Algorithms like Naive Bayes and Linear Regression are faster to train compared to more complex models like Neural Networks or Ensemble Methods.

- Number of Features:

- For datasets with a large number of features, dimensionality reduction techniques or models that have built-in feature selection capabilities like Regularized Regression (Lasso, Ridge) or Tree-based methods (which can provide feature importance scores) can be beneficial.

- Nonlinearity:

- If the relationship between the independent and dependent variables is non-linear, linear models like Linear Regression will not perform well.

- In such cases, consider using algorithms that can model non-linear relationships like Decision Trees, SVM with non-linear kernels, or Neural Networks.

- Scalability:

- Consider whether the algorithm can scale efficiently as the amount of data grows. Some algorithms inherently scale well horizontally (e.g., Deep Learning with distributed computing frameworks), while others might require significant computational resources as data volume increases.

- Updates and New Data:

- If the model needs to be updated frequently with new data, consider using algorithms that support online learning, where the model can be updated incrementally (e.g., Online Gradient Descent, Adaptive Boosting).

- Hardware Resources:

- Some algorithms, especially deep learning models, may require substantial hardware resources (like GPUs) for training. Ensure that the chosen algorithm is compatible with the available hardware.

By carefully considering these factors, you can choose a machine learning algorithm that best fits the needs of your project, balancing between accuracy, interpretability, training time, and computational resources.

Algorithm Selection: Machine Learning Algorithms Components

Scalability

- Computational Efficiency: Evaluate the algorithm’s scalability in terms of computational complexity and memory requirements.

- Parallelization: Assess whether the algorithm can be parallelized to leverage distributed computing resources for faster processing.

- Incremental Learning: Consider the algorithm’s ability to handle streaming data or adapt to changes over time without retraining the entire model.

Computational Resources

- Hardware Requirements: Determine the hardware resources needed to train and deploy the algorithm, such as CPU, GPU, or specialized hardware accelerators.

- Memory Usage: Assess the algorithm’s memory footprint during training and inference to ensure compatibility with available resources.

- Cost Considerations: Evaluate the cost implications of using the algorithm in terms of hardware, software licenses, and maintenance.

Popular ML Algorithms: Unveiling To Be Linear Regression

Linear Regression:

- Used for predicting continuous values based on a linear relationship between features and target variables.

- Imagine trying to fit a straight line through a set of points to model the relationship between features and the target variable.

Logistic Regression:

- Used for classification problems with binary outcomes (0 or 1).

- Predicts the probability of an event happening based on features.

- Common use case: Spam filtering (classifying emails as spam or not spam).

Decision Trees:

- The tree-like structure classifies data by asking a series of questions about features.

- Each question splits the data into smaller and smaller groups based on the answer.

- Easy to interpret the logic behind the decision-making process.

Random Forests:

- An ensemble method combining multiple decision trees for improved accuracy and reduced overfitting.

- Overfitting is when a model performs well on training data but poorly on unseen data.

- Random Forests address this by training each tree on a random subset of features.

Support Vector Machines (SVM):

- Finds a hyperplane that best separates data points belonging to different classes.

- A hyperplane is a generalization of a line in higher dimensions.

- SVMs are well-suited for high-dimensional data and small datasets.

K-Nearest Neighbors (KNN):

- Classifies data points based on the majority vote of its k nearest neighbors.

- We need to tune the value of k for optimal performance.

- Simple and easy to implement, but can be computationally expensive for large datasets.

Exploring Enormous Machine Learning Naive Bayes Vs Neural Nets

Naive Bayes:

- Classifies data based on Bayes’ theorem assuming independence between features.

- Naive Bayes is efficient and works well for some problems, but the assumption of independence between features may not always hold.

Neural Networks:

- Inspired by the human brain, these models learn complex patterns from data through interconnected layers.

- Neural networks are powerful and versatile, but they can be computationally expensive to train and require a lot of data.

Clustering Algorithms:

- Used to group similar data points without predefined class labels.

- Useful for tasks like customer segmentation or image segmentation.

- There are many different clustering algorithms, each with its strengths and weaknesses.

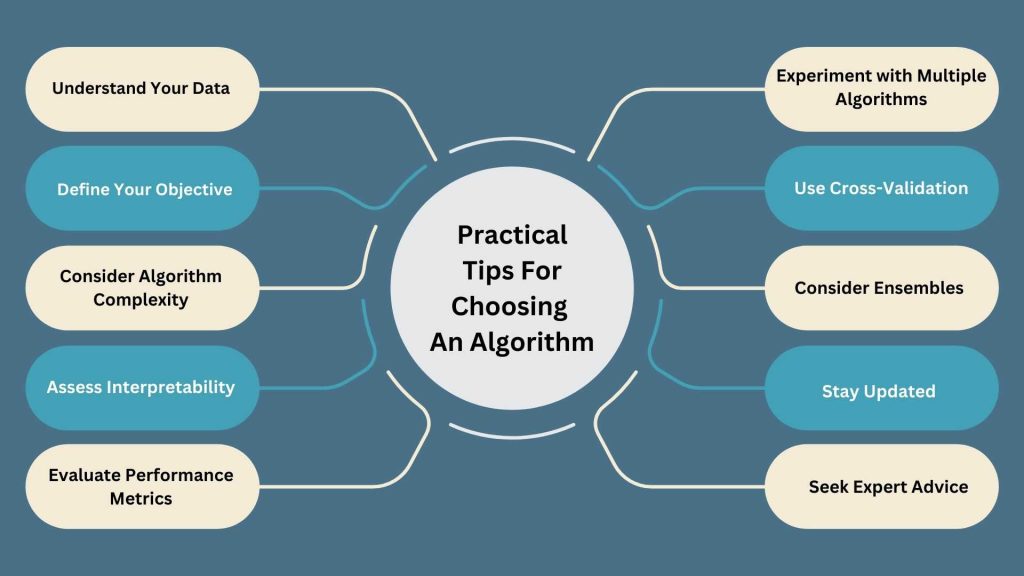

Revealing Best Practical Tips For Choosing ML Algorithms

- Understand Your Data: Gain a deep understanding of your dataset, including its characteristics, size, and quality, before selecting an algorithm.

- Define Your Objective: Clearly define the problem you’re trying to solve and the desired outcome to narrow down the choice of algorithms.

- Consider Algorithm Complexity: Evaluate the complexity of the algorithm in terms of computational resources required and scalability to your dataset size.

- Assess Interpretability: Depending on your needs, consider the interpretability of the algorithm and how easily you can explain its results to stakeholders.

- Evaluate Performance Metrics: Choose appropriate performance metrics based on your problem type (e.g., accuracy, precision, recall) and evaluate how well each algorithm performs against these metrics.

- Experiment with Multiple Algorithms: Don’t limit yourself to a single algorithm; experiment with multiple algorithms to see which one performs best on your data.

- Use Cross-Validation: Employ techniques like cross-validation to assess the generalization performance of each algorithm and ensure it’s not overfitting to the training data.

- Consider Ensembles: Combine multiple algorithms through ensemble methods like bagging or boosting to improve predictive accuracy and robustness.

- Stay Updated: Keep abreast of the latest advancements in machine learning algorithms and techniques to continuously refine your approach and stay competitive.

- Seek Expert Advice: When in doubt, seek advice from experts or consult with colleagues who have experience in machine learning to guide your algorithm selection process.

Conclusion

In conclusion, understanding machine learning algorithms is essential for leveraging the power of data-driven solutions across various domains. From linear regression to neural networks and clustering algorithms, each algorithm offers unique strengths and capabilities suited to different types of data and tasks.

Factors such as data characteristics, problem type, and algorithm complexity play crucial roles in selecting the most appropriate algorithm for a given task. Additionally, successful implementation requires considering practical aspects such as interpretability, scalability, and computational resources. By following practical tips for algorithm selection and staying updated on advancements in the field, practitioners can harness the full potential of machine learning to solve complex problems, make informed decisions, and drive innovation in diverse industries.

Frequently Asked Questions

1) What are the four 4 types of machine learning algorithms?

The four types of machine learning algorithms are supervised learning, unsupervised learning, semi-supervised learning, and reinforcement learning.

2) What are the six machine learning algorithms?

There are many machine learning algorithms, including linear regression, logistic regression, decision trees, random forests, support vector machines (SVM), and k-nearest neighbors (KNN).

3) How do I choose which ML algorithm to use?

Choose an ML algorithm based on factors like your problem type, data characteristics, algorithm complexity, interpretability, scalability, and available computational resources.

4) How to determine which algorithm is better in machine learning?

Determine the algorithm’s performance by evaluating metrics like accuracy, precision, recall, and F1 score using techniques such as cross-validation and comparing against baseline models.

5) What type of machine learning algorithm should I use?

The type of machine learning algorithm to use depends on your data, problem type, and desired outcome; for example, use supervised learning for labeled data and unsupervised learning for exploring patterns in unlabeled data.