Mastering MLOps: The Rise and Impact of ML Models

Machine learning (ML) has rapidly transformed industries such as healthcare, finance, and technology. However, successfully deploying and managing ML models in production environments requires a specialized discipline known as MLOps (Machine Learning Operations).

MLOps bridges the gap between data science and software engineering, ensuring that ML models integrate smoothly into existing infrastructure. This includes tasks like:

- Model deployment and scaling: Deploying models to production environments and scaling them efficiently to handle increasing workloads.

- Model monitoring and management: Continuously monitoring model performance, detecting issues, and taking corrective action.

- Model versioning and governance: Keeping track of different model versions and ensuring compliance with ethical and regulatory standards.

Career Stage And Benefits: Optimizing With ML Models

This article provides information and guidance for individuals at various stages of their career or pre-qualification who are interested in mastering MLOps (Machine Learning Operations). Let’s break down the relevance for different stages:

Students or Aspiring Data Scientists:

- Relevance: This article is highly relevant for students or individuals aspiring to enter the field of machine learning and data science. It introduces the importance of MLOps, the skills required, and the tools and technologies involved.

- Action Steps: As a student, you can benefit by understanding the key concepts, tools, and technologies mentioned in the article. Consider enrolling in MLOps-related courses, workshops, or internships to gain practical experience.

Early-Career Data Scientists or Software Engineers:

- Relevance: The article outlines the significance of MLOps in today’s competitive landscape. It emphasizes the role of MLOps in reducing time to market, improving model performance, and ensuring reliability and security.

- Action Steps: If you are early in your career, consider specializing in MLOps. Explore the mentioned tools and technologies, and look for opportunities to apply MLOps principles in your projects. Consider enrolling in the Mastering MLOps Internship Program mentioned in the article.

Experienced Data Scientists or Software Engineers:

- Relevance: For those with experience in data science or software engineering, the article provides insights into the growing demand for MLOps professionals. It highlights the industry trends and demands across various sectors.

- Action Steps: If you’re experienced, evaluate your current skill set against the skills mentioned in the article. Consider upskilling in MLOps tools and technologies to stay competitive. The article also suggests exploring real-world industry practices, which can be beneficial for experienced professionals.

Navigating Innovation: Insights from Tech Managers

- Relevance: Decision-makers in tech companies can benefit from the article’s insights into the importance of MLOps for business success. It discusses the impact of MLOps on innovation, career acceleration, and real-world impact.

- Action Steps: If you are in a managerial role, consider investing in MLOps training for your team. Understand the tools and frameworks mentioned to make informed decisions about integrating MLOps practices into your organization.

Data Science Enthusiasts or Career Switchers:

- Relevance: Individuals looking to switch careers or enter the field of data science can use this article as a roadmap. It provides an overview of the skills and qualifications required for mastering MLOps.

- Action Steps: Start by acquiring the foundational knowledge in data science and then gradually delve into MLOps concepts. Explore the tools and frameworks mentioned in the article through hands-on projects.

Anyone Interested in Machine Learning and Technology Trends:

- Relevance: The article is suitable for anyone interested in staying informed about the latest trends in machine learning and technology. It discusses the future of MLOps and the increasing demand for professionals in this field.

- Action Steps: Stay updated on industry trends by exploring the suggested tools, technologies, and frameworks. Attend relevant workshops, webinars, or conferences to gain more insights.

In summary, the article caters to a broad audience, including students, early-career professionals, experienced individuals, managers, and enthusiasts interested in the intersection of machine learning and operations. The action steps vary based on individual goals and career stages.

Why Mastering Machine Learning Operations Matters:

In today’s competitive landscape, organizations need to deploy ML models quickly and efficiently to gain an edge. MLOps plays a critical role in this process by:

- Reducing time to market: Faster model deployment leads to a quicker realization of business value and competitive advantage.

- Improving model performance: Continuous monitoring and optimization ensure models maintain high accuracy and performance in real-world scenarios.

- Scaling models efficiently: MLOps infrastructure allows models to scale seamlessly to accommodate growing data volumes and user demands.

- Ensuring model reliability and security: Robust MLOps practices ensure models operate reliably and securely, mitigating potential risks and vulnerabilities.

The Mastering MLOps Internship Program:

This internship program provides aspiring MLOps engineers with the knowledge and practical experience necessary to thrive in this rapidly growing field. Through a combination of theoretical learning and hands-on projects, you will gain expertise in:

- MLOps tools and technologies: Learn about popular tools like Kubernetes, TensorFlow, Kubeflow, and MLflow for building and managing MLOps pipelines.

- Cloud platforms: Understand how to deploy and manage ML models on leading cloud platforms like AWS, Azure, and Google Cloud.

- DevOps principles: Integrate DevOps principles into your MLOps workflow for increased efficiency and automation.

- Real-world industry practices: Gain practical experience by working on actual MLOps projects relevant to various industry domains.

Relevance and Industry Importance of Mastering MLOps

The MLOps Internship Program is a gateway to opportunities in the dynamic landscape of machine learning. It equips participants with the essential skills and hands-on experience needed to navigate the intersection of data science and software engineering.

Key Significance of Mastering Machine Learning Operations:

Industry Relevance: In a world where the demand for MLOps professionals is soaring, this program ensures participants are not just job-ready but stand out in a fiercely competitive market.

Innovation Catalyst: By bridging the gap between theory and practical application, participants are poised to contribute to cutting-edge projects. This isn’t just an internship; it’s a launchpad for driving innovation and positive change.

Career Acceleration: The program isn’t just about learning; it’s about transformation. It provides a fast track to career growth by instilling a robust foundation in MLOps, setting participants on a trajectory toward achieving their long-term professional aspirations.

Real-World Impact: Participants aren’t just observers; they are active contributors to the development and implementation of revolutionary ML solutions. This hands-on experience ensures they are at the forefront of technological advancement, ready to make a tangible impact on industries and lives.

In essence, the MLOps Internship Program isn’t just an educational journey; it’s a strategic investment in a future where expertise in machine learning operations is a defining factor for success. Enroll now to be a catalyst for change and a leader in the era of machine learning innovation.

Scope of the Mastering Machine Learning Operations Era

MLOps encompasses a wide range of activities:

- Model development: Building, testing, and validating machine learning models.

- Model deployment: Integrating models into production environments and deploying them to various platforms.

- Model monitoring: Tracking the performance of models in production and identifying issues.

- Model management: Versioning, controlling, and governing the use of models.

- Infrastructure automation: Automating the deployment and management of infrastructure for machine learning pipelines.

- Observability and logging: Collecting and analyzing data to understand how models are performing.

- Security and compliance: Ensuring that models are secure and compliant with relevant regulations.

Industry Trends and Demands:

The demand for MLOps professionals is rapidly increasing across various industries, including:

- Technology: Tech giants like Google, Amazon, and Microsoft are heavily investing in MLOps to improve the efficiency and scalability of their machine learning initiatives.

- Finance: Banks and financial institutions are using MLOps to develop fraud detection models, personalize financial products, and optimize investment decisions.

- Healthcare: Healthcare organizations are leveraging MLOps to develop diagnostic tools, analyze medical images, and predict patient outcomes.

- Retail: Retailers are using MLOps to personalize customer experiences, optimize pricing strategies, and manage inventory.

- Manufacturing: Manufacturing companies are using MLOps to predict equipment failures, optimize production processes, and improve quality control.

This increasing demand is driven by several factors:

- Growing adoption of machine learning: Organizations are increasingly turning to machine learning to solve complex business problems, leading to a greater need for MLOps expertise.

- Complexity of machine learning pipelines: Building and managing machine learning pipelines can be complex, requiring specialized skills and tools.

- Need for scalability and efficiency: As organizations scale their machine learning initiatives, they need efficient and automated ways to manage their models.

- Focus on data-driven decision-making: MLOps enables organizations to make data-driven decisions by providing insights into how their models are performing.

Skills and Qualifications of Mastering MLOps

To succeed in MLOps, individuals need a combination of technical and soft skills, including:

- Technical skills: Machine learning, software development, cloud computing, data analysis, and DevOps.

- Soft skills: Communication, collaboration, problem-solving, critical thinking, and project management.

Future of Mastering MLOps:

The future of MLOps is bright, with the market expected to grow significantly in the coming years. As machine learning becomes even more ubiquitous, the demand for MLOps professionals will continue to rise.

In summary, MLOps is a critical practice for ensuring the successful development and deployment of machine learning models. It is a rapidly growing field with significant demand for skilled professionals. By acquiring the necessary skills and knowledge, individuals can position themselves for exciting career opportunities in MLOps.

Essential MLOps: Tools, Tech, Frameworks Demystified

The success of Machine Learning Operations (MLOps) relies heavily on a robust technological foundation. This foundation comprises a diverse range of tools, technologies, and frameworks, each playing a crucial role in the ML lifecycle. Here’s a brief overview of the essential elements of the MLOps technology stack:

Model Training and Development:

- Machine Learning Frameworks: A machine learning framework is a software platform that provides the building blocks for developing and deploying ML models. It’s like your AI kitchen, equipped with all the utensils, ingredients, and recipes you need to cook up some groundbreaking solutions.

- PyTorch: A rising star, loved for its dynamic nature, intuitive API, and ease of experimentation. Imagine a Lego set for building custom neural networks.

- TensorFlow: The reigning champion, known for its scalability, flexibility, and vast community support. Think of it as the Swiss Army knife of ML frameworks.

- Scikit-learn: The Python go-to for traditional ML algorithms like decision trees and support vector machines. It’s your reliable companion for tackling everyday ML tasks.

- Version Control Systems: Version control systems (VCS) are software tools that track and manage changes to files over time. They’re like digital time machines for your code, design documents, or any other file you work on.

- What VCS do:

- Record changes: Every time you edit a file, the VCS takes a snapshot of it, creating a new version. This way, you can always go back to a previous version if needed.

- Prevent conflicts: VCS helps teams collaborate on files without overwriting each other’s work. They track who made what changes and when so you can easily merge edits without losing anything.

- Track history: VCS keeps a detailed log of changes made to each file, including who made them and why. This makes it easy to see how a project evolved and who contributed what.

- Share and collaborate: VCS makes it easy to share different versions of files with others. You can control who has access to what versions and track who’s working on what.

Git & Jupyter: Version Control, Code & Explore One Spot!

- Git: The most widely used VCS, known for its flexibility and distributed nature. It allows for version control of code and model artifacts, supporting reproducibility and collaboration.

- Jupyter Notebooks: Interactive playgrounds for code and exploration! Think of it as a digital lab notebook where you can run code, see results, and write explanations all in one place. Great for learning, tinkering, and sharing ideas visually.

- IDEs: Powerful workbenches for serious development. Imagine a feature-packed toolbox with code completion, debugging tools, and project management. Ideal for building large-scale applications and working efficiently on complex projects.

Data Preprocessing and Feature Engineering Libraries:

- Data is the fuel that powers machine learning models, but just like a car needs refined gasoline, raw data requires some processing to unlock its true potential. This is where data preprocessing and feature engineering libraries come in, acting as your skilled mechanics, transforming messy data into a clean, high-octane fuel for your models to thrive.

- Pandas: A powerful and versatile library for data cleaning, manipulation, and analysis.

- Dplyr (R): A grammar-based library for data manipulation in R, making it efficient and readable.
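To make the cleaning and scaling step concrete, here is a minimal sketch that uses only the Python standard library so it stays self-contained; in practice a library such as Pandas would usually handle this. The helper names (`impute_mean`, `standardize`) and the sample column are hypothetical, chosen purely for illustration.

```python
from statistics import mean, pstdev


def impute_mean(values):
    """Replace None entries with the mean of the observed values."""
    observed = [v for v in values if v is not None]
    fill = mean(observed)
    return [fill if v is None else v for v in values]


def standardize(values):
    """Rescale values to zero mean and unit (population) standard deviation."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        # A constant column carries no information; map it to zeros.
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


# Hypothetical raw feature column with one missing reading.
raw_sizes = [50.0, None, 70.0, 90.0]
clean_sizes = impute_mean(raw_sizes)      # missing value filled with the mean
scaled_sizes = standardize(clean_sizes)   # comparable scale across features
```

Mean imputation is only one possible choice here; depending on the data, dropping rows or using a median may be more appropriate.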

Model Deployment and Serving:

- Containerization Technologies: Containers are lightweight, isolated software packages that bundle your application code, libraries, dependencies, and runtime environment into a single, portable unit. Think of them as self-contained shipping containers for your code, ensuring it runs consistently regardless of the underlying infrastructure.

- Benefits of Containerization:

- Portability: Containers run seamlessly across different operating systems and hardware, making them truly portable. No more worrying about compatibility issues!

- Scalability: Easily scale your applications up or down by adding or removing containers, making resource management a breeze.

- Isolation: Each container runs in its own isolated environment, preventing conflicts and ensuring application stability.

- Efficiency: Containers are resource-efficient, requiring fewer resources than traditional virtual machines, leading to cost savings and faster deployment times.

- DevOps Magic: Containers simplify collaboration between developers and operations teams, enabling faster development cycles and streamlined deployments.

- Popular Containerization Technologies:

- Docker: The most widely used containerization platform, offering a user-friendly interface and a vast ecosystem of tools and resources.

- Kubernetes: An open-source system for automating container deployment, scaling, and management across clusters of machines.

- Cloud Platforms: Amazon SageMaker, Azure Machine Learning, Google Cloud AI Platform – provide managed services for deploying and managing ML models at scale.

- Model Serving Frameworks: TensorFlow Serving, TorchServe – handle model inference and scoring in production environments.

- APIs and Microservices: Enable integration of ML models into existing applications and services.

Model Monitoring and Optimization:

- Metrics and Logging Tools: Prometheus, Grafana – collect and visualize metrics related to model performance and health.

- Model Explainability Tools: LIME, SHAP – provide insights into model behavior and predictions, aiding debugging and fairness assessments.

- AutoML Tools: Auto-sklearn, Hyperopt – automate the process of model selection and hyperparameter tuning.

- Continuous Integration and Continuous Delivery (CI/CD): Jenkins, GitLab CI/CD – automate the deployment and delivery pipeline for ML models.

Orchestration and Automation:

- Workflow Management Systems: Airflow, Kubeflow – facilitate the orchestration of complex ML pipelines and workflows.

- Infrastructure Provisioning Tools: Terraform, Ansible – automate the provisioning and management of infrastructure resources used for MLOps.

- Monitoring and Alerting Tools: Datadog, PagerDuty – provide real-time monitoring and alerting capabilities for the MLOps environment.

Collaboration and Security:

- Model Registries: MLflow, Vertex AI Model Registry – store and track models, versions, and metadata in a centralized repository.

- Versioning and Governance Tools: MLflow, GitOps – enable versioning of models and help ensure compliance with governance policies.

- Identity and Access Management (IAM): AWS IAM, Azure AD – control access to models and resources within the MLOps platform.
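The idea behind a model registry can be sketched in a few lines. The class below is a hypothetical in-memory illustration, not the API of MLflow or any other product: it assigns increasing version numbers, fingerprints each artifact with a SHA-256 hash, and keeps metadata alongside. The model name and accuracy values are made up.

```python
import hashlib
import json


class ModelRegistry:
    """Toy in-memory registry: model name -> ordered list of versions."""

    def __init__(self):
        self._models = {}

    def register(self, name, artifact_bytes, metadata):
        """Store a new version and return its 1-based version number."""
        versions = self._models.setdefault(name, [])
        entry = {
            "version": len(versions) + 1,
            # The content hash lets you verify which artifact a version refers to.
            "sha256": hashlib.sha256(artifact_bytes).hexdigest(),
            "metadata": dict(metadata),
        }
        versions.append(entry)
        return entry["version"]

    def latest(self, name):
        """Return the most recently registered version entry."""
        return self._models[name][-1]

    def get(self, name, version):
        """Return a specific version entry (versions start at 1)."""
        return self._models[name][version - 1]


registry = ModelRegistry()
weights_v1 = json.dumps({"w": [0.1, 0.2]}).encode()
weights_v2 = json.dumps({"w": [0.3, 0.4]}).encode()
v1 = registry.register("churn-model", weights_v1, {"accuracy": 0.81})
v2 = registry.register("churn-model", weights_v2, {"accuracy": 0.84})
```

A real registry adds persistent storage, access control, and stage transitions, but the core record of name, version, fingerprint, and metadata is similar.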

This list provides an overview of the essential tools and technologies used in MLOps. The specific choices will vary with the needs and resources of an organization. However, understanding the fundamental components of the MLOps technology stack is crucial for aspiring MLOps engineers to navigate the field and build successful machine learning systems.

Foundational Knowledge:

Data Science Foundations:

Data science is a multidisciplinary field that blends statistics, computer science, and domain expertise to extract insights from data. It involves several key steps:

- Data Acquisition and Cleaning: Collecting, organizing, and pre-processing data from various sources. This often involves identifying and correcting errors, inconsistencies, and missing values.

- Exploratory Data Analysis (EDA): Analyzing and visualizing the data to understand its characteristics, patterns, and relationships between variables.

- Model Building and Training: Selecting and implementing appropriate machine learning algorithms, training them on the prepared data, and optimizing their performance.

- Model Evaluation and Deployment: Assessing the model’s accuracy and generalizability, and deploying it to production for real-world use.

- Monitoring and Improvement: Continuously monitoring the model’s performance, identifying potential issues, and iteratively improving it based on new data and feedback.

Data Science in MLOps:

MLOps, short for Machine Learning Operations, is the practice of automating and streamlining the ML lifecycle from development to deployment and maintenance. Data science plays a crucial role in MLOps through:

- Data Engineering: Building and maintaining data pipelines for efficient data acquisition, transformation, and storage.

- Feature Engineering: Creating relevant features from raw data to improve model performance.

- Model Training and Deployment: Automating model training and deployment processes, ensuring their scalability and reliability in production environments.

- Monitoring and Feedback: Implementing automated monitoring systems to track model performance and gather feedback for continuous improvement.

Introduction to Machine Learning Concepts:

Machine learning empowers data science by enabling computers to learn from data without explicit programming. Here are some key concepts:

- Supervised Learning: Algorithms learn from labeled data (inputs and desired outputs) to make predictions on new, unseen data. Examples include regression and classification.

- Unsupervised Learning: Algorithms discover patterns and hidden structures in unlabeled data without prior knowledge. Examples include clustering and dimensionality reduction.

- Reinforcement Learning: Algorithms learn through trial and error in interactive environments, receiving rewards for desired behaviors. This is often used in robotics, game-playing, and control systems.

Further Exploration:

This brief overview provides a starting point. To delve deeper, consider exploring:

- Specific machine learning algorithms: Learn about popular algorithms like Linear Regression, Decision Trees, Support Vector Machines, and Neural Networks.

- Data science tools and libraries: Familiarize yourself with tools like Python, R, Pandas, NumPy, Scikit-learn, and TensorFlow.

- MLOps platforms and tools: Explore platforms like Kubeflow, MLflow, and Argo CD that automate ML workflows and deployments.

By understanding the fundamentals of data science and its role in MLOps, you can unlock the power of machine learning to extract valuable insights from data and solve real-world problems in various fields.

Practical Use of Popular ML Libraries and Frameworks: Building Basic Models for Hands-on Experience

Ready to dive into the world of Machine Learning (ML) with practical projects? Let’s explore how popular libraries and frameworks can help you build basic models and gain valuable hands-on experience.

Popular Libraries and Frameworks:

- Python: The de facto standard for ML, offering rich libraries like NumPy, Pandas, Scikit-learn, TensorFlow, PyTorch, and Keras.

- R: Another popular language, known for its statistical analysis capabilities and libraries like ggplot2, caret, and dplyr.

- Java: Popular for enterprise applications, with libraries like Weka, H2O, and Deeplearning4j.

Building Basic Models:

- Linear Regression: Predict a continuous target variable based on a linear relationship with features. (e.g., predicting house prices based on size and location).

- Logistic Regression: Classify data points into two categories based on features. (e.g., predicting whether an email is spam or not).

- K-Nearest Neighbors: Classify data points based on the closest neighbors in the feature space. (e.g., recommending similar products based on past purchases).

- Decision Tree: Classify data points by splitting them based on features, forming a tree-like structure.
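As an illustration of the first model in the list, the sketch below fits a one-feature linear regression with the closed-form least-squares formulas, using only the standard library. The function name `fit_line` and the house-size data are invented for the example; a library such as Scikit-learn would normally do this for you and handle multiple features.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept (one feature)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance of x and y, and variance of x (both unnormalized).
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Made-up data: house size in square meters and price in thousands.
sizes = [50, 60, 80, 100]
prices = [150, 180, 240, 300]

slope, intercept = fit_line(sizes, prices)
predicted_price = slope * 70 + intercept  # prediction for an unseen 70 m^2 house
```

Because the toy prices are an exact multiple of size, the fitted line passes through every point; real data would leave residual error to evaluate.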

Hands-on Experience:

- Choose a dataset: Find publicly available datasets relevant to your interests, like housing prices, movie ratings, or customer reviews.

- Select a library/framework: Start with a beginner-friendly option like Scikit-learn or Keras.

- Preprocess the data: Clean and prepare your data for model training, handling missing values and scaling features.

- Train the model: Choose your model and train it on the prepared data, adjusting hyperparameters for optimal performance.

- Evaluate the model: Assess the model’s quality using metrics like accuracy, precision, recall, or F1-score.

- Visualize the results: Explore how the model performs and identify any patterns or insights.
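The evaluation step in the list above can be sketched without any third-party library. The function below computes accuracy, precision, recall, and F1 for a binary classifier from their standard definitions; the name `classification_metrics` and the label lists are hypothetical examples.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall, and F1 for binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)

    accuracy = correct / len(pairs)
    # Guard against division by zero when a class is never predicted or present.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Made-up ground truth and predictions (1 = spam, 0 = not spam).
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
metrics = classification_metrics(y_true, y_pred)
```

Which metric matters most depends on the problem: precision when false alarms are costly, recall when misses are costly.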

Additional Tips:

- Start with small, simple datasets to build confidence.

- Focus on understanding the core concepts behind each model.

- Utilize online tutorials, documentation, and communities for support.

- Participate in online competitions or hackathons for practical challenges.

- Don’t be afraid to experiment and try different models and techniques.

By building basic models with popular libraries and frameworks, you’ll gain valuable hands-on experience and lay the foundation for your ML journey. Remember, the key is to start small, experiment, and learn from each step along the way.

Data Analysis in MLOps: A Crucial Step for ML Models

MLOps, or Machine Learning Operations, focuses on automating and managing the entire machine learning lifecycle, from data acquisition to model deployment and monitoring. Data analysis plays a crucial role throughout this workflow, providing insights and guiding decision-making at every stage.

Here’s an overview of the ML workflow and how data analysis integrates within each stage:

Data Acquisition and Preparation:

- Data Analysis: Identifying and accessing relevant data sources, assessing data quality and completeness, and performing basic cleaning and pre-processing tasks.

- Tools: Data wrangling tools like Pandas, Spark, and Sqoop for data extraction, transformation, and loading (ETL).

Model Training and Development:

Data Analysis:

- EDA Insights: Uncover data patterns, outliers, and relationships for informed modeling decisions.

- Feature Engineering Boost: Enhance model accuracy by crafting impactful features through strategic transformations.

- Tools: Jupyter notebooks, RStudio, and statistical software like SAS and SPSS for data visualization and advanced analysis.

Model Training and Experimentation:

- Data Analysis: Splitting data into training, validation, and testing sets for model evaluation. Analyzing model performance metrics like accuracy, precision, and recall to select the best model.

- Tools: Machine learning libraries like TensorFlow, PyTorch, and scikit-learn for model development, training, and evaluation.
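Splitting data into training, validation, and test sets might look like the following standard-library sketch. The function name, the 60/20/20 proportions, and the fixed seed are assumptions made for illustration; Scikit-learn offers comparable utilities.

```python
import random


def train_val_test_split(rows, val_fraction=0.2, test_fraction=0.2, seed=0):
    """Shuffle rows reproducibly and cut them into three disjoint sets."""
    shuffled = list(rows)                   # copy so the input is not mutated
    random.Random(seed).shuffle(shuffled)   # seeded for reproducible splits
    n_test = int(len(shuffled) * test_fraction)
    n_val = int(len(shuffled) * val_fraction)
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]
    return train, val, test


rows = list(range(100))  # stand-in for 100 labeled examples
train, val, test = train_val_test_split(rows)
```

The validation set guides model selection and tuning, while the test set is held back for a final, unbiased estimate. For time-ordered data, a chronological split is usually safer than a random shuffle.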

ML Model Deployment & Monitoring: Best Practices

Model Deployment and Monitoring:

- Data Analysis: Monitoring model performance in production, identifying potential drift or bias, and analyzing logs for debugging and troubleshooting.

- Tools: Model monitoring and logging tools like Prometheus and Grafana for real-time insights into model performance and behavior.
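One simple way to flag potential drift is to compare a feature's mean in production against its mean in the training data, measured in training standard deviations. This is only a sketch of one heuristic among many; the function names, the threshold of 2.0, and the sample values are illustrative assumptions.

```python
from statistics import mean, pstdev


def mean_shift_score(reference, current):
    """Absolute shift of the current mean, in reference standard deviations."""
    sigma = pstdev(reference)
    shift = abs(mean(current) - mean(reference))
    if sigma == 0:
        # Constant reference: any change at all counts as an unbounded shift.
        return 0.0 if shift == 0 else float("inf")
    return shift / sigma


def has_drifted(reference, current, threshold=2.0):
    """Flag drift when the mean moves more than `threshold` std deviations."""
    return mean_shift_score(reference, current) > threshold


# Made-up feature values: training data versus two production batches.
training_values = [10, 12, 11, 13, 9, 10, 12, 11]
stable_batch = [11, 10, 12, 11]
shifted_batch = [18, 19, 17, 20]

stable_alert = has_drifted(training_values, stable_batch)
shifted_alert = has_drifted(training_values, shifted_batch)
```

A check like this could run on a schedule and feed an alerting tool; distribution-level tests catch changes that a mean comparison misses.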

Model Optimization and Retraining:

- Data Analysis: Analyzing feedback and logs to identify areas for improvement in the model. Re-training the model with new data or adjusting hyperparameters to address performance issues.

- Tools: Continuous integration/continuous delivery (CI/CD) pipelines for automating model deployment and retraining processes.
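Within such a pipeline, a retrained model is often promoted only if it passes a quality gate. The sketch below shows one possible gate; the function name `should_promote`, the choice of F1 as the metric, and the threshold values are all assumptions for illustration rather than a standard.

```python
def should_promote(candidate_metrics, production_metrics,
                   metric="f1", min_gain=0.01, floor=0.7):
    """Promote only if the candidate clears a floor and beats production."""
    candidate = candidate_metrics[metric]
    current = production_metrics[metric]
    clears_floor = candidate >= floor              # absolute quality bar
    beats_current = candidate - current >= min_gain  # meaningful improvement
    return clears_floor and beats_current


# Made-up evaluation results on the same held-out test set.
production = {"f1": 0.80}
better_candidate = {"f1": 0.83}
worse_candidate = {"f1": 0.79}

promote_better = should_promote(better_candidate, production)
promote_worse = should_promote(worse_candidate, production)
```

A CI/CD job could call a check like this after retraining and stop the deployment stage when it returns False.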

Benefits of Data Analysis in MLOps:

- Improved model performance: Data analysis helps build better models by identifying relevant features, optimizing training data, and addressing potential biases.

- Reduced risk of errors: Analyzing logs and monitoring performance helps identify and prevent model errors before they impact production.

- Increased efficiency and automation: Data analysis tools and pipelines can automate repetitive tasks and streamline the ML workflow.

- Enhanced decision-making: Data-driven insights inform decisions at every stage of the ML lifecycle, leading to better outcomes.

Mastering Machine Learning Operations Essentials:

Role of MLOps in the ML Lifecycle

Mapping MLOps to the Machine Learning Lifecycle: Identifying Key Milestones

MLOps (Machine Learning Operations) is the practice of automating and managing the entire machine learning lifecycle, from data acquisition and model development to deployment and monitoring. It aims to bridge the gap between the development and production of ML models, ensuring smooth and efficient delivery of business value.

Key Milestones in the MLOps Lifecycle:

Data Acquisition and Preparation:

- Milestone: Secure and reliable data sources identified and ingested.

- Activities: Data pipeline development, data cleaning, pre-processing, versioning, and governance.

Model Development and Training:

- Milestone: Initial model training completed and producing accurate results.

- Activities: Feature engineering, model selection and training, hyperparameter tuning, and model validation.

Model Deployment and Monitoring:

- Milestone: Model deployed in production environment and generating insights.

- Activities: Model packaging and containerization, infrastructure provisioning, deployment automation, monitoring, and logging.

Model Refinement and Retraining:

- Milestone: Model performance maintained and improved based on production data and feedback.

- Activities: Model monitoring for drift and anomalies, retraining with new data, A/B testing of different models.

Governance and Security:

- Milestone: Secure and responsible use of ML models throughout their lifecycle.

- Activities: Model explainability and fairness, bias detection and mitigation, data privacy, and security compliance.

Benefits of Mapping MLOps to the Machine Learning Lifecycle:

- Clarity and transparency: Identifies the crucial steps and their dependencies in building and deploying ML models.

- Improved efficiency: Streamlines the workflow and allows for automation of repetitive tasks.

- Reduced risk: Enables early identification and mitigation of potential issues.

- Scalability and agility: Supports faster development and deployment cycles, allowing for quicker adaptation to changing business needs.

Expanding on these points:

- Each milestone can be further broken down into smaller, achievable tasks, making the MLOps process more manageable.

- Tools and technologies specific to each stage can be identified and implemented to optimize the process.

- Measuring key metrics at each stage can provide valuable insights and track progress toward goals.

- Continuous feedback loops and collaboration between data scientists, engineers, and other stakeholders are crucial for successful MLOps implementation.

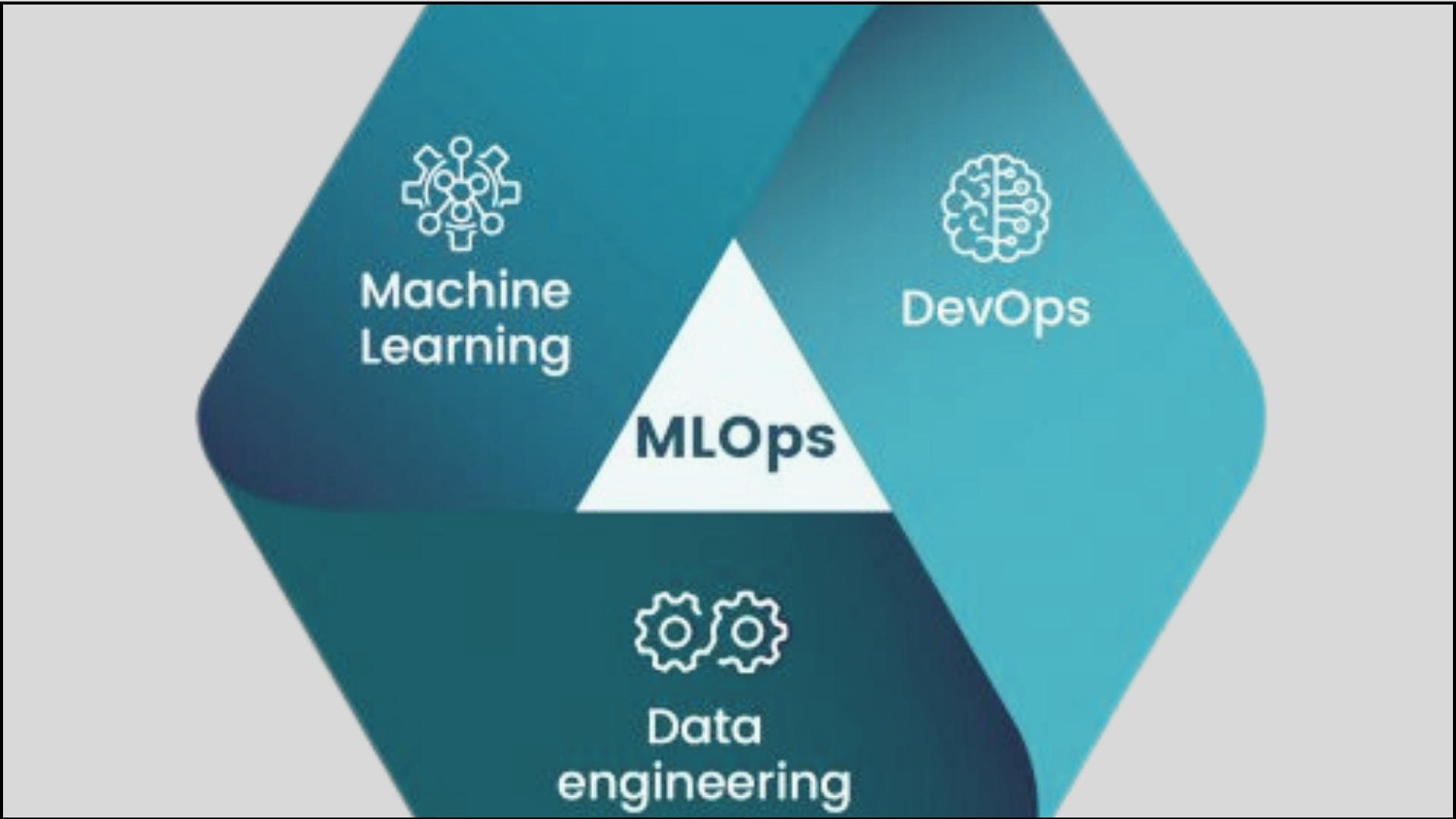

Integration of DevOps Principles

Understanding DevOps in the Context of MLOps: Synergies and Differences

DevOps and MLOps share a common DNA but have distinct nuances tailored to their respective domains. Here’s a breakdown:

DevOps:

- Focus: Streamlining software development and operations, fostering collaboration and faster delivery of software applications.

- Key principles: Continuous integration/continuous delivery (CI/CD), automation, infrastructure as code, and a cultural shift towards collaboration and shared responsibility.

- Benefits: Faster release cycles, improved software quality, reduced costs, and enhanced team agility.

MLOps:

- Focus: Applying DevOps principles to the machine learning lifecycle, from model development and deployment to monitoring and optimization.

- Key principles: Adapting CI/CD for ML models, version control for code and models, monitoring and logging for model performance, and scalable infrastructure for model training and deployment.

- Benefits: Faster deployment of ML models, improved model performance and reliability, reduced risk of errors, and increased operational efficiency.

Synergies between DevOps and MLOps:

- Common principles: Both rely on automation, continuous improvement, and collaboration. This creates a shared foundation for building and deploying ML models.

- Shared tools and methodologies: Many DevOps tools and best practices can be adapted for MLOps, such as CI/CD pipelines, containerization, and infrastructure as code.

- Improved communication and collaboration: DevOps principles encourage breaking down silos between development and operations. This benefits MLOps by fostering closer collaboration between data scientists, engineers, and IT teams.

- Faster ML adoption: Streamlined workflows and automated processes enable faster development and deployment of ML models, accelerating time to value.

Differences between DevOps and MLOps:

- Model complexity: An ML model’s behavior depends on its training data as well as its code, so it can change or degrade as the data changes. Managing that lifecycle requires specialized tools and techniques.

- Data and model versioning: MLOps needs robust version control for code, data, and models to track changes and ensure reproducibility.

- Monitoring and explainability: Monitoring model performance and ensuring explainability are crucial for MLOps and call for dedicated tooling.

- Scalability and infrastructure: MLOps often involves managing complex, scalable infrastructure for training and deploying models.

In conclusion, MLOps builds upon the foundation of DevOps but adds specific practices and tools tailored to the unique needs of managing ML models. By leveraging these synergies, organizations can accelerate their machine-learning journey and unlock the full potential of their data.

Further Exploration:

- Dive deeper into specific MLOps tools and platforms like Kubeflow, MLflow, and TensorFlow Serving.

- Explore case studies of successful MLOps implementations across different industries.

- Consider attending workshops or training programs to gain practical MLOps skills.

Version Control Systems

Importance of Version Control in ML Projects

Version control is crucial for successful ML projects for several key reasons:

Collaboration and reproducibility:

- Multiple team members can work on code, data, and models simultaneously while tracking every change.

- Earlier versions can be restored easily, ensuring the reproducibility of results and preventing regressions.

Experimentation and iteration:

- Different model configurations and hyperparameters can be tried and compared without overwriting previous work.

- Lessons learned from failed experiments can be preserved and analyzed.

Error tracking and debugging:

- Changes that introduce bugs can be quickly identified and rolled back.

- Code and data versions associated with successful results can be easily identified and reused.

Scalability and project management:

- Large projects can be organized into manageable units with clear version history.

- Collaboration and communication between team members are facilitated.

Sharing and collaboration:

- Projects can be easily shared with collaborators and stakeholders for review and feedback.

- Open-source contributions can be made with proper versioning and attribution.
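One way to make the reproducibility point above concrete is to store a content hash of the data next to the hyperparameters used for a run, so a result can always be traced back to its exact inputs. The sketch below is a minimal illustration using only the Python standard library. The function names `fingerprint_bytes` and `run_record`, the record layout, and the sample data are assumptions made for this example, not part of any particular tool.

```python
import hashlib
import json


def fingerprint_bytes(data: bytes) -> str:
    """Return a SHA-256 hex digest that identifies this exact content."""
    return hashlib.sha256(data).hexdigest()


def run_record(data: bytes, params: dict) -> str:
    """Build a JSON record tying a result to its data and configuration.

    Keys are sorted so the same inputs always produce the same text,
    which keeps diffs in version control small and meaningful.
    """
    record = {"data_sha256": fingerprint_bytes(data), "params": params}
    return json.dumps(record, sort_keys=True)


# Hypothetical training data and hyperparameters, for illustration only.
train_csv = b"feature,label\n0.1,0\n0.9,1\n"
record = run_record(train_csv, {"learning_rate": 0.01, "epochs": 5})
print(record)
```

Committing a small record like this alongside the code gives reviewers a quick way to check whether two runs really used the same data.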

Hands-on Experience with Version Control Systems

Here are two popular tools for versioning ML projects:

Git:

- A distributed VCS, meaning each team member has a complete copy of the repository.

- Offers branching and merging features for collaborative development.

- Widely used in the software development community and integrated with many ML tools.

MLflow:

- Open-source platform for managing the ML lifecycle, including experiment tracking and model versioning.

- Tracks experiments, models, and data versions alongside code changes.

- Integrates with popular ML frameworks like TensorFlow and PyTorch.

Let’s explore some hands-on activities to gain experience:

Setting up a Git repository for your ML project:

- Choose a hosting platform like GitHub or GitLab.

- Create a repository and connect it to your local project directory.

- Track and commit changes to your code, data, and models.

- Understand branching and merging for collaboration.
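The Git steps above can be sketched as a short sequence of commands. The example below builds that sequence in Python and prints it, with an optional helper that runs the commands through `subprocess`. The helper names `git_setup_commands` and `run_in`, the commit message, and the repository URL are placeholders assumed for illustration. The Git subcommands themselves (`init`, `add`, `commit`, `remote add`, `push -u`) are standard.

```python
import subprocess
from pathlib import Path


def git_setup_commands(remote_url: str, message: str) -> list[list[str]]:
    """Return the Git commands for a first commit and push, in order."""
    return [
        ["git", "init"],
        ["git", "add", "."],
        ["git", "commit", "-m", message],
        ["git", "remote", "add", "origin", remote_url],
        ["git", "push", "-u", "origin", "HEAD"],
    ]


def run_in(project_dir: Path, commands: list[list[str]]) -> None:
    """Run each command inside project_dir, stopping at the first failure."""
    for cmd in commands:
        subprocess.run(cmd, cwd=project_dir, check=True)


# Placeholder URL: replace it with the repository you created on your host.
commands = git_setup_commands(
    "https://example.com/your-name/ml-project.git", "Initial commit"
)
for cmd in commands:
    print(" ".join(cmd))
# To execute them for real: run_in(Path("path/to/project"), commands)
```

Printing the commands first makes it easy to review them before anything touches the project directory.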

Using MLflow for version control and tracking:

- Install MLflow and connect it to your project.

- Track experiments with different model configurations and hyperparameters.

- Register and compare different model versions.

- Use MLflow to share and deploy your model.
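As a rough sketch of the tracking step above, the example below expands a small hyperparameter grid and shows how each configuration could be logged with MLflow. The helper names `candidate_configs` and `track_run`, the experiment name, and the grid values are assumptions for illustration. The MLflow calls used (`set_experiment`, `start_run`, `log_params`, `log_metric`) are part of its tracking API, and the import is placed inside the function so the grid helper runs even where MLflow is not installed.

```python
from itertools import product


def candidate_configs(grid: dict) -> list[dict]:
    """Expand a grid of hyperparameter values into one dict per combination."""
    names = sorted(grid)
    return [dict(zip(names, values)) for values in product(*(grid[n] for n in names))]


def track_run(params: dict, accuracy: float, experiment: str = "demo-experiment") -> None:
    """Log one configuration and its result to MLflow."""
    import mlflow  # imported here so the grid helper works without MLflow installed

    mlflow.set_experiment(experiment)
    with mlflow.start_run():
        mlflow.log_params(params)
        mlflow.log_metric("accuracy", accuracy)


configs = candidate_configs({"learning_rate": [0.01, 0.1], "max_depth": [3, 5]})
for cfg in configs:
    print(cfg)
    # In a real project: train with cfg, evaluate, then call
    # track_run(cfg, accuracy=measured_accuracy)
```

Once runs are logged this way, the MLflow UI can be used to compare configurations side by side.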

Exploring other VCS options:

- Consider Mercurial, another distributed VCS, or SVN, a centralized system.

- Each VCS has its strengths and weaknesses, so choose the one that best suits your needs.

Tool Proficiency:

In-depth Training on MLOps Tools

Docker, Kubernetes, and Airflow: The Power Trio of MLOps

The rise of Machine Learning (ML) has created a complex landscape of tools and processes. Managing these complexities efficiently requires a powerful trio: Docker, Kubernetes, and Airflow. Let’s break down their roles in MLOps:

Docker: Building and Packaging ML Applications

Docker is a containerization platform that packages applications and their dependencies into isolated “containers.” Think of it like a self-contained box with everything your application needs to run, regardless of the underlying environment. This offers several benefits for MLOps:

- Portability: Docker containers run consistently across different operating systems and cloud environments, simplifying deployment and scaling.

- Isolation: Each container runs in its own isolated space, preventing conflicts between applications and their dependencies.

- Reproducibility: Docker images capture the exact environment needed to run your ML models, so the same image behaves the same way wherever it is deployed. This makes deployments repeatable and more reliable.

Kubernetes: Orchestrating the ML Pipeline

Kubernetes orchestrates the deployment, scaling, and networking of containerized applications. In MLOps, it takes the reins of your Docker containers, managing their lifecycle throughout the ML pipeline:

- Deployment: Kubernetes automates the deployment of your ML models across different environments, from development to production.

- Scaling: It automatically scales your containerized applications up or down based on demand, ensuring efficient resource utilization.

- Self-healing: Kubernetes can automatically restart containers that fail, ensuring continuous operation of your ML pipeline.
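To give a feel for the scaling behavior described above, the sketch below mirrors the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler: the desired replica count is the current count multiplied by the ratio of the observed metric to its target, rounded up. This is a simplified illustration rather than the autoscaler itself. The function name, the default bounds, and the sample numbers are assumptions, and details such as tolerance bands and stabilization windows are left out.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Scale the replica count in proportion to how far the metric is from its target."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp to the configured bounds (assumed defaults for this sketch).
    return max(min_replicas, min(max_replicas, raw))


# Load is twice the target: the replica count doubles.
print(desired_replicas(4, current_metric=200.0, target_metric=100.0))
# Load is half the target: the replica count halves.
print(desired_replicas(4, current_metric=50.0, target_metric=100.0))
```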

Airflow: Automating the Workflow

Airflow is a workflow automation platform that schedules and manages tasks in your ML pipeline. Workflows are defined in Python as directed acyclic graphs (DAGs), and a web interface visualizes the steps involved in training, deploying, and monitoring your models. This provides:

- Automation: Airflow automates repetitive tasks, freeing up your time for more strategic work.

- Visibility: It provides a centralized view of your entire ML pipeline, making it easier to track progress and identify bottlenecks.

- Reliability: Airflow ensures tasks are executed in the correct order and handles failures gracefully.
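The ordering and failure-handling ideas above can be illustrated without Airflow itself. The sketch below uses Python's standard `graphlib` module to run tasks in dependency order and retry a failing task. It is not the Airflow API. The function `run_pipeline`, the task names, and the retry policy are assumptions chosen to show the underlying idea of a dependency graph.

```python
from graphlib import TopologicalSorter


def run_pipeline(tasks: dict, depends_on: dict, max_retries: int = 1) -> list[str]:
    """Run tasks in dependency order, retrying a failed task up to max_retries times.

    tasks maps a task name to a zero-argument callable.
    depends_on maps a task name to the set of tasks that must finish first.
    """
    completed = []
    for name in TopologicalSorter(depends_on).static_order():
        for attempt in range(max_retries + 1):
            try:
                tasks[name]()
                break
            except Exception:
                if attempt == max_retries:
                    raise  # give up; tasks that depend on this one never start
        completed.append(name)
    return completed


log = []
tasks = {
    "prepare_data": lambda: log.append("prepare_data"),
    "train": lambda: log.append("train"),
    "evaluate": lambda: log.append("evaluate"),
    "deploy": lambda: log.append("deploy"),
}
depends_on = {
    "prepare_data": set(),
    "train": {"prepare_data"},
    "evaluate": {"train"},
    "deploy": {"evaluate"},
}
order = run_pipeline(tasks, depends_on)
print(order)
```

A scheduler such as Airflow adds scheduling, logging, and a user interface on top of this basic pattern.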

These tools form a powerful combination for MLOps:

- Docker builds and packages your ML applications in portable containers.

- Kubernetes orchestrates the deployment and scaling of these containers across your infrastructure.

- Airflow automates the workflow, ensuring tasks are executed efficiently and reliably.

CI/CD Pipelines for Machine Learning Projects

Implementing CI/CD Pipelines in MLOps: Best Practices for Continuous Integration and Deployment

MLOps (Machine Learning Operations) is all about streamlining the development and deployment of machine learning models. A crucial aspect of MLOps is implementing CI/CD pipelines, which automate the process of integrating code changes, testing models, and deploying them to production.

Here’s a brief overview of implementing CI/CD pipelines in MLOps, along with some best practices for continuous integration and deployment:

Building the Pipeline:

- Version control: Integrate your ML code and model artifacts with a version control system like Git to track changes and facilitate collaboration.

Continuous Integration (CI):

- Code testing: Automate unit and integration tests for your ML code and its dependencies.

- Model testing: Implement automated tests to evaluate the model’s performance on metrics like accuracy, precision, and recall.

- Packaging: Package your model and its dependencies into a deployable format such as a Docker container.
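The model testing step above can be sketched as a small quality gate that a CI job runs before packaging. The example below computes accuracy, precision, and recall for a binary classifier in plain Python and reports which metrics fall below a minimum. The function names, the labels, and the threshold values are assumptions chosen for illustration, and a real pipeline would load predictions from a held-out evaluation set.

```python
def classification_metrics(y_true: list[int], y_pred: list[int]) -> dict:
    """Compute accuracy, precision, and recall for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    correct = sum(1 for t, p in pairs if t == p)
    return {
        "accuracy": correct / len(pairs),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


def failed_checks(metrics: dict, thresholds: dict) -> list[str]:
    """Return the names of metrics that fall below their minimum threshold."""
    return [name for name, minimum in thresholds.items() if metrics[name] < minimum]


# Hypothetical evaluation labels and predictions.
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 1, 0, 0, 1, 1, 0]
metrics = classification_metrics(y_true, y_pred)
failures = failed_checks(metrics, {"accuracy": 0.7, "precision": 0.7, "recall": 0.8})
print(metrics, failures)
```

In a CI job, a non-empty list of failures would typically fail the build so the model is not packaged or deployed.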

Continuous Deployment (CD):

- Deployment automation: Automate the deployment of your model to the production environment upon successful CI tests.

- Infrastructure management: Use tools like Kubernetes to manage and scale your ML infrastructure.

- Monitoring and feedback: Continuously monitor your model’s performance in production and implement feedback loops to improve its accuracy and reliability.
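One simple form of the monitoring described above is to track accuracy over a rolling window of recent predictions and raise a flag when it drops below a baseline. The sketch below assumes that true labels eventually become available in production. The class name `AccuracyMonitor`, the baseline, the tolerance, and the window size are all illustrative assumptions.

```python
from collections import deque


class AccuracyMonitor:
    """Track accuracy over the most recent predictions and flag degradation."""

    def __init__(self, baseline: float, tolerance: float = 0.05, window: int = 100):
        self.baseline = baseline
        self.tolerance = tolerance
        # Each entry is True where the prediction matched the label.
        self.outcomes = deque(maxlen=window)

    def record(self, prediction, label) -> None:
        self.outcomes.append(prediction == label)

    def rolling_accuracy(self) -> float:
        if not self.outcomes:
            return float("nan")
        return sum(self.outcomes) / len(self.outcomes)

    def needs_attention(self) -> bool:
        """True once the window is full and accuracy is below baseline - tolerance."""
        window_full = len(self.outcomes) == self.outcomes.maxlen
        return window_full and self.rolling_accuracy() < self.baseline - self.tolerance


monitor = AccuracyMonitor(baseline=0.90, tolerance=0.05, window=10)
for prediction, label in [(1, 1)] * 7 + [(1, 0)] * 3:
    monitor.record(prediction, label)
print(monitor.rolling_accuracy(), monitor.needs_attention())
```

A flag like this can feed the feedback loop, for example by triggering an alert or a retraining job.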

Best Practices for MLOps

- Modularize your code: Break down your ML workflow into smaller, independent modules for easier testing and deployment.

- Use containerization: Containerize your model and its dependencies for portability and reproducibility across different environments.

- Leverage cloud platforms: Utilize cloud platforms like AWS, Azure, or GCP for their managed services and automated infrastructure management capabilities.

- Focus on observability: Implement comprehensive monitoring and logging to track model performance, identify issues, and diagnose errors quickly.

- Automate as much as possible: Strive to automate all aspects of your CI/CD pipeline to reduce manual intervention and potential errors.

- Test, test, test: Thoroughly test your code, model, and deployment process to ensure quality and reliability.

- Iterate and improve: Continuously monitor and analyze your ML pipeline, identify areas for improvement, and implement changes to optimize performance and efficiency.


FAQs (Frequently Asked Questions)

What is an ML model?

Machine learning models are computer programs that are used to recognize patterns in data or make predictions. They are created from machine learning algorithms, which undergo a training process using labeled, unlabeled, or mixed data.

What are the 4 kinds of machine learning models?

Imagine teaching a learner to play a game:

- Supervised: You show them examples (good moves) and tell them the results (win/lose).

- Unsupervised: You give them a bunch of game states and let them figure out what works.

- Semi-supervised: You show them a few good moves, then let them play on their own.

- Reinforcement: You reward them for good moves (points!) so they learn by playing.

Which is the best ML model?

There’s no single “best” one because each is good for a specific job. Some models are good for learning from examples. Others are good at spotting patterns in unlabeled data.

How many models are in ML?

There are many different models, but they can be grouped into a few categories