Machine learning (ML) gives many industries powerful tools for uncovering patterns, making predictions, and automating tasks. With so many algorithms available, though, choosing the right one for a specific problem can feel like navigating a labyrinth. The Machine Learning Operations (MLOps) lifecycle provides a structured framework for developing, deploying, and managing ML models efficiently and reliably. This chapter focuses on one stage of that lifecycle: choosing the right machine learning algorithm. We explore common supervised and unsupervised learning algorithms and analyze their strengths, weaknesses, and suitability for different tasks.

Machine Learning tasks: Navigating the MLOps Stages

The MLOps lifecycle is a cyclical process that orchestrates various stages, each playing a vital role in the success of the final ML solution. These stages can be summarized as follows:

- Problem Definition and Data Understanding: This initial stage sets the course for the entire project. It involves defining the business problem and aligning it with potential ML solutions, followed by a thorough exploration and understanding of the available data.

- Data Acquisition and Preparation: Here, the necessary data is collected and readied for model training. Data cleaning, feature engineering, and transformation are crucial for ensuring high-quality model inputs.

- Model Development and Training: This stage focuses on building and training the ML model. It encompasses selecting an appropriate algorithm, tuning hyperparameters, and iteratively improving the model’s performance. This is the stage this chapter focuses on.

- Model Evaluation and Selection: The trained model’s performance is evaluated on unseen data to assess its effectiveness in solving the defined problem. Metrics like accuracy, precision, and recall become the judges, helping select the best-performing model for deployment.

- Model Deployment and Monitoring: The chosen model is deployed into production, where it interacts with real-world data and generates predictions. Continuous monitoring ensures the model’s performance remains optimal over time.

- Model Governance and Feedback: Ethical considerations, fairness, and explainability are addressed throughout the lifecycle. Feedback loops are established to capture real-world performance insights and incorporate them into future iterations.
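The evaluation stage above names accuracy, precision, and recall. As a minimal sketch of how these are computed for a binary classifier, the snippet below counts true and false positives and negatives by hand. The function name `classification_metrics` and the two label lists are made up for illustration; a real project would more likely rely on a library implementation.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Return accuracy, precision, and recall for binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = len(pairs) - tp - fp - fn
    accuracy = (tp + tn) / len(pairs)
    # Guard against division by zero when nothing was predicted/labelled positive
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall}


# Hypothetical ground truth and predictions on held-out data
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 1, 0, 1, 0, 1, 1, 0]
metrics = classification_metrics(y_true, y_pred)
print(metrics)  # accuracy 0.625, precision 0.6, recall 0.75
```

Here precision and recall differ because the model raises two false alarms but misses only one positive, which is exactly the kind of trade-off accuracy alone hides.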

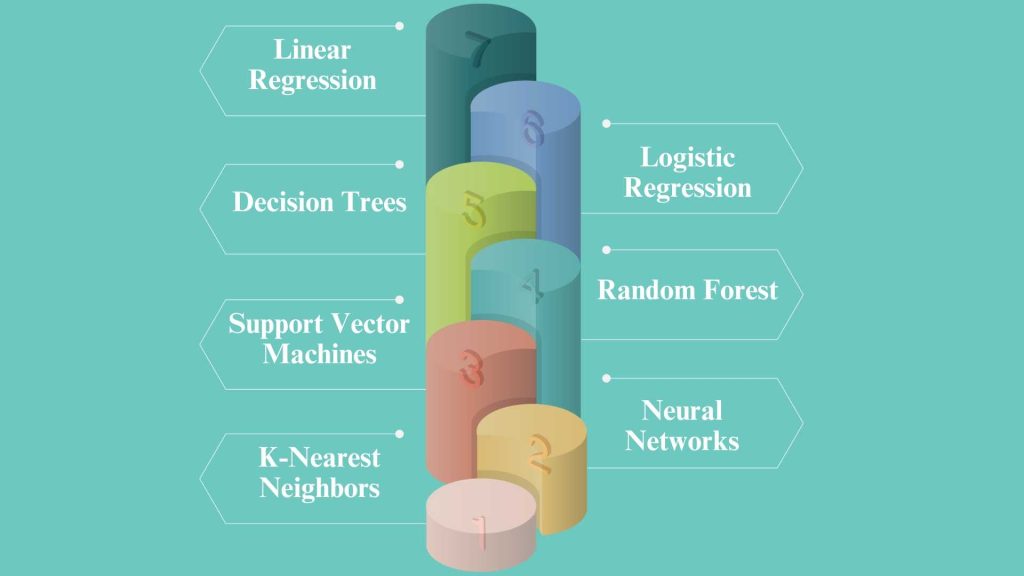

The Algorithm Arsenal: Supervised Learning Powerhouses

Supervised learning algorithms excel at learning from labeled data, where data points are associated with a desired output (target variable). Here, we explore some of the most common supervised learning algorithms and their ideal applications:

- Linear Regression

- Mechanism: Linear regression predicts the value of a dependent variable (y) from one or more independent variables (x). It assumes a linear relationship between x and y.

- Applications: It is used in predicting real-valued outputs like house prices or stock prices.

- Suitability: Best suited for problems with linear relationships and when the data is not too complex.
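To make the linear regression mechanism concrete, here is a small sketch of ordinary least squares for a single input variable, using the closed-form slope and intercept. The data (house sizes and prices) is invented and perfectly linear so the result is easy to check; `fit_line` is an illustrative helper, not a library function.

```python
def fit_line(xs, ys):
    """Least-squares fit of y = slope * x + intercept for one feature."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx  # assumes the xs are not all identical
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical data: size in square metres, price in thousands
sizes = [50, 70, 90, 110]
prices = [150, 210, 270, 330]

slope, intercept = fit_line(sizes, prices)
predicted = slope * 100 + intercept  # predicted price for a 100 m^2 house
print(slope, intercept, predicted)   # 3.0 0.0 300.0
```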

- Logistic Regression

- Mechanism: Despite its name, logistic regression is used for classification problems, not regression. It estimates probabilities using a logistic function.

- Applications: Commonly used for binary classification tasks like spam detection or determining if a customer will purchase a product.

- Suitability: Effective when the classes can be separated by a roughly linear decision boundary in the feature space.
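The logistic regression mechanism can be sketched in a few lines: a weighted sum of the features is passed through the logistic (sigmoid) function to get a probability, which is then thresholded into a class. The weights and bias below are hand-picked assumptions rather than values learned from data, and the function names are illustrative.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict_proba(features, weights, bias):
    """Probability of the positive class for one sample."""
    z = bias + sum(w * x for w, x in zip(weights, features))
    return sigmoid(z)


def predict(features, weights, bias, threshold=0.5):
    """Turn the probability into a 0/1 class label."""
    return 1 if predict_proba(features, weights, bias) >= threshold else 0


# Hypothetical, hand-picked parameters (a trained model would learn these)
weights, bias = [1.5, -0.5], -1.0

print(predict_proba([2.0, 1.0], weights, bias))  # about 0.82
print(predict([2.0, 1.0], weights, bias))        # 1
print(predict([0.0, 2.0], weights, bias))        # 0
```

The decision boundary is the set of points where the weighted sum equals zero, which is why the boundary is linear in the features.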

- Decision Trees

- Mechanism: This algorithm recursively splits the data to form a tree structure. Each internal node tests a feature, each branch represents an outcome of that test (a decision rule), and each leaf holds a prediction.

- Applications: Used in customer segmentation, diagnosis systems, and any scenario where clear decision rules need to be established.

- Suitability: Suitable for both numerical and categorical input and output variables. However, they are prone to overfitting.
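A decision tree is built by repeatedly choosing the split that makes the resulting groups as pure as possible. The sketch below shows one such step for a single numeric feature, scoring candidate thresholds with Gini impurity (one common criterion; others such as entropy exist). The data and the helper names `gini` and `best_threshold` are assumptions for illustration.

```python
from collections import Counter


def gini(labels):
    """Gini impurity: 0.0 for a pure group, higher for mixed groups."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def best_threshold(values, labels):
    """Return (threshold, weighted_gini) for the best 'value <= threshold' split."""
    best = (None, float("inf"))
    distinct = sorted(set(values))
    # Candidate thresholds are midpoints between consecutive distinct values
    for lo, hi in zip(distinct, distinct[1:]):
        threshold = (lo + hi) / 2
        left = [y for x, y in zip(values, labels) if x <= threshold]
        right = [y for x, y in zip(values, labels) if x > threshold]
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
        if score < best[1]:
            best = (threshold, score)
    return best


# Hypothetical data: customer age and whether they bought a product
ages = [22, 25, 30, 41, 47, 52]
bought = ["no", "no", "no", "yes", "yes", "yes"]

threshold, impurity = best_threshold(ages, bought)
print(threshold, impurity)  # 35.5 0.0
```

A full tree applies the same search recursively to each side of the split, which is also how it can keep splitting until it overfits.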

- Random Forest

- Mechanism: An ensemble method that constructs multiple decision trees during training. For classification, it outputs the class that is the mode of the individual trees’ predictions; for regression, it outputs their mean.

- Applications: Used in feature importance ranking, medical diagnosis, and stock market prediction.

- Suitability: More robust against overfitting than a single decision tree and can handle large datasets with higher dimensionality.
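The aggregation step of a random forest is simple enough to sketch directly. The snippet below assumes the individual trees already exist and only shows two ingredients: drawing a bootstrap sample (sampling rows with replacement, which is how each tree typically gets its own training set) and combining per-tree outputs by majority vote or by averaging. The votes and estimates are invented values.

```python
import random
from collections import Counter
from statistics import mean


def bootstrap_sample(rows, rng):
    """Sample len(rows) rows with replacement, as used to train each tree."""
    return rng.choices(rows, k=len(rows))


def majority_vote(predictions):
    """Most frequent class; ties go to the class seen first."""
    return Counter(predictions).most_common(1)[0][0]


rows = list(range(10))                     # stand-in for training rows
sample = bootstrap_sample(rows, random.Random(0))

# Hypothetical outputs of five classification trees for one email
tree_votes = ["spam", "ham", "spam", "spam", "ham"]
forest_class = majority_vote(tree_votes)   # "spam"

# Hypothetical outputs of three regression trees for one house
tree_estimates = [210.0, 190.0, 200.0]
forest_value = mean(tree_estimates)        # 200.0

print(forest_class, forest_value)
```

Because each tree sees a different sample and errs in different places, the combined vote tends to be more stable than any single tree.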

- Support Vector Machines (SVM)

- Mechanism: SVMs are a set of supervised learning methods used for classification, regression, and outlier detection. The core idea is to find the maximum-margin hyperplane: the one that separates the classes with the widest possible gap.

- Applications: Face detection, handwriting recognition, and classification of images.

- Suitability: Effective in high dimensional spaces and in cases where the number of dimensions exceeds the number of samples.
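To illustrate what a separating hyperplane and its margin look like, the sketch below evaluates a linear SVM's decision function for a given weight vector `w` and offset `b`. These parameters are hand-picked for the toy points, not produced by an SVM solver, so this shows only the prediction side, not training. With the usual scaling, where the closest points satisfy |w·x + b| = 1, the margin width is 2 / ||w||.

```python
import math


def decision_value(x, w, b):
    """Signed score w.x + b; its sign gives the predicted class."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def classify(x, w, b):
    return 1 if decision_value(x, w, b) >= 0 else -1


def margin_width(w):
    """Distance between the two margin boundaries: 2 / ||w||."""
    return 2.0 / math.sqrt(sum(wi * wi for wi in w))


# Hypothetical hyperplane x1 + x2 = 3 and four labelled points
w, b = [1.0, 1.0], -3.0
points = [([1.0, 1.0], -1), ([2.0, 2.0], 1), ([0.0, 1.0], -1), ([3.0, 2.0], 1)]

for x, y in points:
    print(x, y, classify(x, w, b), decision_value(x, w, b))

# Points whose score is exactly +1 or -1 lie on the margin (support vectors)
support = [x for x, y in points if y * decision_value(x, w, b) == 1.0]
print(support, margin_width(w))  # [[1.0, 1.0], [2.0, 2.0]] and about 1.414
```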

- Neural Networks

- Mechanism: Inspired by biological neural networks, these algorithms approximate a mapping function from input variables to outputs. They are composed of layers of nodes, each of which uses a nonlinear activation function.

- Applications: Broad, ranging from speech and image recognition to natural language processing.

- Suitability: Highly flexible and capable of modeling complex nonlinear relationships. Requires large amounts of data and computational power.
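The role of the nonlinear activation can be shown with a tiny forward pass. The network below has two hidden ReLU units and a linear output, with weights chosen by hand (not trained) so that it computes XOR, a function no purely linear model can represent. The architecture and weights are assumptions made for this illustration.

```python
def relu(z):
    """Rectified linear unit: a common nonlinear activation."""
    return max(0.0, z)


def forward(x1, x2):
    """Forward pass of a 2-input, 2-hidden-unit, 1-output network."""
    # Hidden layer: both units see x1 + x2, with different biases
    h1 = relu(1.0 * x1 + 1.0 * x2 + 0.0)
    h2 = relu(1.0 * x1 + 1.0 * x2 - 1.0)
    # Output layer: linear combination of the hidden activations
    return 1.0 * h1 - 2.0 * h2


outputs = {(a, b): forward(a, b) for a in (0, 1) for b in (0, 1)}
print(outputs)  # {(0, 0): 0.0, (0, 1): 1.0, (1, 0): 1.0, (1, 1): 0.0}
```

Removing `relu` would collapse the two layers into a single linear function, and the XOR pattern could no longer be produced. In practice the weights are learned from data, which is where the need for large datasets and compute comes from.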

- K-Nearest Neighbors (KNN)

- Mechanism: KNN classifies data points based on the “nearest neighbors” principle. A new data point is assigned the category of the majority of its k nearest neighbors in the training data.

- Applications: KNN is used in a variety of settings such as recommendation systems, image classification, and credit scoring.

- Suitability: It is simple and versatile, suitable for both classification and regression tasks. However, KNN can be computationally expensive for large datasets due to its need to compute the distance of each query instance to all training samples.
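The KNN description maps almost line for line onto code. This minimal sketch assumes numeric features, Euclidean distance, and a small invented training set; `knn_predict` is an illustrative name. Note that it sorts the whole training set for every query, which is the computational cost mentioned above.

```python
import math
from collections import Counter


def knn_predict(train, query, k=3):
    """Classify `query` by majority vote among its k nearest training points.

    `train` is a list of (features, label) pairs.
    """
    by_distance = sorted(train, key=lambda item: math.dist(item[0], query))
    nearest_labels = [label for _, label in by_distance[:k]]
    return Counter(nearest_labels).most_common(1)[0][0]


# Hypothetical labelled points forming two groups
train = [
    ((1.0, 1.0), "A"), ((1.5, 2.0), "A"), ((2.0, 1.0), "A"),
    ((6.0, 6.0), "B"), ((7.0, 5.5), "B"), ((6.5, 7.0), "B"),
]

print(knn_predict(train, (1.2, 1.4)))  # A
print(knn_predict(train, (6.4, 6.1)))  # B
```

For regression, the same neighbours would be used but their target values averaged instead of voted on.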

Choosing the Right Supervised Tool: When selecting a supervised learning algorithm, consider the following factors:

- Problem Type: When deciding on a supervised learning algorithm, it’s crucial to first determine the nature of the problem you are trying to solve.

- If your goal is to predict a continuous value, such as predicting house prices or stock prices, then regression algorithms would be more suitable.

- On the other hand, if you need to categorize data points into specific classes, such as classifying emails as spam or not spam, then classification algorithms are the way to go.

- Data Characteristics: Understanding the characteristics of your data is essential in selecting the appropriate supervised learning tool.

- Linear models like linear regression are effective when dealing with numerical data, where the relationship between variables can be represented linearly.

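- Support Vector Machines (SVMs) and K-nearest neighbors (KNN) rely on distances or inner products between data points, so categorical features must first be encoded numerically (for example, with one-hot encoding) and numerical features usually need scaling. Tree-based methods such as decision trees and random forests cope with mixed numerical and categorical features with less preprocessing.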

- Interpretability: Consider the level of interpretability you require from your model.

- If being able to interpret and explain how the model makes its predictions is important for your application, then opting for algorithms that offer transparency and explainability is key.

- Decision trees, for instance, provide a clear and intuitive way to understand how the model makes decisions based on the features of the data.

- Similarly, K-Nearest Neighbors (KNN) is a simple and interpretable algorithm that can be useful when transparency is a priority, especially compared to more complex models like neural networks or ensemble methods.

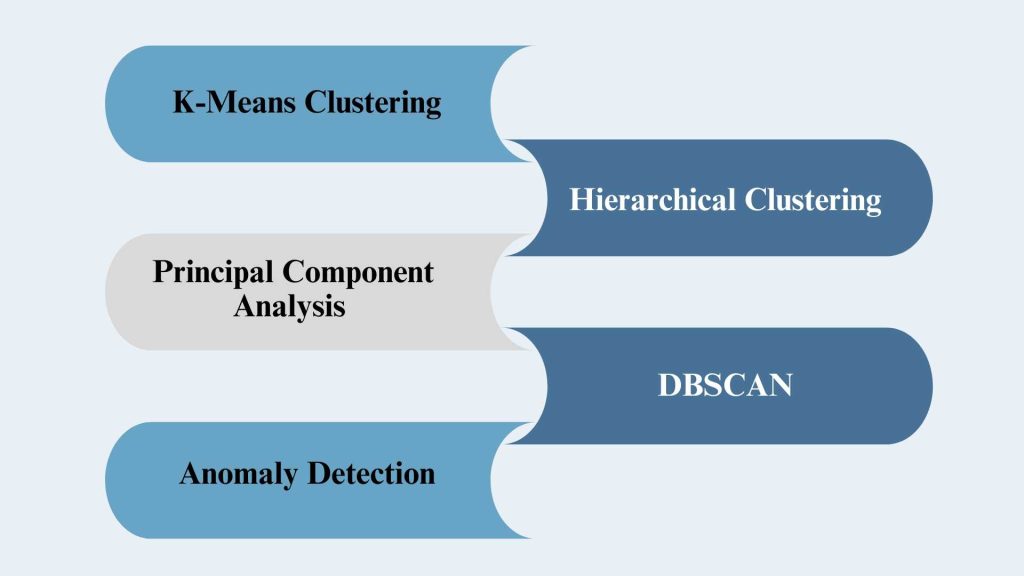

Unveiling the Unsupervised World: Pattern Finders

Unsupervised learning algorithms operate on unlabeled data, where data points lack a predefined category or target variable. These algorithms excel at uncovering hidden patterns, relationships, and structures within the data itself. Here, we explore some common unsupervised learning algorithms and their ideal applications:

- K-Means Clustering

- Mechanism: A method of vector quantization, originally from signal processing, that aims to partition n observations into k clusters in which each observation belongs to the cluster with the nearest mean.

- Applications: Market segmentation, document clustering, or organizing computing clusters.

- Suitability: Efficient for large datasets but can struggle with non-spherical clusters or clusters of different sizes and densities.
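The k-means procedure alternates two steps until nothing changes: assign each point to its nearest centroid, then move each centroid to the mean of its points. The sketch below does this for one-dimensional data to keep the arithmetic visible. The starting centroids are supplied by hand so the run is deterministic; real implementations usually choose them randomly or with a seeding heuristic.

```python
def kmeans_1d(points, centroids, max_iter=100):
    """Lloyd's algorithm on 1-D data; returns (centroids, clusters)."""
    centroids = list(centroids)
    clusters = [[] for _ in centroids]
    for _ in range(max_iter):
        # Assignment step: each point joins the cluster of its nearest centroid
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda i: abs(p - centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster
        new_centroids = [
            sum(c) / len(c) if c else centroids[i]
            for i, c in enumerate(clusters)
        ]
        if new_centroids == centroids:  # converged
            break
        centroids = new_centroids
    return centroids, clusters


# Hypothetical data with two obvious groups, and deliberately poor starting centroids
points = [1.0, 2.0, 3.0, 10.0, 11.0, 12.0]
centroids, clusters = kmeans_1d(points, centroids=[1.0, 3.0])
print(centroids)  # [2.0, 11.0]
print(clusters)   # [[1.0, 2.0, 3.0], [10.0, 11.0, 12.0]]
```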

- Hierarchical Clustering

- Mechanism: Builds a tree of clusters and does not require the number of clusters to be specified in advance. Clusters are formed hierarchically and can be visualized using a dendrogram.

- Applications: Used in taxonomy creation and in understanding group relationships within a dataset.

- Suitability: Suitable for datasets where the inherent data structure is unknown and a detailed cluster hierarchy is beneficial.
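One way to see how the hierarchy is built is the agglomerative (bottom-up) variant: start with every point in its own cluster and repeatedly merge the two closest clusters. The sketch below uses single linkage (distance between the closest pair of members) on one-dimensional data; both choices are assumptions made for brevity, and other linkages such as complete or average are equally common. The recorded merge distances are what a dendrogram plots on its height axis.

```python
def single_linkage(points):
    """Agglomerative clustering on 1-D points; returns the merge history.

    Each entry is (cluster_a, cluster_b, distance_at_merge).
    """
    clusters = [[p] for p in points]
    merges = []
    while len(clusters) > 1:
        best = None
        # Find the pair of clusters with the smallest single-linkage distance
        for i in range(len(clusters)):
            for j in range(i + 1, len(clusters)):
                d = min(abs(a - b) for a in clusters[i] for b in clusters[j])
                if best is None or d < best[0]:
                    best = (d, i, j)
        d, i, j = best
        merges.append((sorted(clusters[i]), sorted(clusters[j]), d))
        clusters[i] = clusters[i] + clusters[j]
        del clusters[j]
    return merges


# Hypothetical data
merges = single_linkage([1.0, 2.0, 6.0, 7.0, 15.0])
for a, b, d in merges:
    print(a, "+", b, "at distance", d)
# Merge distances, in order: 1.0, 1.0, 4.0, 8.0
```

Cutting the hierarchy at a chosen height gives a flat clustering: stopping before the merge at distance 4.0, for instance, leaves three clusters.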

- Principal Component Analysis (PCA)

- Mechanism: A statistical procedure that uses an orthogonal transformation to convert a set of observations of possibly correlated variables into a set of linearly uncorrelated variables called principal components, ordered by how much of the data’s variance each one explains.

- Applications: Used in exploratory data analysis and for making predictive models. It is commonly used for dimensionality reduction in data preprocessing.

- Suitability: Effective in reducing the dimensionality of data with many variables, thereby improving the efficiency of other algorithms.
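For two features, PCA can be worked out by hand, which makes the mechanism easier to follow: centre the data, compute the covariance matrix, and take its eigenvectors as the new axes. The sketch below uses the closed-form eigenvalues of a symmetric 2x2 matrix and keeps only the first component, reducing two dimensions to one. It is limited to 2-D input by design; general implementations use a numerical eigen or singular value decomposition. The sample points are invented.

```python
import math


def pca_2d(points):
    """Return (first component direction, explained variance ratio, 1-D projection)."""
    n = len(points)
    mean_x = sum(p[0] for p in points) / n
    mean_y = sum(p[1] for p in points) / n
    centered = [(x - mean_x, y - mean_y) for x, y in points]

    # Sample covariance matrix [[a, b], [b, c]]
    a = sum(x * x for x, _ in centered) / (n - 1)
    b = sum(x * y for x, y in centered) / (n - 1)
    c = sum(y * y for _, y in centered) / (n - 1)

    # Eigenvalues of a symmetric 2x2 matrix in closed form
    mid = (a + c) / 2
    spread = math.sqrt(((a - c) / 2) ** 2 + b ** 2)
    lam1, lam2 = mid + spread, mid - spread

    # Eigenvector belonging to the larger eigenvalue
    if b != 0:
        vx, vy = lam1 - c, b
    elif a >= c:
        vx, vy = 1.0, 0.0
    else:
        vx, vy = 0.0, 1.0
    norm = math.hypot(vx, vy)
    direction = (vx / norm, vy / norm)

    total = lam1 + lam2
    ratio = lam1 / total if total else 0.0
    projected = [x * direction[0] + y * direction[1] for x, y in centered]
    return direction, ratio, projected


# Hypothetical, perfectly correlated features: one component captures everything
direction, ratio, projected = pca_2d([(1, 1), (2, 2), (3, 3), (4, 4)])
print(direction)  # roughly (0.707, 0.707)
print(ratio)      # roughly 1.0
```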

- DBSCAN

- Mechanism: Stands for Density-Based Spatial Clustering of Applications with Noise. It groups points that are closely packed together, marking as outliers points that lie alone in low-density regions.

- Applications: Useful in anomaly and outlier detection, for example identifying fraudulent transactions.

- Suitability: Performs well when clusters have similar density. It is less suited to data with widely varying densities or to high-dimensional spaces.
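A compact, unoptimized sketch of DBSCAN helps show how density drives the result. A point with at least `min_pts` neighbours within radius `eps` (counting itself) is a core point; clusters grow outward from core points, and anything unreachable is labelled noise. The parameter values and sample points below are assumptions chosen for the example, and the neighbour search is a plain O(n²) scan rather than the spatial index a production implementation would use.

```python
import math


def dbscan(points, eps, min_pts):
    """Return one label per point: 0, 1, ... for clusters and -1 for noise."""

    def neighbors(i):
        return [j for j, q in enumerate(points) if math.dist(points[i], q) <= eps]

    labels = [None] * len(points)
    cluster = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        seeds = neighbors(i)
        if len(seeds) < min_pts:
            labels[i] = -1  # noise for now; may later become a border point
            continue
        cluster += 1
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster  # former noise becomes a border point
            if labels[j] is not None:
                continue  # already assigned; do not expand from it again
            labels[j] = cluster
            j_neighbors = neighbors(j)
            if len(j_neighbors) >= min_pts:  # j is a core point: keep growing
                queue.extend(j_neighbors)
    return labels


# Hypothetical data: two dense groups and one isolated point
points = [(1, 1), (1.2, 0.9), (0.9, 1.1), (5, 5), (5.1, 5.2), (4.9, 5.1), (9, 1)]
labels = dbscan(points, eps=0.5, min_pts=3)
print(labels)  # [0, 0, 0, 1, 1, 1, -1]
```

Unlike k-means, the number of clusters is not specified up front; it follows from `eps` and `min_pts`.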

- Anomaly Detection

- Mechanism: Anomaly detection techniques typically involve clustering algorithms to group data points and outlier detection methods like Isolation Forest to identify anomalies based on their deviation from the majority of the data.

- Applications: Anomaly detection can be applied in various fields such as cybersecurity for detecting fraudulent activities, in predictive maintenance to identify potential system failures before they occur, and in healthcare for spotting unusual patterns in patient data that may indicate health issues.

- Suitability: Anomaly detection is suitable for situations where identifying rare events or outliers is crucial for decision-making or problem-solving. It is particularly effective in scenarios where the majority of the data is normal, and anomalies represent a small but significant portion of the dataset.
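As a much simpler stand-in for methods like Isolation Forest, the sketch below flags outliers in a single numeric column using the modified z-score, which is based on the median and the median absolute deviation (MAD) so that the outliers themselves do not distort the baseline. This is a different technique from the ones named above, chosen because it fits in a few lines. The 0.6745 constant and the 3.5 cut-off are a commonly used convention, and the sensor readings are invented.

```python
from statistics import median


def mad_outliers(values, threshold=3.5):
    """Return the values whose modified z-score exceeds `threshold`."""
    med = median(values)
    mad = median([abs(v - med) for v in values])
    if mad == 0:
        return []  # no spread to measure deviations against
    return [v for v in values if 0.6745 * abs(v - med) / mad > threshold]


# Hypothetical sensor readings with one abnormal spike
readings = [10.1, 9.8, 10.3, 10.0, 9.9, 10.2, 25.0]
print(mad_outliers(readings))  # [25.0]
```

This matches the setting described above: most readings are normal, and the rare value that deviates strongly from them is the one worth investigating.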

Choosing the Right Unsupervised Tool: When selecting an unsupervised learning algorithm, consider the following factors:

- Task Objective: When choosing the right unsupervised learning tool, it’s crucial to first identify the task objective.

- If the goal is to group similar data points based on their inherent patterns and similarities, then clustering algorithms would be the ideal choice.

- On the other hand, if the aim is to reduce the dimensionality of the dataset to make it more manageable for further analysis, Principal Component Analysis (PCA) might be more suitable.

- Data Characteristics: Another important factor to consider is the nature of the data you are working with.

- Clustering algorithms typically perform well with numerical data, where they can identify patterns and similarities based on the numerical values of the features.

- However, some anomaly detection techniques are capable of handling categorical data as well, making them a good option for datasets with a mix of numerical and categorical variables.

- Understanding the characteristics of your data will help you select an unsupervised learning algorithm that is best suited for the task at hand.

- Interpretability: One challenge often faced with unsupervised learning algorithms, particularly clustering algorithms, is the interpretability of the results.

- While these algorithms can effectively group data points based on similarities, understanding the underlying logic behind the clusters formed can be complex.

- However, the use of visualization techniques can greatly aid in interpreting the results.

- Visualizing the clusters and relationships within the data can provide valuable insights into the patterns and structures present, making it easier to draw meaningful conclusions from the unsupervised learning process.

Machine Learning tasks: Understanding the Human Element

Choosing the right algorithm is crucial, but it’s not the only factor for successful ML models. Here are additional considerations:

- Data Quality: High-quality, clean, and relevant data is essential for building robust models. Techniques like data cleaning, handling missing values, and addressing outliers are critical before applying any algorithm.

- Feature Engineering: The features used to train the model significantly impact its performance. Feature engineering involves creating new features from existing ones or selecting the most informative features for the task at hand.

- Model Evaluation: After training, model performance needs to be evaluated on unseen data using relevant metrics. This ensures the model generalizes well to new data and avoids overfitting to the training data.
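Two of the points above, handling missing values and evaluating on unseen data, interact in a way that is easy to get wrong. The sketch below splits the data first and then computes the fill-in value from the training portion only, so that no information from the test set leaks into preprocessing. The helper names, the 25% test fraction, and the income values are all assumptions for illustration; mean imputation is just one simple strategy.

```python
import random
from statistics import mean


def train_test_split(rows, test_fraction=0.25, seed=0):
    """Shuffle a copy of the rows and hold out a fraction for testing."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_test = max(1, round(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]


def fit_mean_imputer(train_values):
    """Learn the fill value from the observed training values only."""
    return mean(v for v in train_values if v is not None)


def impute(values, fill_value):
    """Replace missing entries (None) with the learned fill value."""
    return [fill_value if v is None else v for v in values]


# Hypothetical feature column with missing entries
incomes = [42.0, None, 55.0, 61.0, None, 48.0, 50.0, 58.0]

train, test = train_test_split(incomes)
fill = fit_mean_imputer(train)
train_clean = impute(train, fill)
test_clean = impute(test, fill)  # test data reuses the training statistic

print(len(train_clean), len(test_clean))  # 6 2
```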

Collaboration is Key: Selecting the Optimal Solution

Choosing the right ML algorithm is not a one-person job. Effective collaboration between various stakeholders is key:

- Data Scientists: Their expertise lies in understanding various algorithms, their strengths and weaknesses, and the factors that influence algorithm selection.

- Domain Experts: Their knowledge of the business domain and the specific problem being addressed provides valuable context for selecting an algorithm that aligns with the overall business goals.

- Data Engineers: They can advise on the feasibility of implementing specific algorithms within the available computational infrastructure.

Conclusion:

Engaging in machine learning tasks involves comprehending the nature of the problem, understanding the nuances of the data, and exploring the capabilities of various algorithms. Data scientists can harness both supervised and unsupervised learning techniques in this pursuit. It’s crucial to take into account factors like interpretability, dimensionality, and collaboration with domain experts and data engineers to ensure that the selected algorithm is in line with the overarching business objectives. Armed with the appropriate tools, data scientists can proceed to the next phase of model development and training, moving steadily toward a solution that truly makes a difference in the real world.

FAQs:

1. What are the 3 main types of machine learning tasks?

The three main types of machine learning tasks are supervised learning, unsupervised learning, and reinforcement learning.

2. What is a task in machine learning?

A task in machine learning refers to a specific problem or objective that the algorithm is designed to solve or achieve, such as classification, regression, or clustering.

3. What is the most common machine learning task?

The most common machine learning task is supervised learning, where the algorithm learns from labeled data to make predictions or decisions.

4. What are the activities in machine learning?

The activities in machine learning include data preprocessing, feature engineering, model selection, training, evaluation, and deployment.

5. What are 2 main types of machine learning tasks?

The two most widely used types of machine learning tasks are supervised learning and unsupervised learning, each serving different purposes in extracting insights and making predictions from data. Reinforcement learning is usually counted as the third main type.