In the field of Natural Language Processing (NLP), one important task is to assess the relationship between texts. This task, known as Textual Entailment (also called natural language inference, or NLI), plays a vital role in NLP applications such as sentiment analysis, question answering, information retrieval, and machine translation. In this article, we will explore the concept of Textual Entailment, its significance in NLP, and the tools and techniques used to analyze the relationship between texts.

Introduction

NLP for Textual Entailment involves developing computational techniques that determine the logical relationship between two text fragments, specifically whether the meaning of one can be inferred from the other. Research in this area aims to deepen machines’ semantic understanding so that they can draw accurate conclusions from textual information. Advances in deep learning and contextualized word embeddings have substantially improved entailment models, which in turn benefit applications such as information retrieval, question answering, and automated reasoning. Continued work on entailment models holds considerable potential for advancing natural language understanding.

What is NLP (Natural Language Processing)?

Natural Language Processing (NLP) is a branch of AI that deals with the interaction between computers and human language. It encompasses tasks such as speech recognition, natural language understanding, and natural language generation. NLP aims to bridge the gap between human language and machine understanding, enabling computers to process and analyze textual data.

Understanding Textual Entailment

Definition

Textual entailment is the task of determining whether a text fragment (the hypothesis) can be inferred from another text fragment (the premise). Under the usual definition, the premise entails the hypothesis if a person reading the premise would conclude that the hypothesis is most likely true. Many benchmark datasets, such as SNLI and MultiNLI, use three labels: entailment, contradiction (the premise makes the hypothesis false), and neutral (the premise neither supports nor rules out the hypothesis). Textual entailment plays a crucial role in natural language processing applications such as question answering, information retrieval, and machine translation.

Examples

- Premise: “The cat is sleeping on the mat.”

Hypothesis: “A feline is resting on the floor covering.”

Relationship: Entailment

- Premise: “Sheila bought a new car.”

Hypothesis: “Someone purchased a vehicle.”

Relationship: Entailment
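The examples above need more than word matching. A lexical-overlap baseline, a classic starting point, scores a pair by the share of hypothesis words that also appear in the premise. The Python sketch below is a toy. The tokenizer, the function names, and the 0.5 threshold are illustrative assumptions, not a standard method.

```python
import re

def tokens(text: str) -> list[str]:
    """Lowercase the text and return its alphabetic word tokens."""
    return re.findall(r"[a-z]+", text.lower())

def overlap_score(premise: str, hypothesis: str) -> float:
    """Fraction of hypothesis tokens that also occur in the premise."""
    premise_vocab = set(tokens(premise))
    hyp_tokens = tokens(hypothesis)
    if not hyp_tokens:
        return 0.0
    return sum(tok in premise_vocab for tok in hyp_tokens) / len(hyp_tokens)

def predict(premise: str, hypothesis: str, threshold: float = 0.5) -> str:
    """Predict 'entailment' when the overlap reaches an arbitrary threshold."""
    if overlap_score(premise, hypothesis) >= threshold:
        return "entailment"
    return "not entailment"

premise = "The cat is sleeping on the mat."
hypothesis = "A feline is resting on the floor covering."
print(overlap_score(premise, hypothesis))  # 0.375 (only "is", "on", "the" match)
print(predict(premise, hypothesis))        # not entailment
```

On the cat example, the baseline misses the entailment because “feline”, “resting”, and “floor covering” share no words with “cat”, “sleeping”, and “mat”. It can also fail in the opposite direction. “The mat is sleeping on the cat.” reuses every premise word and scores 1.0, even though its meaning is different. The semantic techniques described later address both problems.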

Importance of Textual Entailment in NLP

Textual entailment plays a crucial role in Natural Language Processing (NLP) by enabling deeper understanding of text and enhancing the performance of various language-related tasks. Here are some key reasons why textual entailment is important in NLP:

- Text Understanding and Inference: Textual entailment allows NLP systems to go beyond surface-level understanding and delve into the implicit relationships between sentences. By determining whether one text implies or entails another, NLP models can accurately infer meaning and make more informed decisions based on the textual context.

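- Question Answering and Information Retrieval: Textual entailment helps question answering systems verify candidate answers. A passage supports an answer if it entails the statement formed by combining the question with that answer. In information retrieval, entailment can similarly indicate whether a retrieved document actually supports what the user is looking for.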

- Paraphrase Detection and Generation: Identifying paraphrases (textual variations with similar meaning) is a fundamental task in NLP. Textual entailment aids in paraphrase detection by determining if two texts entail each other, indicating their semantic equivalence.

- Sentiment Analysis and Opinion Mining: Textual entailment aids sentiment analysis by testing whether a text entails a sentiment-bearing hypothesis, such as “The reviewer is satisfied with the product.” This supports classifying the sentiment expressed in a sentence or document, which is useful for social media monitoring, customer feedback analysis, and brand reputation management.

- Machine Translation and Summarization: In machine translation, entailment helps compare source and target sentences at a semantic level, checking that the translated text preserves the meaning of the source. In summarization, checking that the source document entails each summary sentence helps detect content the source does not support.

- Dialogue Systems and Chatbots: Textual entailment helps dialogue systems and chatbots maintain coherence and logical consistency throughout a conversation. Entailment models check whether a candidate response contradicts earlier turns or is consistent with the user’s input. This helps the system select more contextually appropriate and coherent responses.
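The paraphrase criterion from the list above can be written directly in code: two texts are paraphrases when each entails the other. In this Python sketch the entailment check is a hypothetical placeholder. It tests word containment after mapping a few synonyms through a toy lexicon. A real system would call a trained entailment model in its place.

```python
from typing import Callable

# Toy synonym table mapping words to one canonical form (illustrative only).
SYNONYMS = {"delayed": "postponed", "automobile": "car"}

def normalize(text: str) -> set[str]:
    """Lowercase, strip a trailing period, and map synonyms to a canonical word."""
    return {SYNONYMS.get(word, word) for word in text.lower().rstrip(".").split()}

def toy_entails(premise: str, hypothesis: str) -> bool:
    """Hypothetical stand-in for a trained model: every hypothesis word must occur in the premise."""
    return normalize(hypothesis) <= normalize(premise)

def is_paraphrase(a: str, b: str, entails: Callable[[str, str], bool] = toy_entails) -> bool:
    """Treat two texts as paraphrases when each one entails the other."""
    return entails(a, b) and entails(b, a)

print(is_paraphrase("The meeting was postponed.", "The meeting was delayed."))  # True
print(is_paraphrase("The red car stopped.", "The car stopped."))              # False
```

The second pair fails because entailment runs in only one direction. “The red car stopped” entails “The car stopped”, but not the other way around.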

Tools and Techniques for Textual Entailment

Several tools and techniques are used in NLP to analyze and assess textual entailment. These include:

Semantic Role Labeling (SRL)

SRL identifies the predicate of a sentence and the roles its arguments play, such as who performed an action, what it was performed on, and where or when it happened. A system can compare the predicate-argument structures of the premise and hypothesis to check whether both describe the same event with compatible participants. This makes SRL a valuable source of information for textual entailment.
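The Python sketch below shows one way SRL output might be used. It compares hand-written predicate-argument frames for the “Sheila” example. It assumes PropBank-style role labels (ARG0 for the agent, ARG1 for the thing acted upon) and a tiny hypothetical lexicon of synonyms and is-a relations. In practice, the frames would come from an SRL system and the lexical knowledge from a resource such as WordNet.

```python
# Hand-written frames standing in for SRL output on the premise and hypothesis.
premise_frame = {"predicate": "buy", "ARG0": "Sheila", "ARG1": "car"}
hypothesis_frame = {"predicate": "purchase", "ARG0": "someone", "ARG1": "vehicle"}

# Toy lexical knowledge (hypothetical): predicate synonyms and is-a links.
PREDICATE_SYNONYMS = {"purchase": "buy"}
IS_A = {"car": {"vehicle"}, "Sheila": {"someone", "person"}}

def canonical_predicate(predicate: str) -> str:
    """Map a predicate to a canonical synonym so 'purchase' and 'buy' compare equal."""
    return PREDICATE_SYNONYMS.get(predicate, predicate)

def argument_supported(premise_arg: str, hyp_arg: str) -> bool:
    """A hypothesis argument is supported if it equals or generalizes the premise argument."""
    return hyp_arg == premise_arg or hyp_arg in IS_A.get(premise_arg, set())

def frame_entails(premise: dict, hypothesis: dict) -> bool:
    """Predicates must match and every hypothesis role must be supported by the premise."""
    if canonical_predicate(premise["predicate"]) != canonical_predicate(hypothesis["predicate"]):
        return False
    return all(
        role in premise and argument_supported(premise[role], arg)
        for role, arg in hypothesis.items()
        if role != "predicate"
    )

print(frame_entails(premise_frame, hypothesis_frame))  # True
print(frame_entails(hypothesis_frame, premise_frame))  # False: "someone" does not imply "Sheila"
```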

Dependency Parsing

Dependency parsing analyzes the grammatical structure of a sentence by identifying the relationships between words. It represents these relationships as directed, labeled links that together form a dependency tree. The links reveal, for example, which word is the subject of a verb and which is its object. This helps an entailment system compare who did what to whom in the premise and the hypothesis.
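The Python sketch below shows how dependency arcs can be turned into structures that are easy to compare. The parse of “Sheila bought a new car.” is written by hand with Universal Dependencies-style labels (nsubj, obj, det, amod). A real pipeline would obtain it from a dependency parser. Extracting (subject, verb, object) triples from both the premise and the hypothesis gives a system something concrete to match.

```python
from typing import NamedTuple, Optional

class Token(NamedTuple):
    text: str
    head: Optional[int]  # index of the governing word; None for the root
    dep: str             # dependency relation label

# Hand-written parse of "Sheila bought a new car." (a parser would normally produce this).
parse = [
    Token("Sheila", 1, "nsubj"),
    Token("bought", None, "root"),
    Token("a", 4, "det"),
    Token("new", 4, "amod"),
    Token("car", 1, "obj"),
]

def subject_verb_object(tokens: list[Token]) -> list[tuple[str, str, str]]:
    """Collect (subject, verb, object) triples from nsubj and obj arcs sharing a head word."""
    triples = []
    for i, verb in enumerate(tokens):
        subjects = [t.text for t in tokens if t.head == i and t.dep == "nsubj"]
        objects = [t.text for t in tokens if t.head == i and t.dep == "obj"]
        triples.extend((s, verb.text, o) for s in subjects for o in objects)
    return triples

print(subject_verb_object(parse))  # [('Sheila', 'bought', 'car')]
```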

Word Embeddings

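Word embeddings are numerical representations of words that capture their semantic meanings and relationships. They are learned from large text corpora using techniques such as Word2Vec and GloVe. Because these vectors are learned from the contexts in which words appear, words used in similar contexts, such as “cat” and “feline”, end up close together. This helps a model recognize when a hypothesis restates the premise in different words. Classic embeddings assign one vector per word regardless of the sentence it appears in; contextual representations such as BERT address this limitation.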
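The similarity between two embeddings is commonly measured with cosine similarity. The Python sketch below uses made-up three-dimensional vectors purely for illustration. Real Word2Vec or GloVe vectors have many more dimensions and are learned from data.

```python
import math

# Toy vectors invented for illustration, not taken from a trained model.
embeddings = {
    "cat":    [0.90, 0.80, 0.10],
    "feline": [0.85, 0.75, 0.20],
    "car":    [0.10, 0.20, 0.95],
}

def cosine(u: list[float], v: list[float]) -> float:
    """Cosine similarity: dot product divided by the product of the vector lengths."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

print(round(cosine(embeddings["cat"], embeddings["feline"]), 3))  # close to 1
print(round(cosine(embeddings["cat"], embeddings["car"]), 3))     # much lower
```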

Recurrent Neural Networks (RNNs)

RNNs are neural networks that process sequential data one element at a time while maintaining a hidden state that summarizes what they have seen so far. RNNs, including LSTM variants, have been widely used for textual entailment and other NLP tasks because they can model dependencies between words and capture the context of a sentence.
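The core of an RNN is a recurrence that updates the hidden state at every word. The Python sketch below implements a vanilla (Elman) RNN, h_t = tanh(W_x x_t + W_h h_{t-1} + b). Small hand-picked weights stand in for trained parameters, and the word vectors are toys.

The sketch then applies one common way sentence-encoder entailment models compare two encodings. It concatenates [p, h, |p - h|, p * h] (element-wise) and feeds the result to a classifier, which is omitted here.

```python
import math

def rnn_encode(inputs, W_x, W_h, b):
    """Run a vanilla RNN over a sequence of vectors and return the final hidden state.

    Each step computes h_t = tanh(W_x . x_t + W_h . h_{t-1} + b), starting from h_0 = 0.
    """
    size = len(b)
    state = [0.0] * size
    for x in inputs:
        state = [
            math.tanh(
                sum(W_x[i][j] * x[j] for j in range(len(x)))
                + sum(W_h[i][k] * state[k] for k in range(size))
                + b[i]
            )
            for i in range(size)
        ]
    return state

def combine(u, v):
    """Build the feature vector [u, v, |u - v|, u * v] for a downstream classifier."""
    return u + v + [abs(a - c) for a, c in zip(u, v)] + [a * c for a, c in zip(u, v)]

# Hypothetical weights (hidden size 2, input size 2) standing in for trained parameters.
W_x = [[0.5, -0.3], [0.2, 0.4]]
W_h = [[0.1, 0.0], [0.0, 0.1]]
b = [0.0, 0.0]

premise_vecs = [[1.0, 0.0], [0.0, 1.0]]     # toy word vectors in sentence order
hypothesis_vecs = [[0.0, 1.0], [1.0, 0.0]]  # the same words in reverse order

p = rnn_encode(premise_vecs, W_x, W_h, b)
h = rnn_encode(hypothesis_vecs, W_x, W_h, b)
features = combine(p, h)
print(len(features))  # 8
print(p != h)         # True: word order changes the encoding
```

Because the hidden state is updated word by word, the two sequences receive different encodings even though they contain the same words. A bag-of-words representation could not make this distinction.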

Attention Mechanisms

Attention mechanisms let a model weight some parts of the input more heavily than others during processing. In entailment models, attention can align each hypothesis word with the most relevant premise words (for example, “feline” with “cat”), which improves the accuracy of entailment predictions. Attention mechanisms have proven effective in tasks that require modeling complex relationships between texts.
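One widely used form is scaled dot-product attention, the variant used in Transformers; other entailment models use different scoring functions. In the Python sketch below, a query vector for the hypothesis word “feline” attends over vectors for the premise words “the”, “cat”, and “mat”. All vectors are invented for illustration. The resulting weights show how much each premise word contributes to the context vector built for “feline”.

```python
import math

def softmax(scores: list[float]) -> list[float]:
    """Convert raw scores into positive weights that sum to 1 (shifted by the max for stability)."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, keys, values):
    """Scaled dot-product attention for one query: softmax(q . k / sqrt(d)) weighting of the values."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    context = [sum(w * value[i] for w, value in zip(weights, values)) for i in range(len(values[0]))]
    return weights, context

premise_words = ["the", "cat", "mat"]
premise_vecs = [[0.1, 0.1, 0.1], [0.9, 0.8, 0.1], [0.2, 0.1, 0.9]]  # toy vectors
feline = [0.85, 0.75, 0.2]                                            # toy query vector

weights, context = attend(feline, premise_vecs, premise_vecs)
for word, weight in zip(premise_words, weights):
    print(f"{word}: {weight:.2f}")
```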

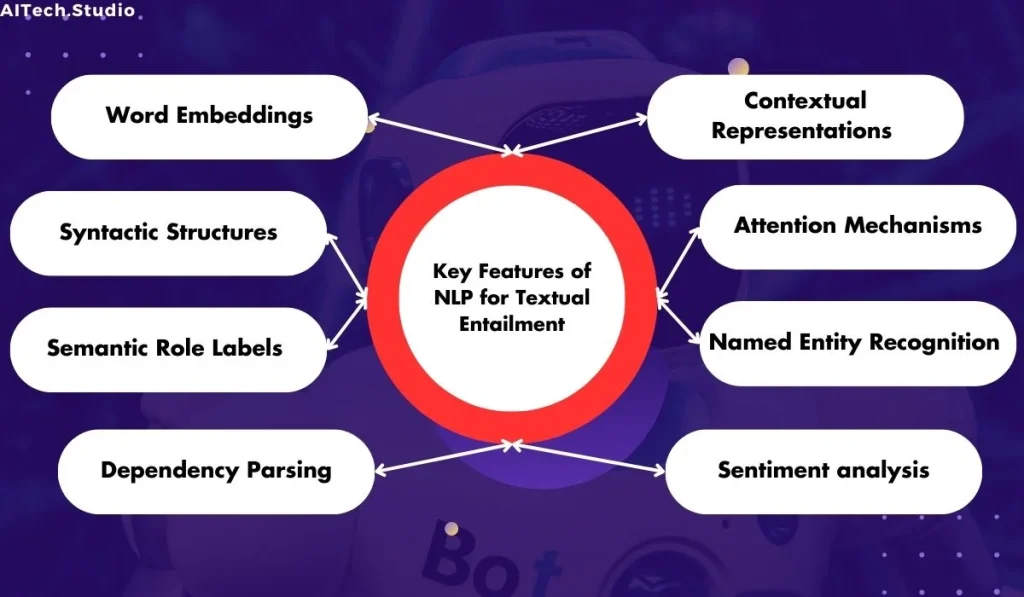

Features of NLP for Textual Entailment: Assessing the Relationship between Texts

- Word Embeddings: Word embeddings, such as Word2Vec or GloVe, represent words as dense numerical vectors in a high-dimensional space. These embeddings capture semantic similarity between words. This helps the model relate premise and hypothesis words that differ in form but are close in meaning.

- Syntactic Structures: Syntactic structures, such as parse trees or dependency graphs, provide information about the grammatical relationships between words in a sentence.

- Semantic Role Labels: Semantic role labels identify the predicate in a clause and the roles its arguments play, for example who performed an action and what it was performed on. Comparing these labels across the premise and hypothesis helps determine whether both describe the same event.

- Dependency Parsing: Dependency parses identify the grammatical relationships between words, such as subject and object links. They let a model check whether the premise and hypothesis assign the same participants to the same roles.

- Contextual Representations: Contextual representations, such as those obtained from models like BERT (Bidirectional Encoder Representations from Transformers), capture the contextual information of words based on their surrounding context.

- Attention Mechanisms: Attention mechanisms focus on specific parts of the text during processing. They allow the model to allocate more attention to relevant words or phrases, capturing the crucial information for textual entailment.

- Named Entity Recognition: Named Entity Recognition (NER) identifies and classifies named entities in text, such as person names, locations, organizations, or dates. Incorporating NER helps in recognizing important entities that can contribute to the understanding and assessment of textual entailment.

- Sentiment Analysis: Sentiment analysis techniques can be applied to the premise and the hypothesis. A clear polarity mismatch, such as a positive premise and a negative hypothesis about the same subject, can be a useful signal of contradiction.
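Early feature-based entailment systems turned each premise-hypothesis pair into a vector of hand-crafted signals and trained a standard classifier on it. Modern neural models learn such signals instead. The Python sketch below computes a few surface-level features of this kind. The feature names, the negation word list, and the tokenizer are illustrative assumptions. A fuller system would add features built from the embeddings, parse structures, and entity labels listed above.

```python
import re

# Hypothetical choices for illustration: a tiny negation list and four surface features.
NEGATIONS = {"not", "no", "never", "nobody", "nothing"}

def tokenize(text: str) -> list[str]:
    """Lowercase and split into alphanumeric tokens (contractions like "didn't" are not handled)."""
    return re.findall(r"[a-z0-9]+", text.lower())

def pair_features(premise: str, hypothesis: str) -> dict[str, float]:
    """Compute simple surface features for a premise-hypothesis pair."""
    p, h = tokenize(premise), tokenize(hypothesis)
    p_vocab = set(p)
    has_neg_p = any(t in NEGATIONS for t in p)
    has_neg_h = any(t in NEGATIONS for t in h)
    numbers_p = {t for t in p if t.isdigit()}
    numbers_h = {t for t in h if t.isdigit()}
    return {
        # Share of hypothesis tokens that also appear in the premise.
        "overlap": sum(t in p_vocab for t in h) / len(h) if h else 0.0,
        # 1.0 when exactly one of the two texts contains a negation word.
        "negation_mismatch": float(has_neg_p != has_neg_h),
        # Hypothesis length relative to premise length.
        "length_ratio": len(h) / len(p) if p else 0.0,
        # 1.0 when the hypothesis mentions a number absent from the premise.
        "unseen_number": float(bool(numbers_h - numbers_p)),
    }

print(pair_features("Sheila bought a new car.", "Sheila did not buy a car."))
# {'overlap': 0.5, 'negation_mismatch': 1.0, 'length_ratio': 1.2, 'unseen_number': 0.0}
```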

Steps Involved in Natural Language Processing for Textual Entailment: Assessing the Relationship between Texts

- Feature Extraction: After preprocessing steps such as tokenization, relevant features are extracted from the text. These can include word embeddings, syntactic structures, semantic role labels, and dependency parse trees. The objective is to capture the semantic and contextual information needed to assess the entailment relationship.

- Model Training: Researchers train textual entailment models using machine learning or deep learning architectures such as RNNs, CNNs, and Transformers. The training data consists of premise-hypothesis pairs annotated with entailment labels.

- Model Evaluation: After training the model, it is evaluated using a separate test set. Evaluation metrics such as accuracy, precision, recall, and F1 score are used to measure the model’s performance in predicting textual entailment accurately.

- Model Fine-tuning: Based on the evaluation results, the model is refined by adjusting hyperparameters, exploring different architectures, and incorporating additional techniques such as attention mechanisms or ensemble methods to improve performance.

- Inference and Prediction: Once the model is trained and fine-tuned, it can be used for inference. Given a new premise-hypothesis pair, it predicts whether the premise entails the hypothesis. In three-way setups, it instead predicts whether the relationship is entailment, contradiction, or neutral.

- Error Analysis and Iteration: It is essential to perform error analysis on the model’s predictions to identify common patterns or challenges. This analysis helps in understanding the limitations of the model and guides further iterations to enhance its performance.

- Application Integration: Finally, the trained textual entailment model is integrated into NLP applications such as sentiment analysis, question answering, information retrieval, and machine translation. It can also serve any other task that depends on understanding the relationship between texts.
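The evaluation step can be made concrete with a small metrics routine. The Python sketch below assumes the three-way label set (entailment, neutral, contradiction). It computes accuracy, per-class F1, and macro-averaged F1 from scratch on a made-up set of five predictions. In practice, a library such as scikit-learn provides equivalent functions, for example `sklearn.metrics.f1_score`.

```python
from collections import Counter

LABELS = ("entailment", "neutral", "contradiction")

def evaluate(gold: list[str], pred: list[str]) -> dict[str, float]:
    """Return accuracy, per-class F1, and macro-averaged F1 for entailment predictions."""
    if not gold or len(gold) != len(pred):
        raise ValueError("gold and pred must be non-empty and of equal length")
    tp, fp, fn = Counter(), Counter(), Counter()
    for g, p in zip(gold, pred):
        if g == p:
            tp[g] += 1
        else:
            fp[p] += 1  # predicted label p was wrong
            fn[g] += 1  # true label g was missed
    results = {"accuracy": sum(tp.values()) / len(gold)}
    f1_scores = []
    for label in LABELS:
        precision = tp[label] / (tp[label] + fp[label]) if tp[label] + fp[label] else 0.0
        recall = tp[label] / (tp[label] + fn[label]) if tp[label] + fn[label] else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        results[f"f1_{label}"] = f1
        f1_scores.append(f1)
    results["macro_f1"] = sum(f1_scores) / len(f1_scores)
    return results

gold = ["entailment", "entailment", "neutral", "contradiction", "contradiction"]
pred = ["entailment", "neutral", "neutral", "contradiction", "entailment"]
for name, value in evaluate(gold, pred).items():
    print(f"{name}: {value:.3f}")
# accuracy: 0.600, f1_entailment: 0.500, f1_neutral: 0.667,
# f1_contradiction: 0.667, macro_f1: 0.611
```

Macro-averaging gives each class equal weight, which matters when one label is much rarer than the others in the test set.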

Popular Tools and Services for Textual Entailment

- spaCy: spaCy is a popular, efficient open-source NLP library that focuses on speed and usability. It provides tokenization, part-of-speech tagging, named entity recognition, and dependency parsing, with pre-trained pipelines for many languages. spaCy does not ship a textual entailment model out of the box. However, its linguistic annotations are useful for preprocessing and feature extraction in an entailment pipeline, and its streamlined API makes it practical for processing large volumes of text.

- Microsoft Azure Cognitive Services: Microsoft Azure Cognitive Services is a cloud-based suite of APIs that lets developers add AI capabilities to their applications using pre-trained models. These capabilities include text analytics, speech recognition, and image recognition. Its language services can supply building blocks for an entailment pipeline, such as entity recognition and sentiment analysis, and the managed APIs simplify integrating these capabilities into applications.

- OpenAI GPT-3: OpenAI’s GPT-3 is a large language model that can be prompted, for example with a few labeled examples, to judge whether a hypothesis follows from a premise. Its accuracy depends on the prompt and the domain, so its predictions should be checked against labeled data before it is relied on for entailment tasks.

- IBM Watson Natural Language Understanding: IBM Watson Natural Language Understanding is a cloud NLP service that uses deep learning to extract information from text. Through its API it offers features such as sentiment analysis, entity recognition, and semantic role extraction, which can serve as inputs to an entailment system.

Applications of NLP and Textual Entailment

Textual Entailment has numerous applications in the field of NLP. Some notable applications include:

Sentiment Analysis

Understanding entailment between sentiment-related texts enhances sentiment analysis models, aiding accurate categorization of text as positive, negative, or neutral.

Question Answering Systems

Question answering systems use textual entailment to match questions with relevant text passages. They check whether a passage entails a candidate answer to the question. This lets them select the information needed to answer user queries accurately.
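A common way to apply entailment here has two steps. First, rewrite the question and a candidate answer as a declarative hypothesis. Then keep the candidates whose hypothesis the passage entails. The Python sketch below handles only the “Who ...?” question pattern. Its entailment check is a hypothetical word-containment placeholder that a trained model would replace.

```python
import re

def to_hypothesis(question: str, answer: str) -> str:
    """Rewrite a 'Who ...?' question plus a candidate answer as a declarative statement."""
    match = re.fullmatch(r"[Ww]ho (.+)\?", question.strip())
    if match is None:
        raise ValueError("this sketch only handles 'Who ...?' questions")
    return f"{answer} {match.group(1)}."

def words(text: str) -> set[str]:
    """Lowercased alphabetic words of a text."""
    return set(re.findall(r"[a-z]+", text.lower()))

def toy_entails(premise: str, hypothesis: str) -> bool:
    """Hypothetical placeholder for a trained model: every hypothesis word must occur in the premise."""
    return words(hypothesis) <= words(premise)

def validate_answers(question: str, candidates: list[str], passage: str) -> list[str]:
    """Keep the candidate answers whose hypothesis is entailed by the passage."""
    return [a for a in candidates if toy_entails(passage, to_hypothesis(question, a))]

passage = "Last week Sheila bought a new car from a local dealer."
question = "Who bought a new car?"
print(to_hypothesis(question, "Sheila"))                       # Sheila bought a new car.
print(validate_answers(question, ["Sheila", "Tom"], passage))  # ['Sheila']
```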

Information Retrieval

In information retrieval tasks, textual entailment helps retrieve documents or passages relevant to the user’s query. When the system takes entailment into account while ranking, the passages that actually support the query appear near the top of the results.
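Entailment-based re-ranking can be sketched as sorting retrieved passages by how strongly each one entails a statement derived from the query. In the Python example below, the query is already phrased as a statement. The scoring function is a hypothetical word-overlap placeholder; a real system would use a trained model’s entailment probability instead.

```python
import re

def words(text: str) -> set[str]:
    """Lowercased alphabetic words of a text."""
    return set(re.findall(r"[a-z]+", text.lower()))

def toy_entailment_score(premise: str, hypothesis: str) -> float:
    """Hypothetical stand-in for a model's entailment probability: share of hypothesis words in the premise."""
    hyp = words(hypothesis)
    return len(hyp & words(premise)) / len(hyp) if hyp else 0.0

def rerank(query_statement: str, documents: list[str]) -> list[str]:
    """Order documents so that those most likely to entail the query statement come first."""
    return sorted(documents, key=lambda doc: toy_entailment_score(doc, query_statement), reverse=True)

docs = [
    "The museum closes at noon on Sundays.",
    "Sheila bought a new car last week.",
    "Car prices rose last year.",
]
for doc in rerank("Sheila bought a car", docs):
    print(doc)
```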

Machine Translation

Textual entailment aids machine translation by checking that a translation preserves the meaning of its source sentence, for example with a cross-lingual entailment model that compares the source and its translation. This helps the system produce more accurate and contextually appropriate translations.

Challenges in Textual Entailment

While textual entailment has many benefits, it also poses several challenges, including:

- Ambiguity: A given text can have multiple interpretations and can therefore support different entailments. Resolving this ambiguity is crucial for accurate entailment analysis.

- Polysemy: Polysemy refers to words or phrases that have multiple meanings. It complicates entailment because the system must infer the intended sense of a word from its context. For example, “bank” in a premise about rivers does not support a hypothesis about financial institutions.

- Data Sparsity: Textual entailment models rely on large amounts of labeled data for training. Such data is costly to obtain, which leads to data sparsity and limited resources for model development.

- Domain Adaptation: Textual entailment models trained on one domain may perform poorly on texts from a different domain. Adapting these models to new domains remains an ongoing challenge in the field.

Future Directions and Advancements

Future progress in Textual Entailment is likely to come from transfer learning, pre-trained models, and the integration of external knowledge. These can help overcome the challenges above and improve accuracy and robustness.

- Enhanced Semantic Representation: Future work is likely to focus on richer semantic representations of premises and hypotheses.

- Integration of Deep Learning: Deep learning, which already underpins most current entailment models, will likely continue to drive progress.

- Multilingual Textual Entailment: As NLP expands beyond English, supporting entailment across many languages will become increasingly important.

- Contextualized Word Embeddings: Contextualized embeddings, such as those produced by BERT, will remain a central component of entailment models.

- Domain-Specific Entailment Models: Models tailored to specialized domains are another likely direction for future work.

- Explainable Textual Entailment: Making entailment models transparent and interpretable will be an important direction for future advancements.

Conclusion

Textual entailment is a core NLP task that benefits applications such as question answering, sentiment analysis, and machine translation. Ongoing research continues to improve the accuracy and robustness of entailment models.