Cross-validation is a crucial technique in machine learning for assessing model performance and generalizability. It involves partitioning the dataset into subsets for training and testing, allowing for multiple evaluations of the model’s effectiveness. On the other hand, regularization is a method used to prevent overfitting by adding a penalty term to the model’s cost function, discouraging complex models that may fit the training data too closely. By introducing constraints on the model parameters, regularization helps improve its ability to generalize to unseen data. Understanding the principles and applications of cross-validation and regularization is essential for building robust and also reliable machine-learning models. This introduction sets the foundation for exploring how these techniques work together to enhance model performance and prevent overfitting in various predictive modeling scenarios.

Understanding Overfitting and Model Generalizability

Overfitting and model generalizability are two closely related concepts in machine learning that are crucial to understanding for building effective predictive models.

Overfitting

Overfitting occurs when a model learns the training data too well, capturing noise and random fluctuations in the data, rather than the underlying patterns. As a result, the model performs exceptionally well on the training data but fails to generalize to new, unseen data. Overfitting is particularly common in complex models with a large number of parameters, such as deep neural networks.

Some key characteristics of overfitting:

- The model performs very well on the training data but poorly on the validation/test data.

- The model has learned the training data too specifically, failing to capture the broader patterns.

- Overfitting is more likely to occur when the model complexity is high relative to the amount of training data available.

Model Generalizability

Generalizability refers to the model’s ability to make accurate predictions on new, unseen data that has the same characteristics as the training set. A model with good generalizability can apply the learned patterns to novel instances, rather than just memorizing the training examples.

Factors that influence model generalizability:

- Size and quality of the training dataset – Larger, more representative datasets tend to improve generalization.

- Model complexity – Overly complex models are more prone to overfitting and poor generalization.

- Regularization techniques – Methods like L1/L2 regularization, dropout, and also early stopping can help improve generalization.

- Cross-validation – Evaluating model performance on held-out data provides a better estimate of generalization error.

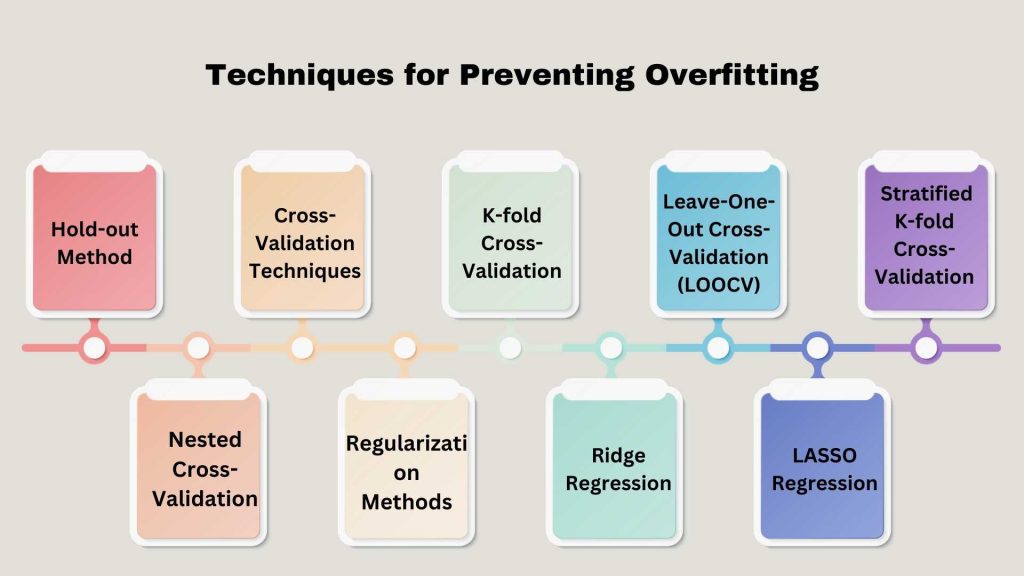

Techniques Cross-Validation & Regularization for Preventing Overfitting

Overfitting is a common challenge in machine learning, where a model performs exceptionally well on the training data but fails to generalize to new, unseen data. To address this issue, several techniques have been developed to prevent overfitting and improve model generalizability.

Hold-out Method

The hold-out method is a simple approach to evaluating model performance and also preventing overfitting. It involves splitting the available data into two distinct sets:

- Training set: Used to train the model.

- Validation/Test set: Used to evaluate the model’s performance on unseen data.

The key steps in the hold-out method are:

- Split the dataset into training and validation/test sets, typically with a 70:30 or 80:20 ratio.

- Train the model using the training set.

- Evaluate the model’s performance on the validation/test set.

- If the model performs well on the validation/test set, it is likely to generalize well to new data.

The hold-out method provides a realistic estimate of the model’s performance on unseen data, but it has a limitation: the model’s performance can vary depending on the specific split of the data. To address this, cross-validation techniques are often used.

Cross-Validation Techniques

Cross-validation is a more robust approach to evaluating model performance and preventing overfitting. It involves partitioning the data into multiple subsets and training/evaluating the model on different combinations of these subsets.

K-fold Cross-Validation

K-fold cross-validation is a widely used cross-validation technique. It involves the following steps:

- Divide the dataset into K equal-sized subsets (folds).

- Use K-1 folds as the training set and the remaining fold as the validation set.

- Train the model on the K-1 folds and evaluate its performance on the validation fold.

- Repeat steps 2-3 K times, using a different fold as the validation set each time.

- Calculate the average performance across all K validation folds as the final estimate of the model’s performance.

K-fold cross-validation offers a robust estimate of model performance compared to the hold-out method. It employs multiple validation sets, with common K values being 5 or 10, though the optimal value varies.

Leave-One-Out Cross-Validation (LOOCV)

Leave-One-Out Cross-Validation (LOOCV) is a special case of K-fold cross-validation where K is equal to the number of samples in the dataset. In LOOCV:

- The model is trained on all but one sample and then evaluated on the held-out sample.

- This process is repeated for each sample in the dataset, and also the average performance is calculated.

LOOCV is computationally expensive, as it requires training the model N times (where N is the number of samples). However, it can be useful for small datasets where K-fold cross-validation may not be feasible.

Stratified K-fold Cross-Validation

Stratified K-fold cross-validation is a variation of K-fold cross-validation that ensures the distribution of the target variable is approximately the same in each fold. 1 This is particularly useful when the dataset has an imbalanced target variable distribution.

The key steps in stratified K-fold cross-validation are:

- Divide the dataset into K folds, ensuring that the proportion of each target class is approximately the same in each fold.

- Use K-1 folds as the training set and the remaining fold as the validation set.

- Train the model on the K-1 folds and evaluate its performance on the validation fold.

- Repeat steps 2-3 K times, using a different fold as the validation set each time.

- Calculate the average performance across all K validation folds as the final estimate of the model’s performance.

Stratified K-fold cross-validation helps to ensure that the model is evaluated on a representative sample of the target variable distribution, which can improve the reliability of the performance estimate.

Nested Cross-Validation

Nested cross-validation is a more advanced cross-validation technique that is used to estimate the model’s generalization performance and tune its hyperparameters simultaneously. It involves two levels of cross-validation:

- Outer cross-validation loop: Used to estimate the model’s generalization performance.

- Inner cross-validation loop: Used to tune the model’s hyperparameters.

The key steps in nested cross-validation are:

- Divide the dataset into K folds for the outer cross-validation loop.

- For each outer fold: Use the current outer fold as the test set.

- Calculate the average performance across all K outer folds as the final estimate of the model’s generalization performance.

Nested cross-validation provides a more reliable estimate of the model’s generalization performance, as it separates the model selection and performance evaluation processes. This helps to avoid biased performance estimates that can occur when hyperparameters are tuned on the same data used to evaluate the model.

Regularization Methods

It is another powerful technique for preventing overfitting and improving model generalizability. Regularization works by adding a penalty term to the model’s cost function, which discourages the model from becoming too complex and fitting the training data too closely.

Ridge Regression

Ridge regression is a form of linear regression that adds a penalty term proportional to the sum of the squares of the model coefficients. The ridge regression cost function is:

$L_{\text{ridge}}(\boldsymbol{\beta}) = \frac{1}{n} \sum_{i=1}^n \left(y_i – \boldsymbol{\beta}^T \mathbf{x}i\right)^2 + \lambda \sum{j=1}^m \beta_j^2$

where $\lambda$ is the regularization parameter that controls the strength of the penalty term. A larger value of $\lambda$ leads to smaller coefficient values, resulting in a simpler, more generalized model.

Ridge regression is particularly effective when the features in the dataset are highly correlated, as it can help to stabilize the model and prevent overfitting.

LASSO Regression

LASSO (Least Absolute Shrinkage and Selection Operator) regression is another form of regularized linear regression that uses the sum of the absolute values of the model coefficients as the penalty term. The LASSO regression cost function is:

$L_{\text{LASSO}}(\boldsymbol{\beta}) = \frac{1}{n} \sum_{i=1}^n \left(y_i – \boldsymbol{\beta}^T \mathbf{x}i\right)^2 + \lambda \sum{j=1}^m |\beta_j|$

The key difference between LASSO and ridge regression is that LASSO can perform feature selection by driving some of the coefficients to exactly zero, effectively removing those features from the model. This makes LASSO particularly useful when dealing with high-dimensional datasets with many irrelevant features.

Both ridge and LASSO regression require tuning the regularization parameter $\lambda$ to find the optimal balance between model complexity and generalization performance. This can be done using techniques like cross-validation.

In summary, techniques like the hold-out method aid in preventing overfitting in models. Various cross-validation approaches, including K-fold and LOOCV, also play a role. Stratified K-fold and nested cross-validation are among these diverse approaches. Additionally, regularization methods such as ridge and LASSO regression help prevent overfitting. These techniques collectively ensure models generalize effectively to new, unseen data. Generalization is crucial for the successful deployment of these models in real-world applications.

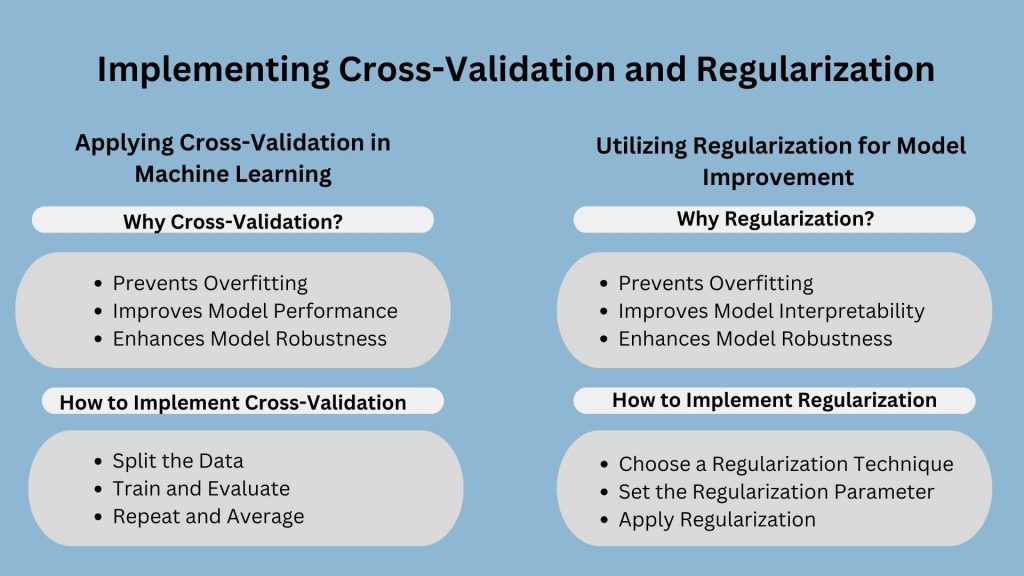

Implementing Cross-Validation and Regularization

Applying Cross-Validation in Machine Learning

Cross-validation is crucial in ML, evaluating model performance by splitting data and training on subsets. It prevents overfitting, ensuring the model’s complexity doesn’t hinder generalization to new data.

Why Cross-Validation?

- Prevents Overfitting: Cross-validation helps to prevent overfitting by ensuring that the model is not too complex and can generalize well to new data.

- Improves Model Performance: By evaluating the model on different subsets of the data, cross-validation provides a more accurate estimate of the model’s performance.

- Enhances Model Robustness: Cross-validation helps to identify and address issues in the model that may not be apparent from a single evaluation.

How to Implement Cross-Validation

- Split the Data: Split the available data into subsets, typically using a random or stratified approach.

- Train and Evaluate: Train the model on each subset and evaluate its performance on the remaining subset.

- Repeat and Average: Repeat the process for each subset and average the performance metrics to obtain a final estimate.

Utilizing Regularization for Model Improvement

Regularization is a powerful technique for improving model performance by reducing the complexity of the model. It works by adding a penalty term to the model’s cost function, which discourages the model from becoming too complex and fitting the training data too closely.

Why Regularization?

- Prevents Overfitting: Regularization helps to prevent overfitting by reducing the complexity of the model and ensuring it generalizes well to new data.

- Improves Model Interpretability: Regularization can improve model interpretability by reducing the number of parameters and also making the model more transparent.

- Enhances Model Robustness: Regularization can enhance model robustness by reducing the impact of noisy or irrelevant data on the model’s performance.

How to Implement Regularization

- Choose a Regularization Technique: Select a regularization technique, such as L1 or L2 regularization, based on the specific needs of the problem and the characteristics of the data.

- Set the Regularization Parameter: Determine the value of the regularization parameter, such as the strength of the penalty term, based on the specific needs of the problem and the characteristics of the data.

- Apply Regularization: Apply the chosen regularization technique to the model by adding the penalty term to the model’s cost function.

Combining Cross-Validation and Regularization

Combining cross-validation and regularization can provide even better results by ensuring that the model is not too complex and can generalize well to new data. This approach can be particularly effective when dealing with complex datasets or when the model is prone to overfitting.

Why Combine Cross-Validation and Regularization?

- Enhances Model Robustness: Integration of cross-validation and regularization enhances model robustness, mitigating the influence of noisy data. This integration reduces the impact of irrelevant data, thus improving model performance.

- Improves Model Performance: Combining cross-validation and regularization can improve model performance by ensuring that the model is not too complex and can generalize well to new data.

- Enhances Model Interpretability: Combining cross-validation and regularization can enhance model interpretability by reducing the number of parameters and making the model more transparent.

How to Combine Cross-Validation and Regularization

- Split the Data: Split the available data into subsets, typically using a random or stratified approach.

- Train and Evaluate: Train the model on each subset and evaluate its performance on the remaining subset.

- Apply Regularization: Apply the chosen regularization technique to the model by adding the penalty term to the model’s cost function.

- Repeat and Average: Repeat the process for each subset and average the performance metrics to obtain a final estimate.

Through cross-validation and regularization integration, practitioners craft robust models, proficient in generalizing to new data. This approach fosters models less susceptible to overfitting, ensuring effectiveness and robustness.

Evaluating Model Performance

Evaluating model performance ensures effective generalization and accurate predictions on new, unseen data. Assessing model fit with cross-validation and understanding regularization’s impact are crucial aspects.

Assessing Model Fit with Cross-Validation

It is a critical step in evaluating the generalization performance of a machine learning model. Cross-validation techniques, such as K-fold cross-validation and Leave-One-Out Cross-Validation (LOOCV), provide a robust way to estimate how well a model will perform on unseen data.

Importance of Cross-Validation

- Generalization Performance: Cross-validation helps to assess how well a model can generalize to new, unseen data by evaluating its performance on multiple subsets of the training data.

- Robust Performance Estimates: By iterative training and testing the model on different subsets of the data, cross-validation provides more reliable performance estimates compared to a single train-test split.

- Hyperparameter Tuning: Cross-validation can also be used for tuning hyperparameters to optimize the model’s performance.

Implementing Cross-Validation

- K-fold Cross-Validation: Dividing the data into K subsets and iteratively using each subset as a validation set while training on the remaining data.

- Leave-One-Out Cross-Validation (LOOCV): Similar to K-fold, each data point is used as a validation set in turn, making it computationally expensive but useful for small datasets.

Benefits of Cross-Validation

- Bias-Variance Tradeoff: Cross-validation helps to balance bias and variance by providing a more accurate estimate of the model’s generalization error.

- Model Selection: It aids in selecting the best model by comparing their performance across different validation sets.

Impact of Regularization on Model Accuracy

Regularization techniques, such as Ridge Regression and LASSO Regression, are crucial in improving model accuracy by preventing overfitting and reducing model complexity.

Role of Regularization

- Preventing Overfitting: Regularization adds a penalty term to the model’s cost function, discouraging overly complex models that may fit the training data too closely.

- Improving Generalization: By reducing model complexity, regularization helps the model generalize better to new, unseen data.

Types of Regularization

- Ridge Regression: Adds a penalty term proportional to the sum of the squares of the model coefficients, helping to stabilize the model and prevent overfitting.

- LASSO Regression: Uses the sum of the absolute values of the model coefficients as the penalty term, allowing for feature selection by driving some coefficients to zero.

Impact on Model Accuracy

- Model Stability: Regularization techniques improve model stability by reducing the impact of noisy or irrelevant data on the model’s performance.

- Optimal Model Complexity: By controlling the model complexity, regularization ensures that the model strikes the right balance between bias and variance, leading to improved accuracy.

In conclusion, assessing model fit with cross-validation and understanding the impact of regularization on model accuracy are essential steps in developing robust and accurate machine-learning models that can perform well on real-world data. By leveraging these techniques effectively, data scientists can ensure their models are reliable, stable, and capable of making accurate predictions.

Advanced Topics in Cross-Validation and Regularization

In the realm of machine learning, advanced topics like hyperparameter tuning and model selection using cross-validation play a pivotal role in optimizing model performance and ensuring robustness. These techniques go beyond basic model training and evaluation, offering sophisticated strategies to fine-tune models and select the best-performing ones.

Hyperparameter Tuning

Hyperparameters are parameters that are set before the learning process begins and control the learning process itself. Hyperparameter tuning involves finding the optimal values for these parameters to enhance model performance.

Importance of Hyperparameter Tuning

- Optimizing Model Performance: Hyperparameter tuning helps to find the best configuration for a model, leading to improved performance on unseen data.

- Avoiding Overfitting: Tuning hyperparameters can prevent overfitting by optimizing the model’s complexity.

- Enhancing Generalization: Finding the right hyperparameters can enhance the model’s ability to generalize well to new data.

Strategies for Hyperparameter Tuning

- Grid Search: Exhaustively searches through a specified parameter grid to find the best combination of hyperparameters.

- Random Search: Randomly samples hyperparameter values from predefined ranges, offering a more efficient search strategy.

- Bayesian Optimization: Uses probabilistic models to predict the performance of different hyperparameter configurations, guiding the search towards promising regions.

Model Selection using Cross-Validation

Model selection is the process of choosing the best model from a set of candidate models based on their performance metrics. Cross-validation is a powerful technique for model selection, allowing for a robust comparison of different models.

Significance of Model Selection

- Identifying the Best Model: Model selection helps to identify the model that performs best on unseen data, ensuring optimal predictive accuracy.

- Comparing Model Variants: It enables a systematic comparison of different model variants to choose the most suitable one for the task.

- Improving Generalization: Selecting the right model can enhance the model’s generalization ability and robustness.

Implementing Model Selection with Cross-Validation

- K-fold Cross-Validation: Utilizing K-fold cross-validation to evaluate multiple models and also select the one with the best average performance across folds.

- Nested Cross-Validation: Employing nested cross-validation for model selection, where an inner loop is used for hyperparameter tuning and an outer loop for model evaluation.

- Performance Metrics: Using appropriate performance metrics, such as accuracy, precision, recall, or F1 score, to compare and select the best model.

Benefits of Advanced Topics in Cross-Validation and Regularization

- Optimized Model Performance: Hyperparameter tuning and model selection enhance model performance by finding the best hyperparameters and model architecture.

- Improved Generalization: These techniques help to improve model generalization by preventing overfitting and selecting models that can generalize well to new data.

- Efficient Model Development: By systematically tuning hyperparameters and selecting the best model, moreover the model development process becomes more efficient and effective.

In conclusion, delving into advanced topics like hyperparameter tuning and model selection using cross-validation is essential for maximizing the performance and generalization capabilities of machine learning models. By mastering these techniques, data scientists can build more accurate, robust, and reliable models for a wide range of applications.

Case Studies and Practical Applications

Real-world Examples of Cross-Validation

Cross-validation is a widely used technique in various real-world machine-learning applications to ensure the robustness and generalizability of models. Here are some examples of how cross-validation is applied in practice:

Predicting Hospital Readmissions

- Problem: Predicting the risk of hospital readmission for patients, is crucial for improving patient outcomes and reducing healthcare costs.

- Approach: Researchers used a nested cross-validation approach to evaluate the performance of different machine learning models, including logistic regression, random forests, and gradient boosting, in predicting hospital readmissions.

- Benefits: The nested cross-validation approach helped to provide an unbiased estimate of the model’s performance and identify the best-performing model for this task.

Detecting Diabetic Retinopathy

- Problem: Developing an automated system to detect diabetic retinopathy, a leading cause of blindness, from retinal images.

- Approach: Researchers employed a 5-fold cross-validation strategy to evaluate the performance of deep learning models in classifying retinal images as having diabetic retinopathy or not.

- Benefits: Cross-validation ensured that the model’s performance was evaluated on unseen data, providing a reliable estimate of its ability to generalize to new patient cases.

Predicting Breast Cancer Recurrence

- Problem: Predicting the risk of breast cancer recurrence to guide treatment decisions and improve patient outcomes.

- Approach: A study used a leave-one-out cross-validation (LOOCV) approach to assess the performance of a machine learning model in predicting breast cancer recurrence based on clinical and genomic data.

- Benefits: LOOCV was particularly useful in this case, as the dataset was relatively small, and the technique allowed for the maximum utilization of the available data for model evaluation.

Successful Implementation of Regularization Techniques

Ridge Regression and LASSO Regression are utilized in real-world machine-learning applications to enhance model performance. Their application successfully prevents overfitting, ensuring robustness across various scenarios.

LASSO Regression is applied in real-world machine-learning to enhance model performance and prevent overfitting. Its successful application spans various scenarios, ensuring robustness and effectiveness.

Predicting Housing Prices

- Problem: Developing a model to accurately predict housing prices based on various features, such as location, size, and amenities.

- Approach: Ridge Regression and LASSO Regression were combined to balance model complexity against predictive accuracy. This combination helped researchers strike a balance between model complexity and predictive accuracy.

- Benefits: Ridge Regression stabilizes models, enhancing generalization by shrinking coefficients of correlated features toward zero. It improves generalization without eliminating highly correlated features from the model.

Classifying Spam Emails

- Problem: Building a model to accurately classify emails as spam or non-spam, which is crucial for effective email filtering and security.

- Approach: A study employed LASSO Regression to perform feature selection and build a parsimonious model for spam email classification.

- Benefits: LASSO Regression identifies key features, enhancing the distinction between spam and non-spam emails. This results in a more interpretable and efficient model for email classification.

Predicting Student Performance

- Problem: LASSO Regression identifies key features, enhancing the distinction between spam and non-spam emails. Similarly, This results in a more interpretable and efficient model for email classification.

- Approach: Ridge Regression and LASSO Regression were combined to balance model complexity against predictive accuracy. This combination helped researchers strike a balance between model complexity and predictive accuracy.

- Benefits: The hybrid Ridge and LASSO Regression approach captures relevant features while maintaining suitable complexity. However, It leads to enhanced predictive performance and improved generalization to new student data.

Real-world examples showcase cross-validation and regularization techniques’ practical applications, emphasizing their importance. They underscore the significance of building robust, reliable machine-learning models adept at generalizing to new data.

Conclusion

In Conclusion, cross-validation and regularization are crucial in machine learning to prevent overfitting and ensure robust model performance. Cross-validation techniques, such as K-fold and LOOCV, assess model performance on unseen data. Regularization methods like Ridge and Lasso Regression decrease model complexity to prevent overfitting. By combining these techniques, models can be optimized for better generalization and accuracy. Additionally, The significance of these techniques is underscored by their application in domains like image classification. Practical uses include spam detection and disease diagnosis, highlighting their importance across domains.